Game development software and tools include game engines, graphics and audio software, and Integrated Development Environments (IDEs). IDEs are software that combine all the common developer tools (code editors, debuggers, and compilers) into a single interface for ease of use. Using the correct tools during development helps make sure the game runs efficiently while giving players an immersive gameplay experience. The chosen software and tools always need to complement one another. Exporting an asset from a graphics tool to a game engine, for example, mustn’t reduce the asset’s quality.

Engines such as Unity and Unreal are known throughout the industry for clean workflows and support for large teams, and they integrate with external tools like Blender and Visual Studio. Small studios and solo devs are more likely to work with free, open-source software like Godot, or Krita for graphics. Devs also need to consider whether an engine supports their game’s visual style, as certain engines are built for 2D and offer little or no 3D support.

I expand on the 36 best game development software and tools below, including game engines, graphics and animation tools, audio software, IDEs and map editors. Read on to learn what software and tools are used by professionals in the game industry, with overviews of the toolsets they come with and which teams they’re best suited to.

1. Unity

Unity is a game engine from Unity Technologies (founded in 2004) that helps devs build 3D worlds for games, including character simulations. Unity supports cross-platform publishing, so devs are able to make one game and publish it across devices, including both VR and mobile. There’s a fully featured editor, launcher, C# integration, and tutorials for learners as well.

Unity Personal is free to use, making it ideal for indie devs, while Unity Pro has extended features and costs about $2200 a year, so bigger studios and teams go for it instead. Unity Hub acts as the launcher and project manager, letting devs open or start projects and switch Unity versions between projects. This is helpful when working with teams operating on an older version of Unity.

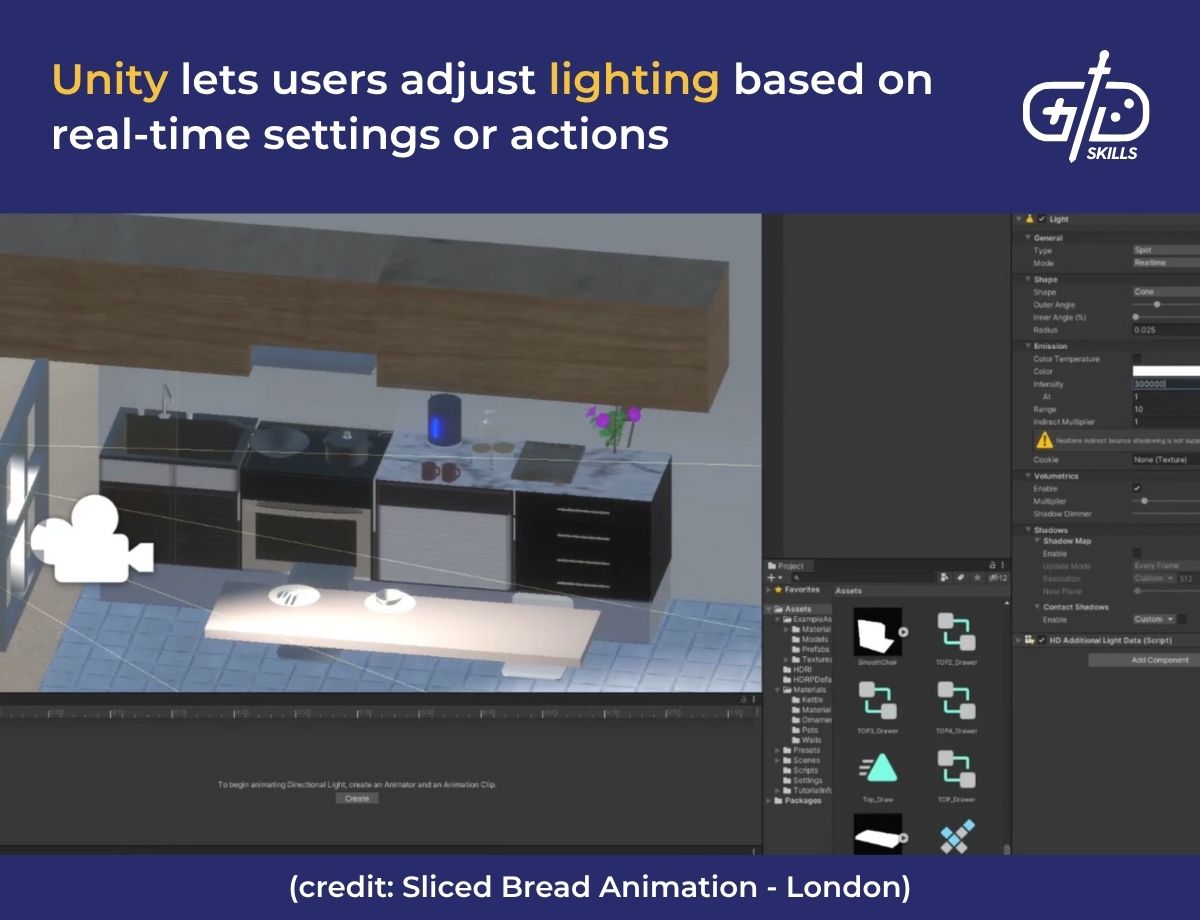

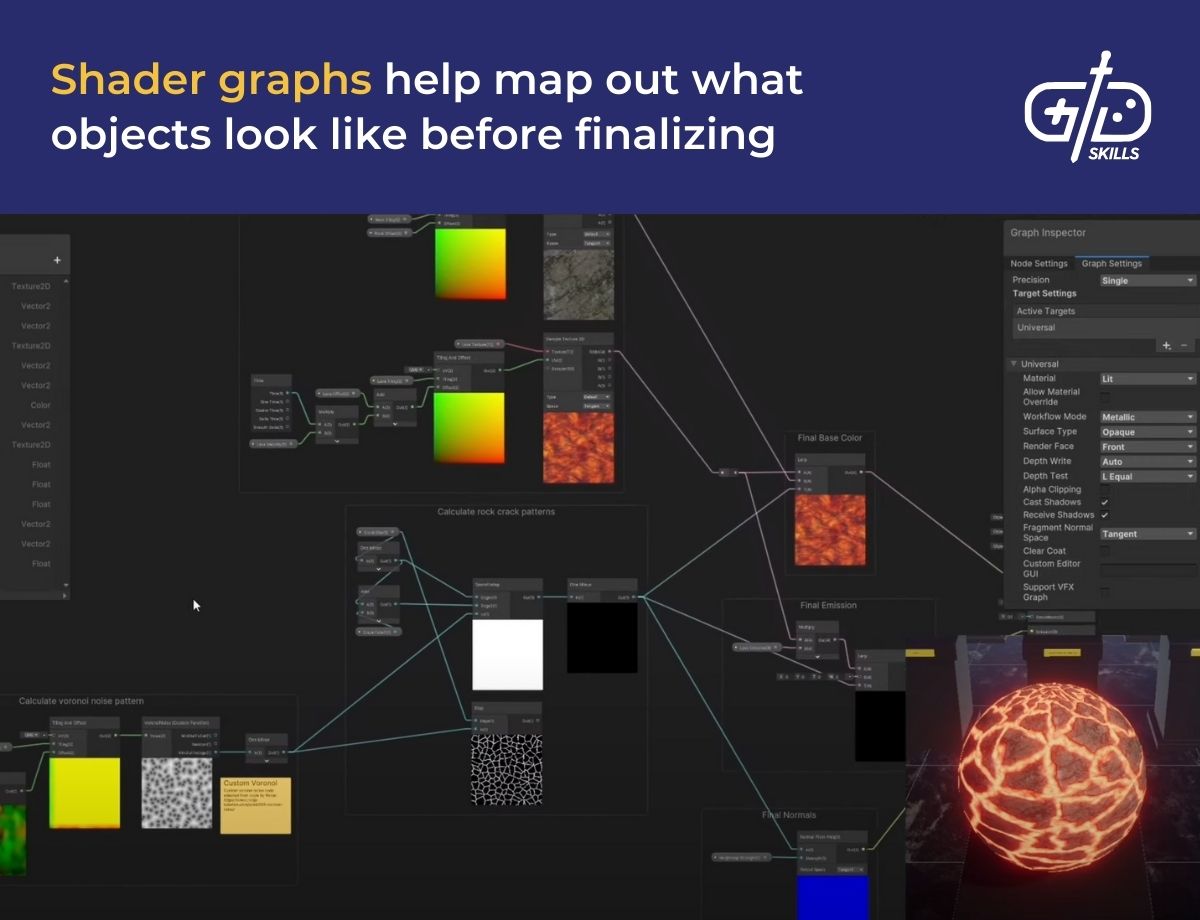

Unity’s editor interface is the main screen where devs build their game, repeatedly dragging in objects and testing the gameplay. The editor development tools show how the game runs, like the memory usage, and a timeline that controls events over time. Unity has a full range of lighting, physics and simulation tools that let devs create graphics and effects that change based on player actions, such as lighting up a dark hallway when the player uses a flashlight. The shader graphs are node-based systems that let devs add effects like glowing fireflies to create depth in the game world.

C# is Unity’s main scripting language, which has a chance of deterring devs more familiar with Python. Python is the most popular programming language at the moment, according to the TIOBE Index. There’s a visual scripting tool that allows devs to use logic blocks instead of writing code. Unity has learning tools built in to help devs figure out its core features, including tutorials on C# and beginner-friendly development courses.

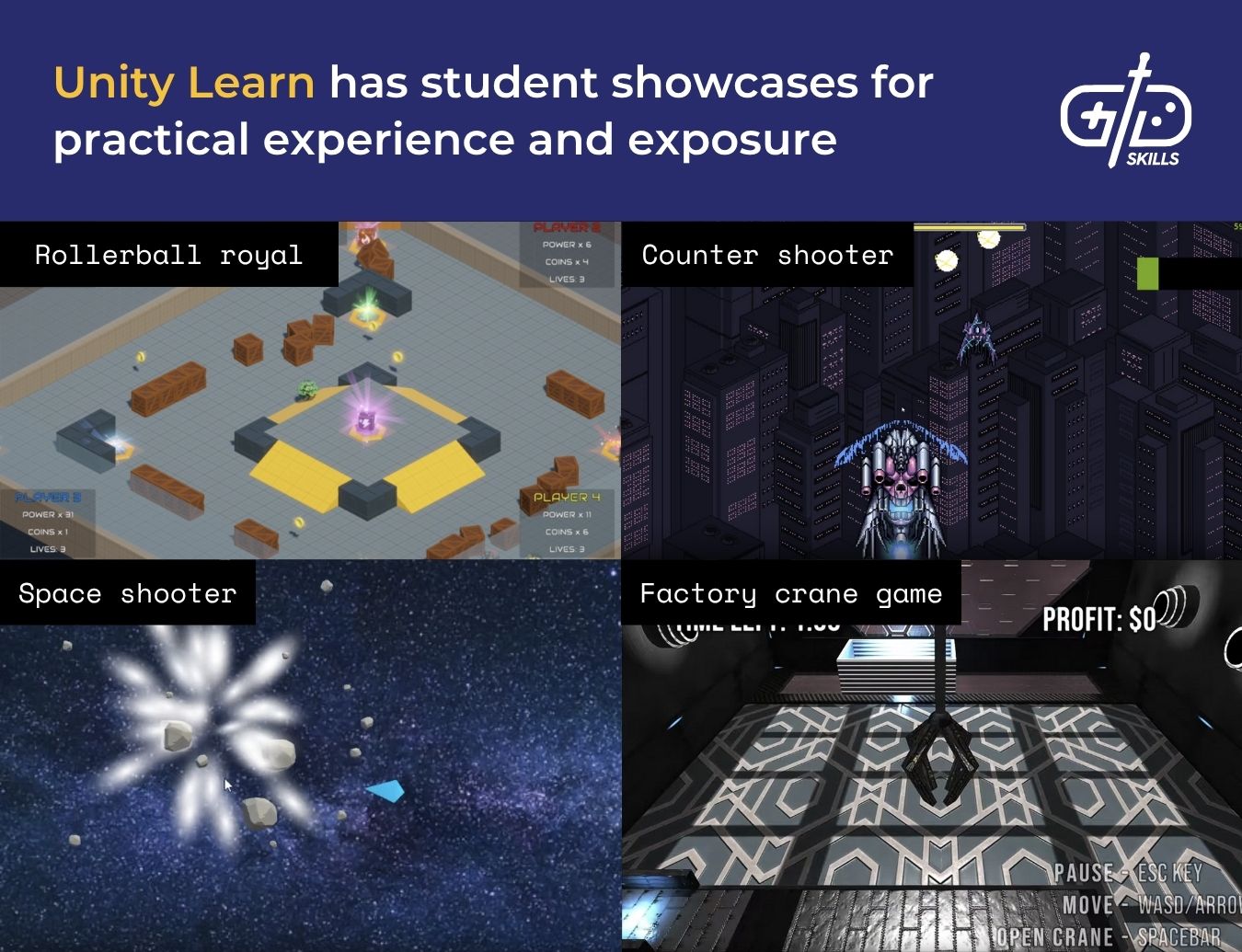

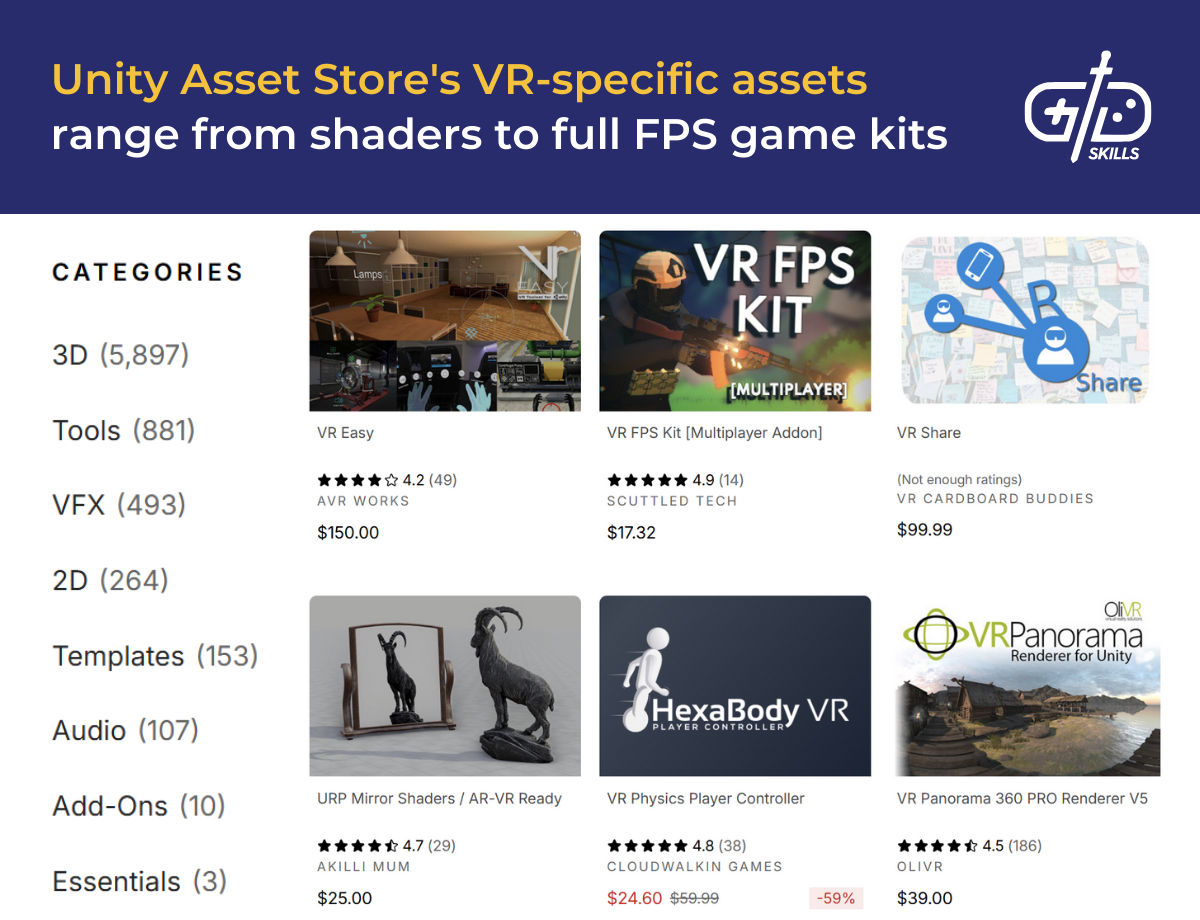

Unity Learn is available from within Unity itself, with free tutorials and courses. The courses range from teaching indie and mobile game devs how to build a 2D platformer to multiplayer, VR and large-scale projects that appeal to experienced devs. External platforms like Udemy, Coursera and edX offer courses specifically for Unity, geared to all skill levels. Unity also has built-in asset stores, editors and management hubs for quick development. The Unity Asset Store has downloadable game parts like characters, graphics and sounds. The VR-ready content library saves time when developing resource-heavy games.

Unity is used most frequently for VR game development because it lets devs build one VR game and run it on multiple headsets and systems, like Meta Quest and Pico. There’s an active dev community online, with multiple forums and Discord servers that assist devs with picking the right Unity version for their VR projects, such as choosing between LTS (for games heading to release) and Tech Stream (for experimentation).

2. Unreal Engine

Unreal Engine is a game engine developed by Epic Games with AAA-standard graphics, letting devs build realistic visuals. The engine is optimized for large open worlds and supports multiple platforms from mobile to high-end PCs. Unreal is free until a game earns $1 million in gross revenue, after which Epic requires a 5% royalty.
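
The royalty threshold is easier to reason about with numbers. The sketch below is only an illustration: the function name is hypothetical, and it assumes a 5% rate applied solely to lifetime gross revenue above the $1 million threshold. Epic’s current license terms are the authority on the exact rules.

```python
def royalty_due(gross_revenue: float,
                threshold: float = 1_000_000.0,
                rate: float = 0.05) -> float:
    """Estimate the royalty owed on revenue above the threshold.

    Both defaults are assumptions for illustration, not quoted license terms.
    """
    # Revenue below the threshold is exempt, so it never goes negative.
    royalty_base = max(0.0, gross_revenue - threshold)
    return royalty_base * rate


if __name__ == "__main__":
    for revenue in (800_000, 1_000_000, 1_500_000):
        print(f"${revenue:,} gross -> ${royalty_due(revenue):,.2f} royalty")
```

A game that never crosses the threshold owes nothing under this model, which is why the arrangement suits small teams.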

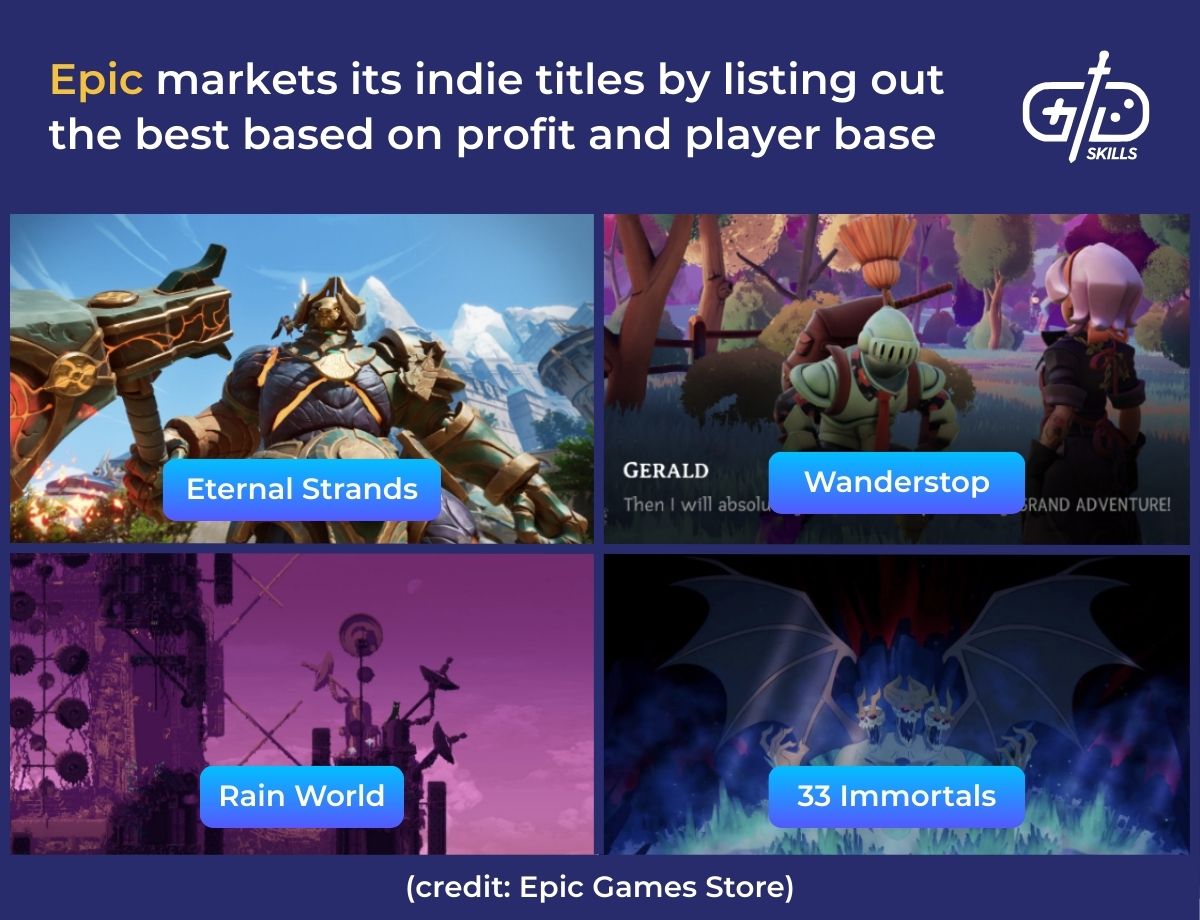

Epic Games is known for games like Fortnite, which is a multiplayer game with high-quality visuals. The revenue that came from Fortnite gave Epic room to collaborate with large studios and to invest in indie titles. Kena: Bridge of Spirits, for example, is an indie title built in Unreal that was showcased on the Epic Games Store. Unreal Engine plays into this since it gives both major and indie studios access to the same set of tools. The list below gives an overview of the tools built into Unreal.

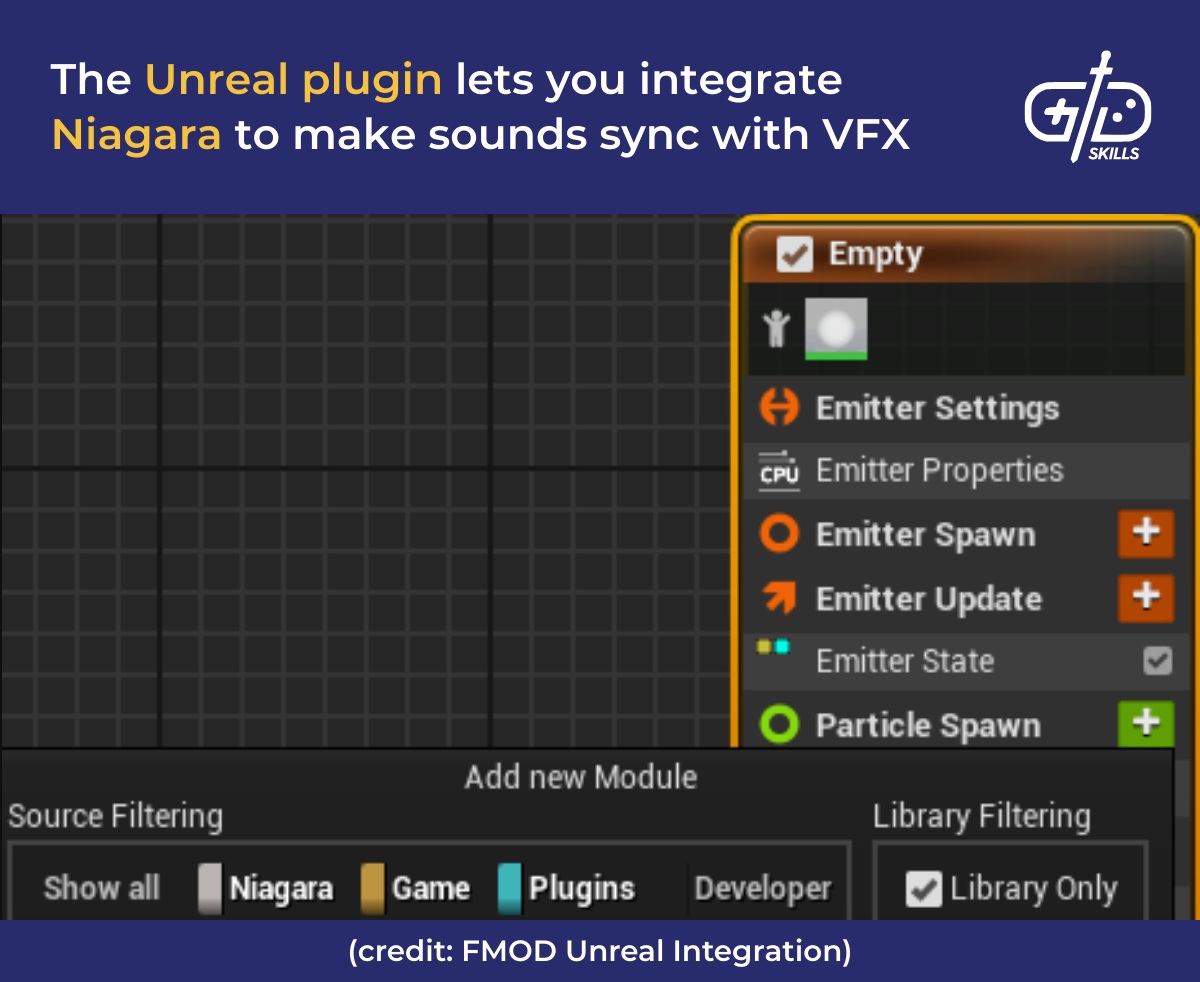

- Niagara VFX helps designers build particle effects like fire or smoke

- World Partition splits large worlds into grid cells and streams in only the cells near the player, which saves memory

- Material Editor helps design surfaces like metal to be realistic and fit the game concept

- MetaHuman Creator lets devs create realistic characters quickly

- Sequencer ties scenes together, and lets devs use camera shots for cinematic appeal
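
The idea behind World Partition, loading only part of a world at a time, can be sketched in a few lines. This is a simplified model, not Unreal’s implementation: the function names, the square grid and the square loading range are all assumptions made for illustration.

```python
import math


def cells_in_range(player_x, player_y, cell_size, radius):
    """Return the (col, row) grid cells overlapped by a square around the player."""
    min_col = math.floor((player_x - radius) / cell_size)
    max_col = math.floor((player_x + radius) / cell_size)
    min_row = math.floor((player_y - radius) / cell_size)
    max_row = math.floor((player_y + radius) / cell_size)
    return {(col, row)
            for col in range(min_col, max_col + 1)
            for row in range(min_row, max_row + 1)}


def streaming_update(loaded, wanted):
    """Work out which cells to load and which to unload this frame."""
    return wanted - loaded, loaded - wanted


loaded = cells_in_range(50, 50, cell_size=100, radius=10)
wanted = cells_in_range(150, 50, cell_size=100, radius=10)
to_load, to_unload = streaming_update(loaded, wanted)
print("load:", sorted(to_load), "unload:", sorted(to_unload))
```

Memory stays bounded because the number of loaded cells depends on the loading range, not on the total size of the world.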

The tools make Unreal ideal for VR since VR prioritizes realistic visuals, and Unreal handles open worlds better than Unity and Godot. Devs need to choose between Unreal Engine 4 (UE4) and Unreal Engine 5 (UE5) depending on their project’s scope. UE4 is ideal for small projects since it’s lightweight, whereas UE5 is better optimized for realism with the built-in Nanite and Lumen systems.

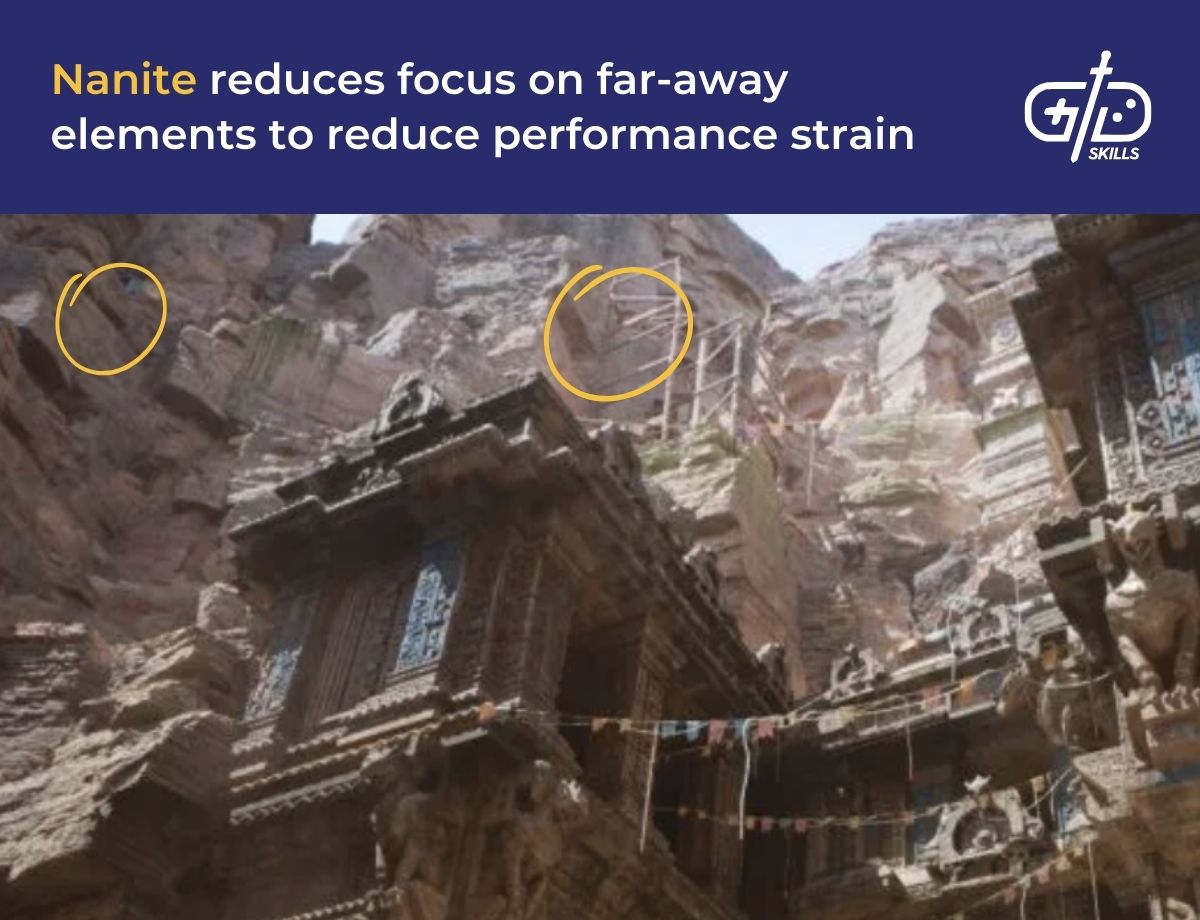

Nanite helps put detailed 3D objects into a game without compromising on performance. Objects like a skyscraper made up of millions of polygons make games run slowly, so Nanite streams only the detail that is visible to players. This keeps visuals sharp, and artists don’t need to painstakingly create low-detail versions for different platforms. Lumen is a dynamic lighting system that calculates how light moves in real time. Unreal combines this with ray tracing, which simulates how light bounces off walls or objects to match game states.

Devs and designers don’t need to write code with Unreal, as Blueprint visual scripting works as an alternative to C++. They are instead able to drag and drop nodes to build their game, like wiring up menus, character actions and game rules, and to drag in asset packs or add-ons. Sea of Thieves used Blueprint for its treasure maps and ship control.

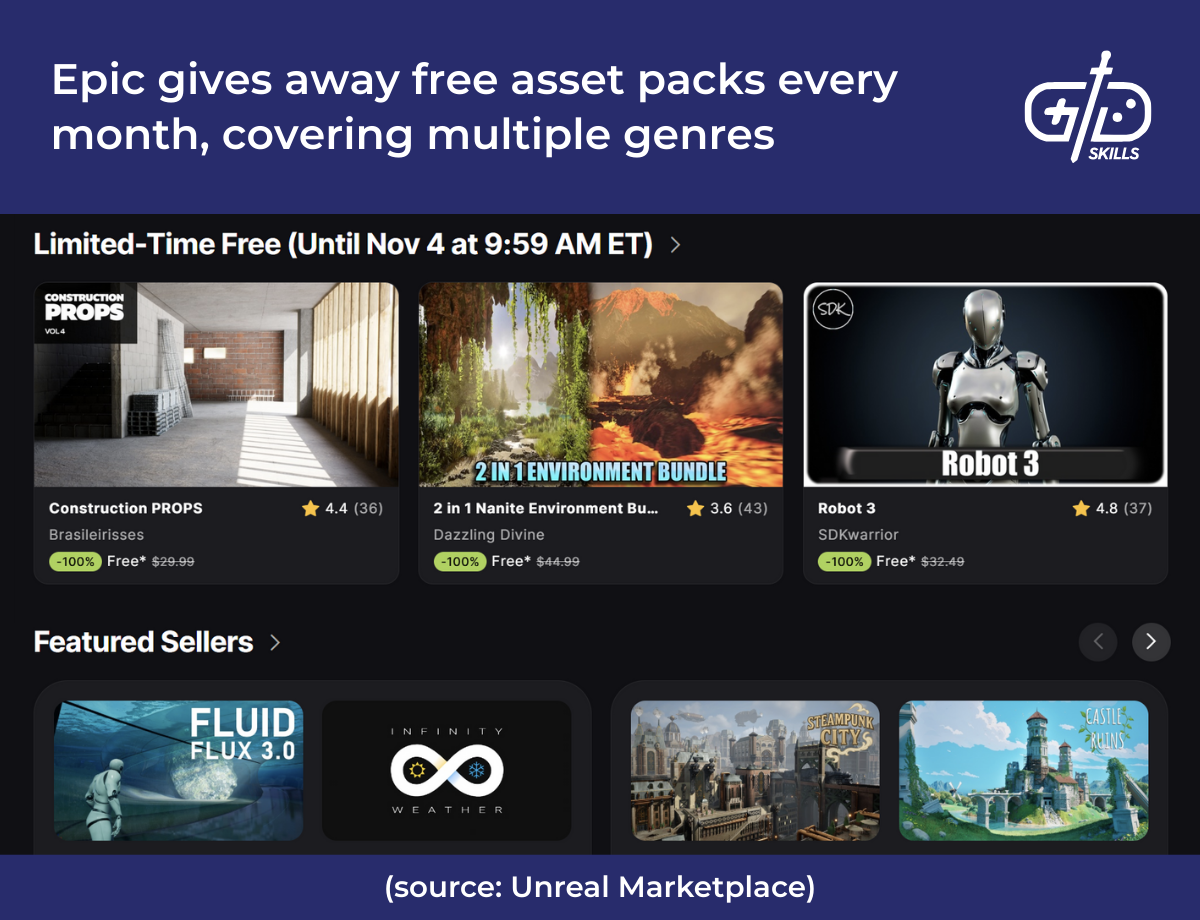

The Unreal marketplace is the ideal place to find assets and features to drag into games. It has both free and paid content, including curated collections and Epic-sponsored assets. The asset store offers a mix of 3D models, environments, and animations, as well as shaders and plugins. Unreal has an active community too, so forums and Discord servers are helpful to learn from and get feedback.

3. GameMaker

GameMaker is built for 2D games and features both drag-and-drop visual scripting and the GML scripting language, making it suitable for no-code development as well as scripting. GameMaker was developed by YoYo Games and is free for non-commercial use but requires a paid license for commercial publishing, with console export only available with the Enterprise license. The engine’s cross-platform support lets devs export to all major platforms.

The GML scripting language, short for GameMaker Language, lets devs write game logic like movement or enemy attacks. GML’s syntax is similar to JavaScript and other C-style languages, so it’s easy to learn, and it supports event-driven logic. Undertale used GML to make its mixed turn-based and real-time dodging battle sequences.
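
Event-driven logic means code runs in response to named events instead of in one long script. The sketch below shows the pattern in Python and not in GML, so every name in it (`EventBus`, `on`, `emit`, the event names) is hypothetical and does not correspond to GameMaker’s API.

```python
class EventBus:
    """Minimal event dispatcher: handlers register for named events."""

    def __init__(self):
        self._handlers = {}

    def on(self, event_name, handler):
        # Several handlers may listen to the same event.
        self._handlers.setdefault(event_name, []).append(handler)

    def emit(self, event_name, **data):
        # Events nobody listens to are ignored.
        for handler in self._handlers.get(event_name, []):
            handler(**data)


player = {"x": 0, "hp": 10}


def move_right(step):
    player["x"] += step


def take_damage(amount):
    # Health is clamped so it never drops below zero.
    player["hp"] = max(0, player["hp"] - amount)


bus = EventBus()
bus.on("key_right", move_right)
bus.on("enemy_attack", take_damage)

bus.emit("key_right", step=4)
bus.emit("enemy_attack", amount=3)
print(player)
```

Movement and combat stay in separate handlers, which is roughly how per-object events keep game logic organized.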

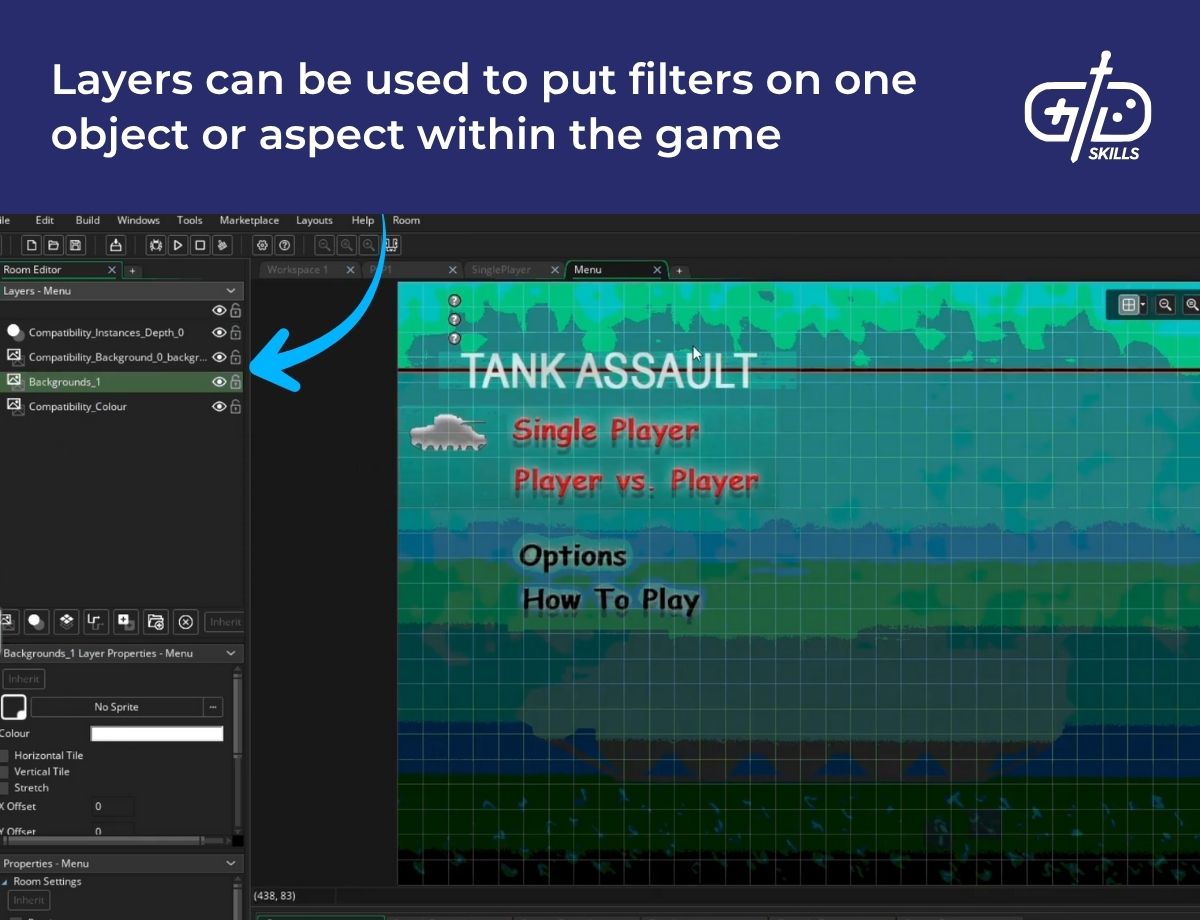

GameMaker Studio 2 is a later version of GameMaker with an improved UI and platform support expanded to include browsers, consoles and Linux. The UI is customizable with moveable panels and workspaces so devs are able to work on their own terms. GML is still in use and now has a built-in editor and debugging tool. GameMaker Studio 2 introduced features like the layer-based room editor and built-in physics effects alongside the real-time debugging tools. The layer-based room editor lets devs build their game by placing objects in layers. A platformer game like Spelunky needs different layers for terrain, traps and enemies, and seeing them arranged visually helps devs figure out the layout quickly.

The built-in physics effects mean devs are able to make characters move around without coding complex formulas. The real-time debugging tools add further value since they let devs test out their game after adding the physics and layers to see how it runs. Problems that crop up are fixed while the game is running, reducing iteration further along in development.

4. Construct 3

Construct 3 is a 2D game engine that lets devs build games directly in a web browser without the need for installation. Over 7,180 games have been published on Construct Arcade, with 154 million total plays. Games are publishable to Windows, Android, iOS and browsers, covering all the major platforms. Construct 3 has a free trial but paid plans are needed to unlock all the export options and advanced features.

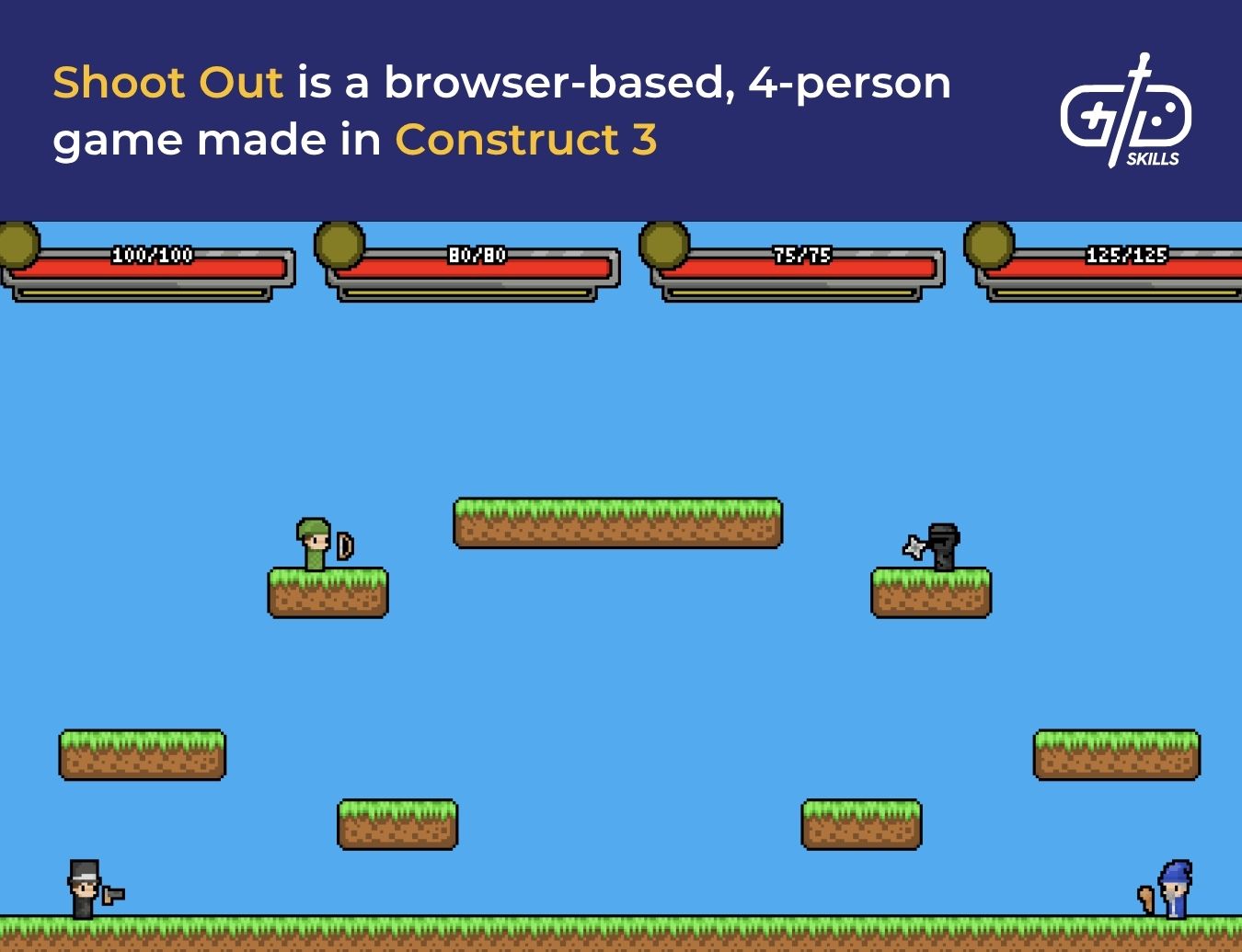

Construct 3 was built with indie and educational game devs in mind. Accessibility is prioritized via the drag-and-drop tools and IF-THEN logic, which eliminates the need for conventional code. There is TypeScript support for experienced devs to create systems like custom AI behavior or physics. Shoot Out, for example, is a 4-player game developed on Construct 3 that’s able to handle basic multiplayer systems.
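
IF-THEN logic of this kind boils down to a list of condition and action pairs that are checked on every tick. The sketch below models that idea in Python; the state keys, the rules and the `run_tick` function are made up for illustration and are not Construct 3’s event sheet format.

```python
def fell_off_screen(state):
    return state["player_y"] > 500


def lose_life_and_respawn(state):
    state["lives"] -= 1
    state["player_y"] = 0


def out_of_lives(state):
    return state["lives"] <= 0


def end_game(state):
    state["game_over"] = True


# Each event is an (IF condition, THEN action) pair, checked top to bottom.
EVENTS = [
    (fell_off_screen, lose_life_and_respawn),
    (out_of_lives, end_game),
]


def run_tick(state, events=EVENTS):
    """Evaluate every event once, as an event sheet would each frame."""
    for condition, action in events:
        if condition(state):
            action(state)


state = {"player_y": 520, "lives": 1, "game_over": False}
run_tick(state)
print(state)
```

Order matters here: the second rule sees the life count that the first rule just changed, so a single tick can both remove the last life and end the game.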

5. Godot

Godot is one of the three industry-standard game engines next to Unity and Unreal, and is free and open-source. Fast-paced action games such as Halls of Torment have been built in Godot, and still run well on older hardware. This is because of Godot’s lightweight architecture, plus built-in 2D and 3D editors and animation and debugging tools.

Godot has its own GDScript language, with a syntax inspired by Python that makes it approachable for devs used to Python. GDScript supports event-based logic and timed actions. Godot 3 also included a node-based visual scripting system that worked like a flowchart and made the engine accessible to non-coders. It was never as popular as GDScript and was removed from the core engine in Godot 4, but it is still a useful learning tool in older versions.

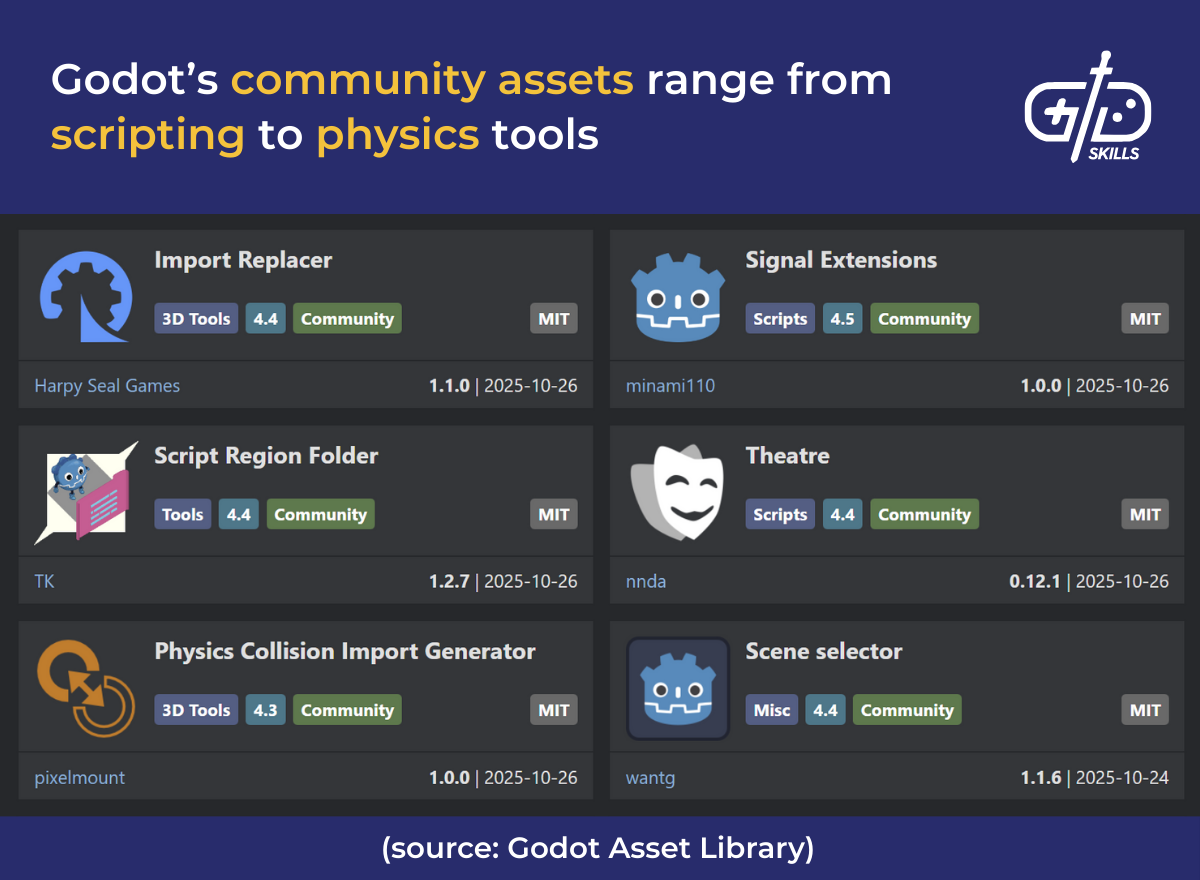

Godot’s active community ensures its asset library is filled with add-ons, tools and scripts. The assets are open-source too, so they’re customizable for specific concepts. Godot is still comparatively smaller than Unity and Unreal, without the same level of support for AAA graphics and multiplayer, despite upgrades in Godot 4. Godot 4 comes with improved dynamic lighting effects and is optimized for modern devices. Its new, advanced material shaders give textured surfaces additional depth.

6. Blender

Blender is a free 3D modeling tool with features similar to expensive tools like Autodesk Maya. Blender is open-source with an active community, making it ideal for indie devs and studios running on tight budgets. Artists are able to use Blender to create models of objects like a ship, animate how it moves, and add textures to the wooden hull.

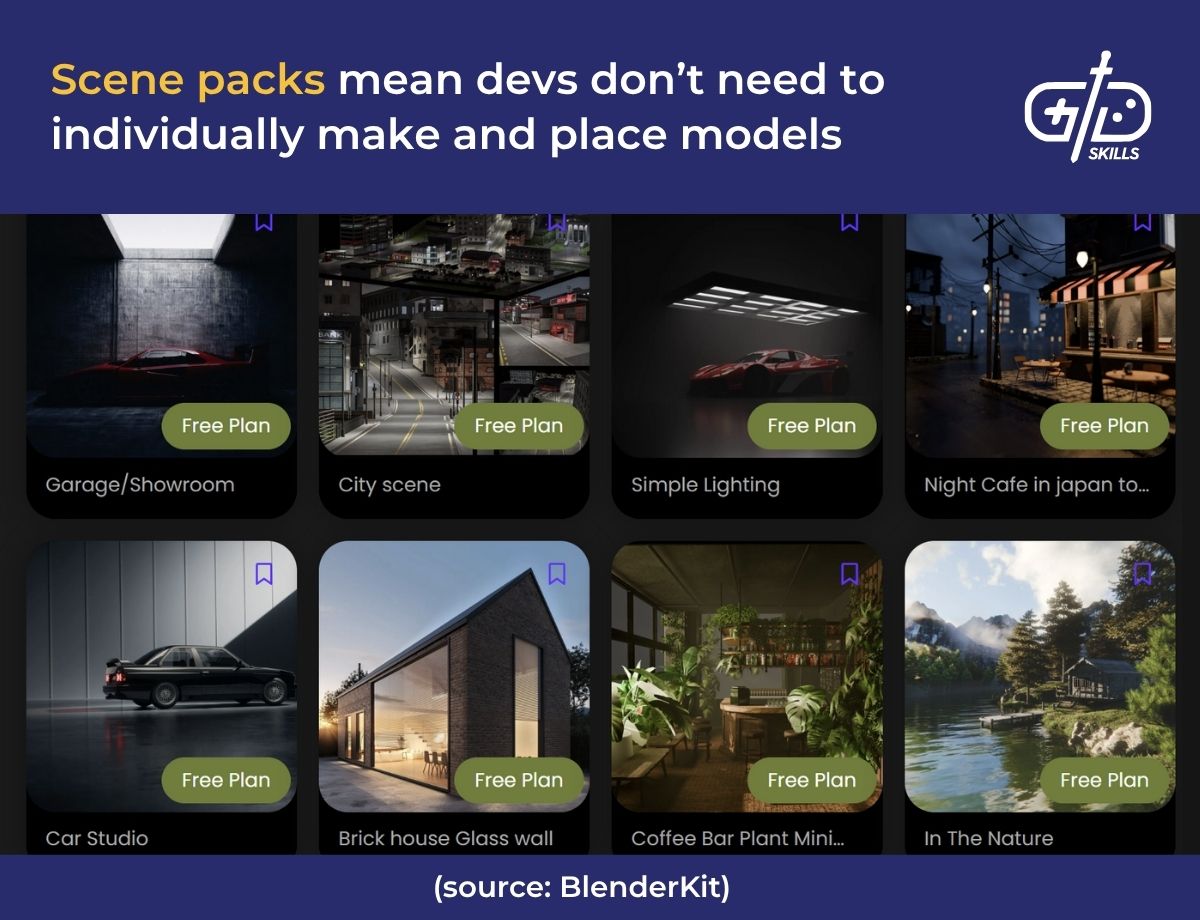

The BlenderKit asset library offers over 100,000 assets with a drag-and-drop system. The assets include models, like trees and furniture, as well as materials to apply to the models, like wood for the trees. HDRIs (lighting environments) are available too, alongside scene packs. Different brush options allow artists to add minor details to models and sculpt out concepts.

Blender has a steep learning curve due to the vast array of tools available. The name is a metaphor: Blender feels like it has about as many tools as a kitchen blender has overlapping blades. Beginner Blender users rely on 2 or 3 tools to model a simple cube, while a professional uses 12+ tools to create an entire city with dynamic lighting and procedural generation.

Beginner and intermediate artists are advised to check out Blender Guru, which is Andrew Price’s YouTube channel. He goes into how to navigate the UI and covers Blender modeling basics, with advanced topics including lighting and real-time effects. Andrew’s “Donut Tutorial” is famous for teaching modeling, texturing and lighting in a fun manner.

7. RPG Maker

RPG Maker is designed to create 2D, turn-based RPGs akin to the older Final Fantasy games, but has enough flexibility to be used for other genres. Beginners and devs are able to use it since it has both drag-and-drop tools for non-coders and plugin support for scripting languages. Games like To the Moon used RPG Maker to create a narrative with simplified movements, dialogue and cutscenes. RPG Maker’s main versions need to be purchased for export and permanent access to all features, but free trials are available.

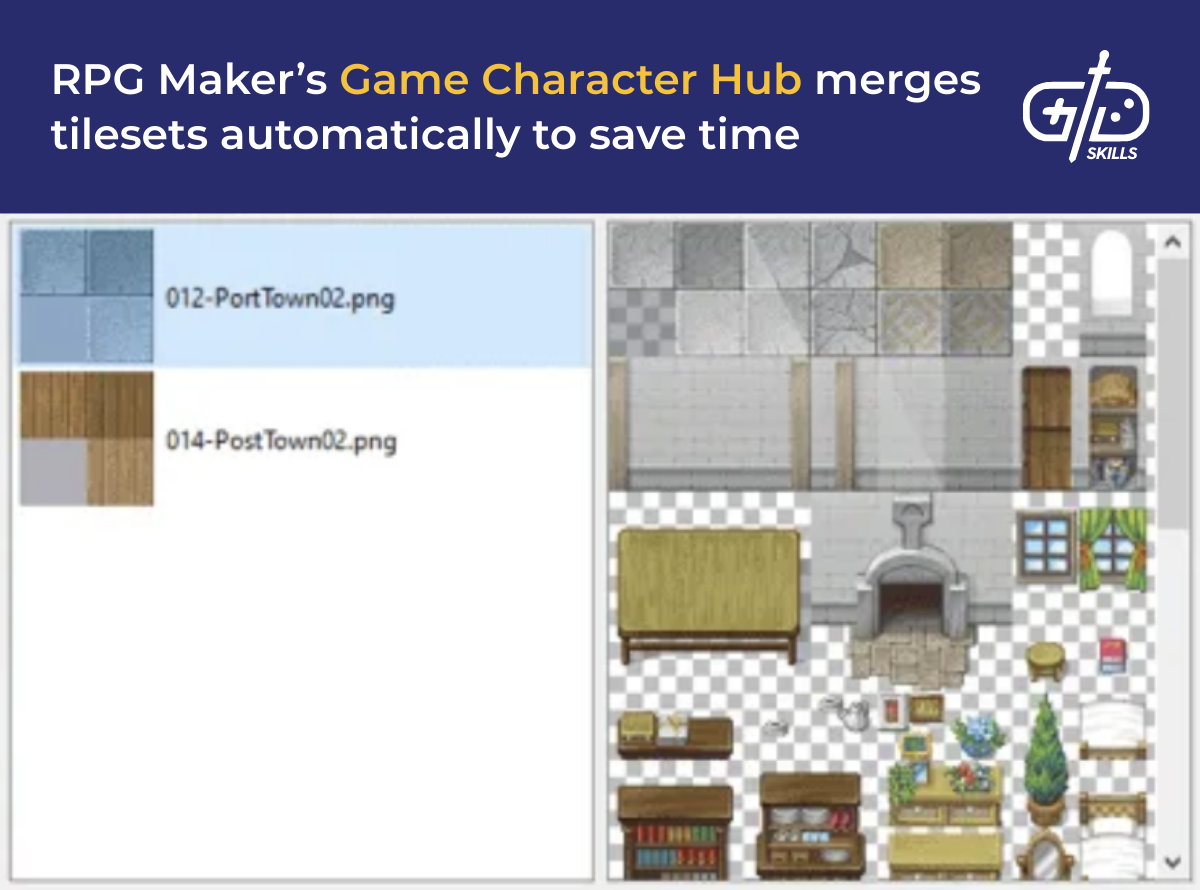

RPG Maker has a tile map editor which lets devs design levels by placing tiles, like grass and walls, on a grid. The character and enemy lists make it easy to modify the stats and appearance of characters and enemies. Devs are able to visually map out their game’s structure, then run through sections of the game and change it as needed.
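
A tile map is essentially a grid of tile IDs. The sketch below shows that data structure in Python under assumed names: the letter codes, `tile_at` and `is_walkable` are hypothetical and are not RPG Maker’s own map format.

```python
# Hypothetical tile codes: one character per grid cell.
TILE_NAMES = {"G": "grass", "W": "wall"}
WALKABLE = {"G"}

LEVEL = [
    "WWWWW",
    "WGGGW",
    "WGGGW",
    "WWWWW",
]


def tile_at(level, col, row):
    """Name of the tile at a grid position."""
    return TILE_NAMES[level[row][col]]


def is_walkable(level, col, row):
    """True only for in-bounds tiles that characters may step on."""
    in_bounds = 0 <= row < len(level) and 0 <= col < len(level[row])
    return in_bounds and level[row][col] in WALKABLE


print(tile_at(LEVEL, 1, 1), is_walkable(LEVEL, 1, 1))
print(tile_at(LEVEL, 0, 0), is_walkable(LEVEL, 0, 0))
```

Because the level is plain data, changing a room means editing the grid instead of rewriting movement code.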

The event system in RPG Maker lets devs use IF-THEN logic to trigger dialogue, battles or cutscenes in their games. Beginner designers make use of the premade assets, since these cover the full range of characters, tiles, music and effects. The premade assets are useful starting points but don’t hold out in the long run as they aren’t unique.

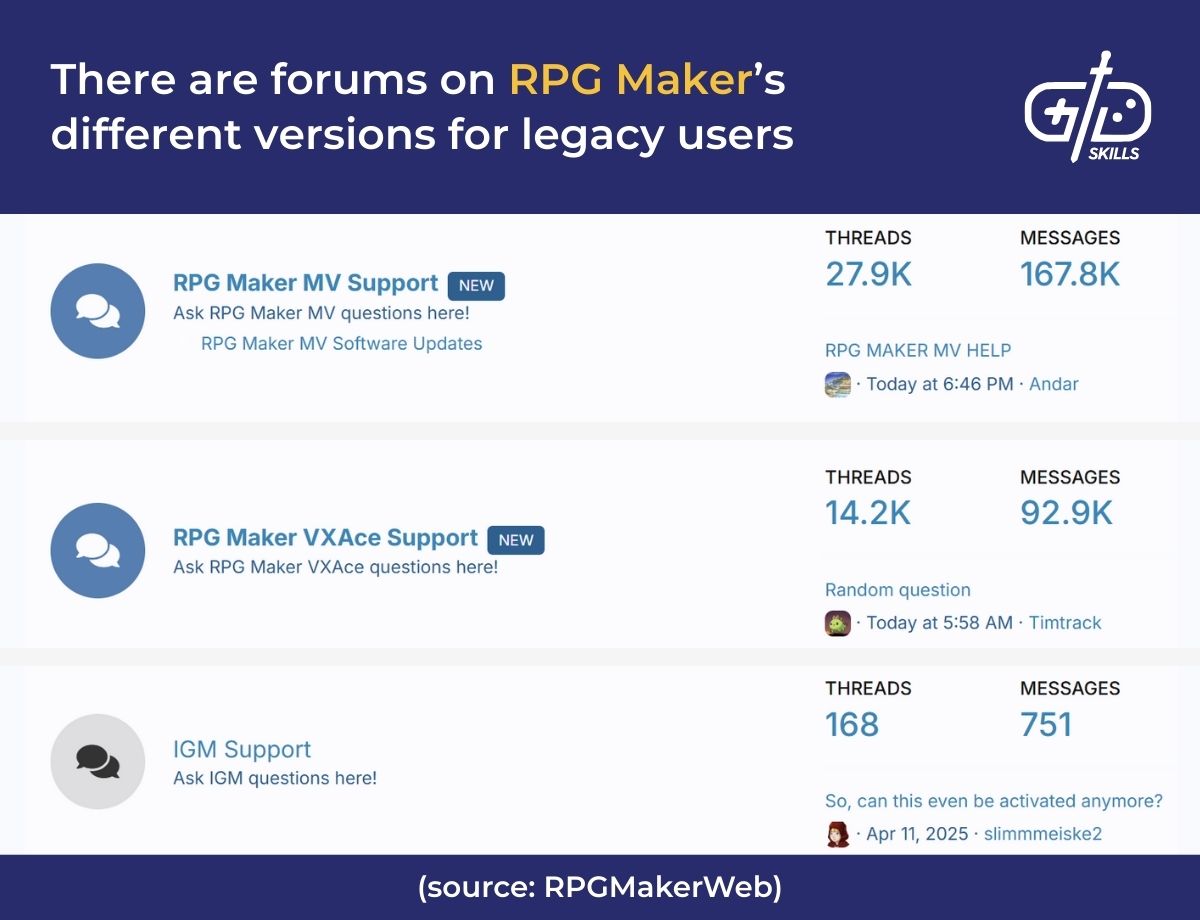

RPG Maker’s active dev community, based in the RPGMaker subreddit, is another useful resource for beginners. The overall community has been active since 1992, helping learners with no game design experience create their own RPGs. The forums have tutorials, asset packs, plugins, and many helpful people willing to give feedback on ongoing projects.

RPG Maker MZ is the latest version with improved graphics and animation (but still the same rendering engine as MV) plus plugin support. Experienced devs are able to write custom scripts as it has strong plugin support for JavaScript ES6+. Layer control returned to the tile map editor and turn-based battle systems were enhanced with a time-progress mode in MZ. RPG Maker MV, the previous version, supported the same export options as MZ, letting devs export to Windows, macOS, Android, iOS and browsers.

Cordova is a tool that helps turn the game into a mobile app, which is useful since RPG Maker MV’s mobile output is a browser-style HTML5 build that needs to be wrapped in an app to run on phones. Older versions of RPG Maker, like VX Ace and XP, supported only Ruby for scripting. XP had very minimal support compared with newer versions but was the first to support custom scripts, opening the floor for experienced devs. Games like Aveyond and Laxius Force were made in early versions of RPG Maker, demonstrating its continued improvements and extended support.

8. Stencyl

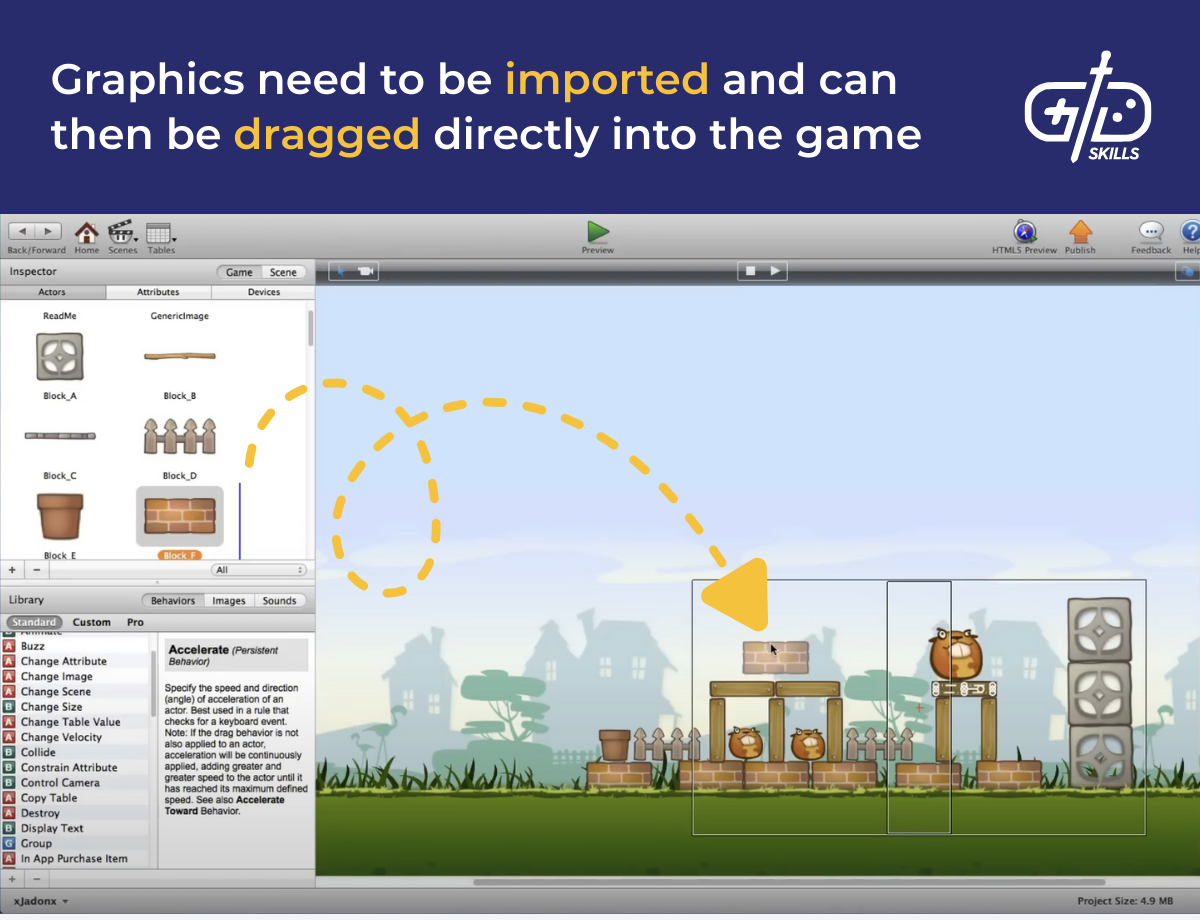

Stencyl is a beginner-friendly game engine geared toward 2D games that uses visual scripting and drag-and-drop tools, so it’s ideal for non-coders. Stencyl was built with Haxe, a programming language that lets Stencyl export games to multiple platforms. Box2D, used in games like MoonQuest, powers Stencyl’s physics engine. Stencyl is free to use but has paid tiers to publish on mobile or desktop platforms.

The dev center is for advanced users to develop custom extensions using Haxe, while Box2D is ideal for platformers, arcade games and physics puzzles. Box2D is an industry-standard physics engine, so advanced devs are able to use it for collision detection and realistic movement. A game like Angry Birds could be prototyped using Haxe and Box2D for its slingshot physics.

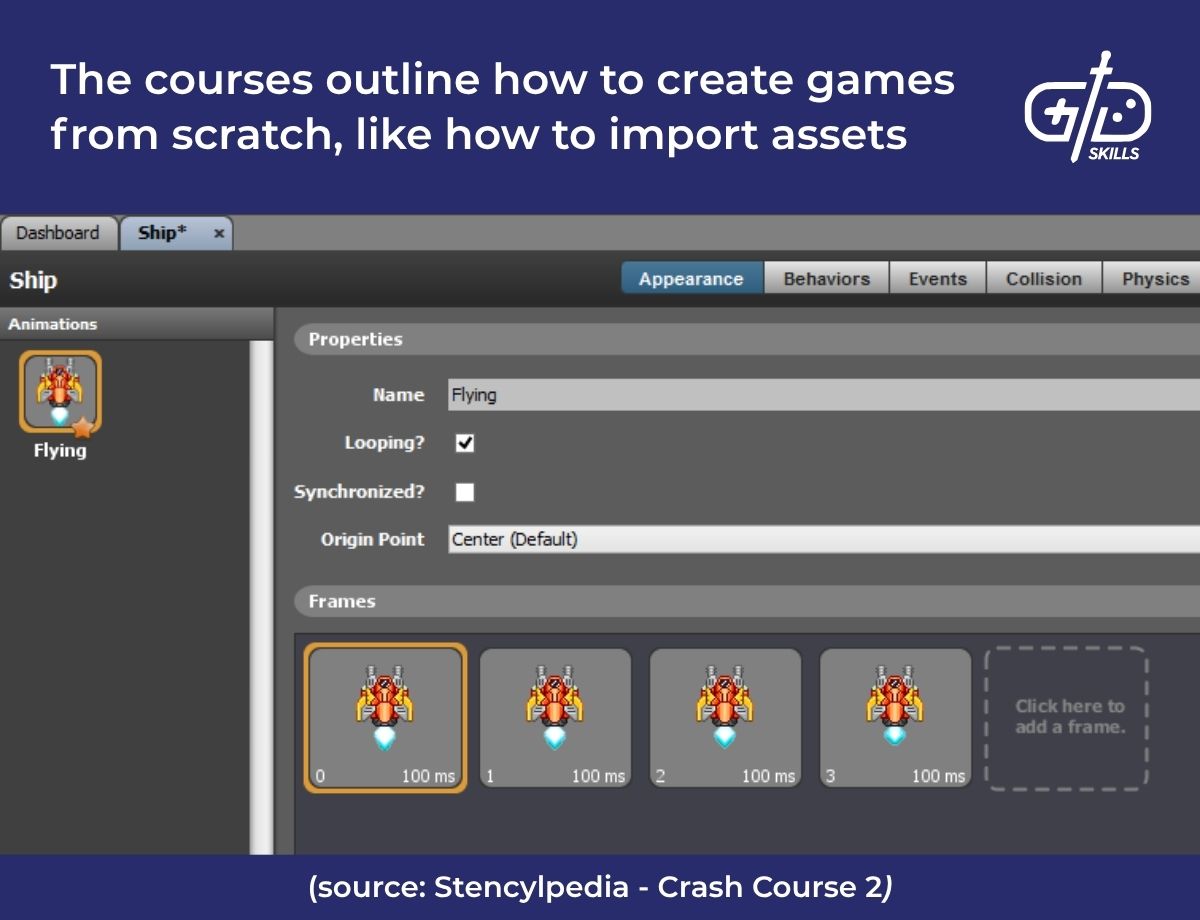

Stencylpedia Knowledge Base has full tutorials and educator kits for new users to take advantage of. The courses explain what each visual block does and give practical exercises where learners work with sample games and use the tools available to complete tasks. One tutorial lets students build a Space Invaders version of their own, with custom art and sounds.

The StencylForge asset library is a useful community resource with free assets. Logic, tilesets, sounds and characters developed and sourced by community members are available. Collaboration and reuse are actively encouraged, so learners are able to ask devs directly for advice on how to use the assets, or work with them on their own projects to get hands-on experience.

9. CryEngine 5

CryEngine 5 is a game engine created by Crytek, with tools that let devs create games with photorealistic visuals. CryEngine is free to use until a game earns over $5000 in revenue, after which a 5% royalty is required. Crytek used CryEngine to develop the original Far Cry and the Crysis franchise, both of which are known for their high-quality visuals.

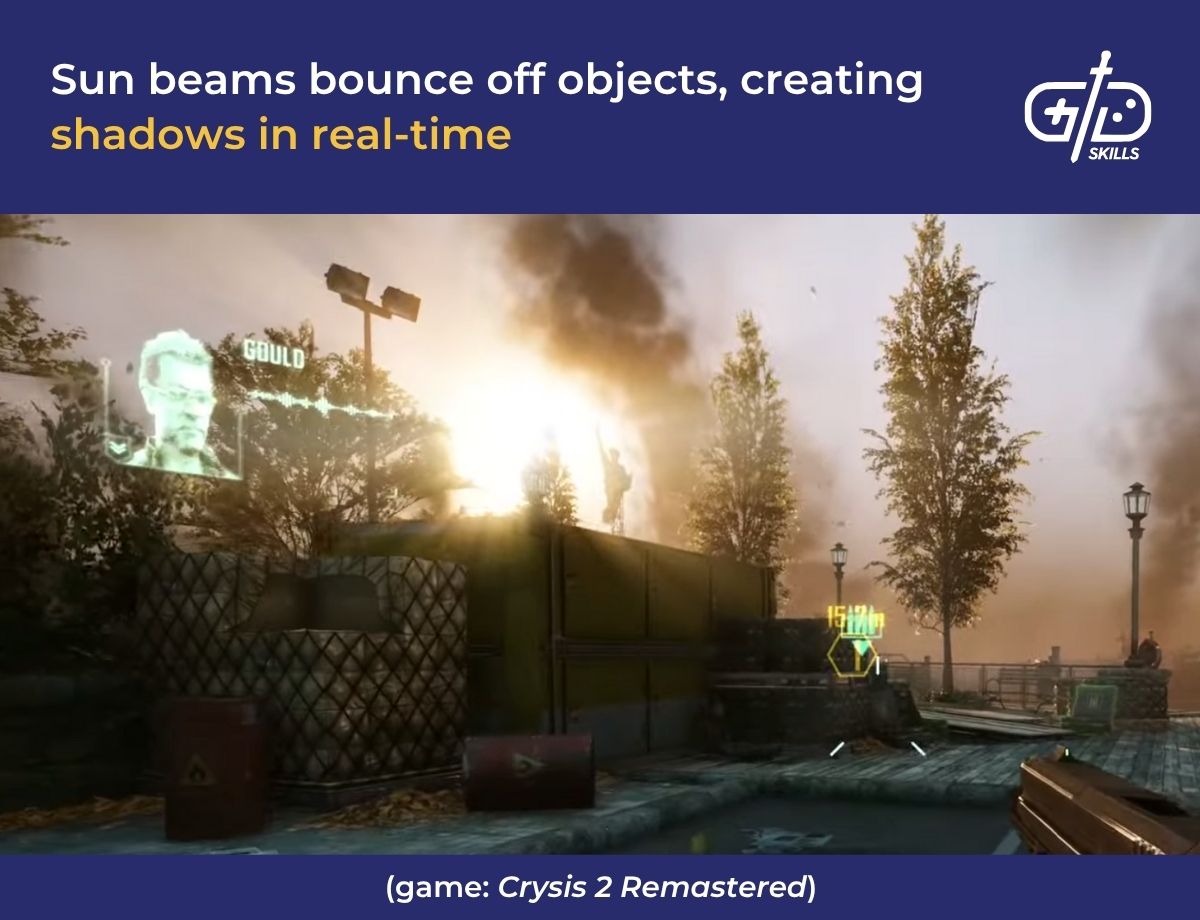

The studio makes extensive use of global illumination and high-quality rendering in its games, with sandbox editing thrown in to create an immersive and vast world. CryEngine’s graphics tools helped Crysis 2 push the limits of high-end PCs, so it’s used to test and benchmark GPU performance because of how heavy the visuals are. Crysis 2 was built on CryEngine 3 initially but continued to be developed using CryEngine 5 with enhanced visuals.

CryEngine 3 already had a toolset that included Flowgraph, a visual scripting tool for non-coders, and TrackView for camera control. The built-in sandbox editor is a real-time level editor that lets devs drag and drop assets or adjust terrain. The editor was designed so that whatever it shows is exactly what players see in the game. CryEngine 5 took these tools and improved them so they synced up with player actions, and it introduced new tools. The new tools included material and particle editors as well as global illumination and rendering systems.

CryEngine 5’s global illumination system gives games dynamic lighting and shadow. Player actions and game moments sync up with how light moves, like when a room brightens as the curtains are opened. Volumetric fog is included and reacts to light and depth accordingly, as do real-time reflections in fluids and mirror-like surfaces. The Physically Based Rendering (PBR) system makes sure materials reflect light naturally, with environment probes to help set rules for how light acts in zones. Warm light is used indoors, for example, while blue tones are more prominent outside.

The visual interface for CryEngine 3 was fixed, without customization available. CryEngine 5 introduced a customizable UI with a viewport and dockable panels. CryEngine 3 also only supported PC, PS3 and Xbox 360, whereas CryEngine 5 expanded support to PS5, Xbox Series X/S and VR headsets.

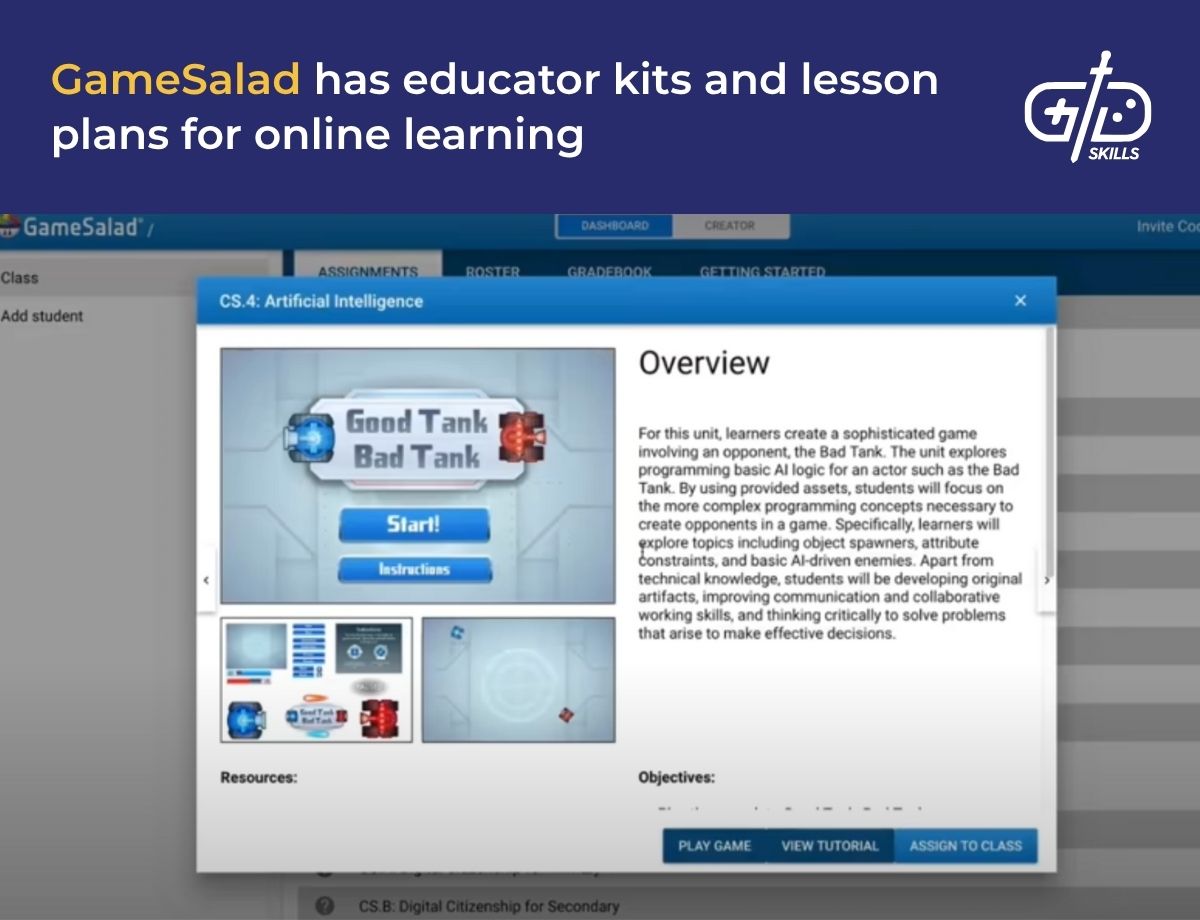

10. GameSalad

GameSalad focuses on 2D mobile and web game development, and lets devs build their games without the need for code, making it ideal for beginners and learners. GameSalad is accessible via major browsers like Chrome and Safari, and supports cross-platform publishing. The basic plan is cheaper but limits export options, while the more expensive pro plan comes with full access.

GameSalad is easy to use because its visual interface has tools like the scene editor, behavior designer and asset manager. Devs are able to prototype their game and drag in sounds or effects at will without needing to open multiple tabs. The renderer comes equipped with layers and blend modes while there are performance tools to make sure games are optimized for mobile.

The performance tools include behavior profiling and asset compression. Behavior profiling keeps track of what slows down games while asset compression assists by reducing file sizes. There’s built-in support for touch controls and optimization for mobile systems. 2D game development is supported too, with ready-made animations and graphics to pull, like anime slashes or hit sparks. The physics engine has basic physics like gravity and bounce effects, making it ideal for platformers and arcade games.
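
Gravity and bounce effects come down to a small update that runs every frame. The sketch below is a generic illustration of such a step, not GameSalad’s physics engine; the `step` function, its default values and the simple integration scheme are assumptions.

```python
def step(y, vy, dt, gravity=-9.8, bounce=0.5, floor_y=0.0):
    """Advance one object by one time step.

    y is height, vy is vertical speed, bounce is the share of speed
    kept after hitting the floor. All defaults are illustrative.
    """
    vy += gravity * dt          # gravity accelerates the object downward
    y += vy * dt                # move using the updated speed
    if y < floor_y:             # hit the floor: clamp and reverse direction
        y = floor_y
        vy = -vy * bounce
    return y, vy


if __name__ == "__main__":
    y, vy = 2.0, 0.0
    for frame in range(60):
        y, vy = step(y, vy, dt=1 / 30)
        if frame % 10 == 0:
            print(f"frame {frame:2d}: height {y:.2f}, speed {vy:.2f}")
```

A `bounce` value below 1 makes each rebound lower than the last, which gives platformer and arcade objects their familiar settle.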

GameSalad has learning resources such as Learn@Home, Arcade and educator kits. Learn@Home is GameSalad’s tutorial hub, covering both beginner and advanced topics. Arcade is a community showcase where devs and learners are able to play and share each other’s GameSalad projects, with remixing options. The educator kits include lesson plans for teachers to use.

11. AppGameKit Mobile

AppGameKit Mobile is a free IDE designed by The Game Creators to develop games on Android and iOS. The IDE is ideal for fast development since it lets users write, compile and run their games on their mobile device, and is useful for beginners. Games are exportable to all major platforms, including Linux and Raspberry Pi.

The Game Creators are known for developing tools like GameGuru and Dark Basic, which prioritized accessibility by catering to both learners and advanced devs. The publisher continues this approach by offering educational licenses, with regular updates supported by an active community. GitHub has additional community forums and tutorials for both educators and learners.

AppGameKit Mobile’s ease of use comes from its drag-and-drop asset management options and the sandbox mode for experimentation. Built for 2D, the sprite engine lets devs render quickly, with Box2D physics for gravity and collisions. Graphics are high-quality for a 2D engine too, since it uses both Vulkan and OpenGL rendering.

AppGameKit is free on both Android and iOS but has paid editions for desktop use. The free mobile version lets users code using its standard scripting language and supports C++ for advanced users. There are built-in demos and cloud saving to sync with the desktop version, but there are no desktop export options and only limited access to features.

The desktop versions of AppGameKit have varying prices depending on whether devs purchase only the core engine or go for the IDE as well. Add-ons are available for both the mobile and desktop versions but are sold separately, like the extra fee for iOS export. VR/AR support is still in development but is available via extensions.

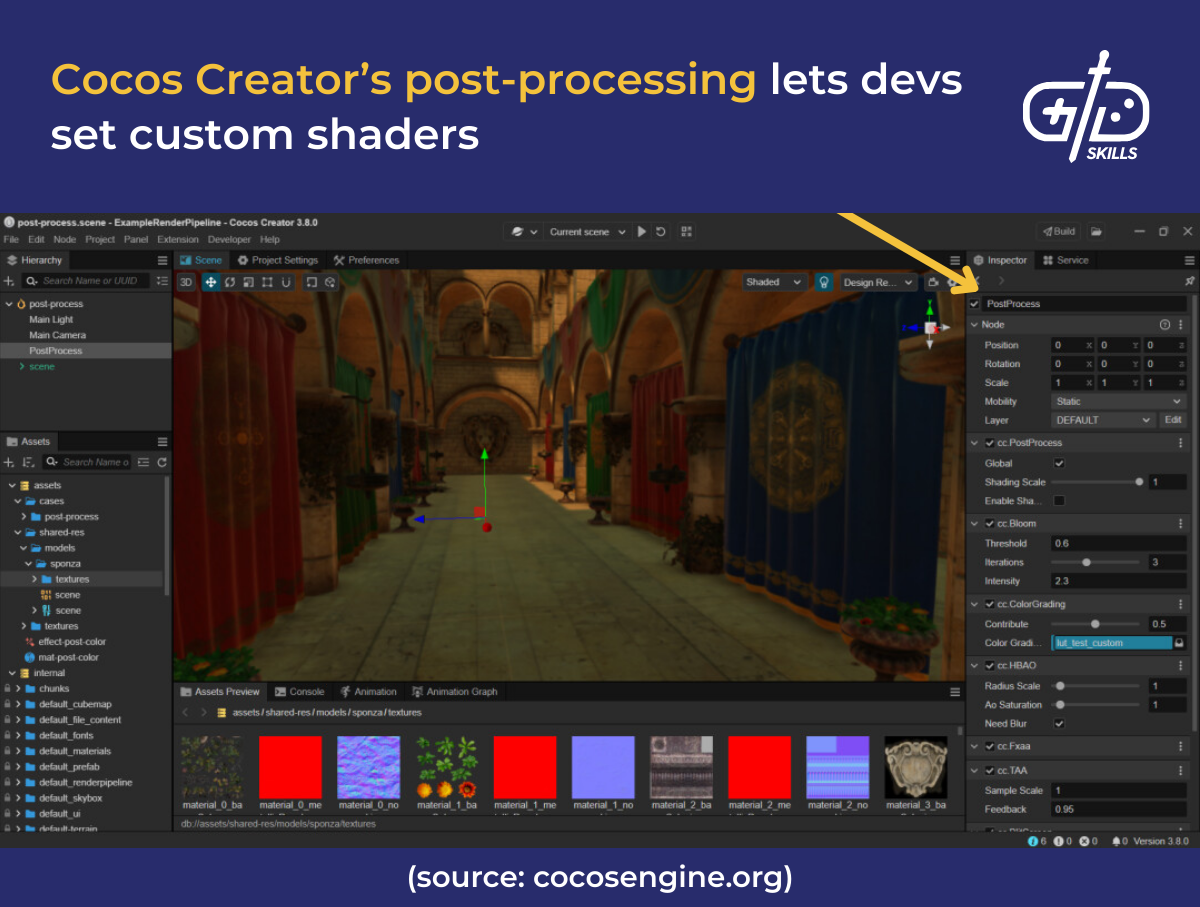

12. Cocos Creator

Cocos Creator is an open-source engine with a complete IDE, drag-and-drop tools, visual scripting and real-time previews. Cocos Creator builds on several earlier engines in the Cocos family. The latest release, version 3.8, introduced additional features like extended support for TypeScript and JavaScript (ES6+) for advanced devs.

Its predecessor, Cocos2d, was a 2D code-based engine with multiple variants for specific platforms and languages. The engine was originally Python-based and geared for 2D games, with variants adding support for C++, JavaScript, Lua and Objective-C. An external IDE, like Xcode or Visual Studio, was required. Cocos2d had five variants, which are detailed below.

- Cocos2d-x: C++-based with cross-platform support for mobile and desktop; used inside external IDEs like Visual Studio for development

- Cocos2d-JS: Used JavaScript for its language, with the same support as Cocos2d-x, and was used inside external IDEs

- Cocos2d-Lua engine: Used Lua scripting, which made it lightweight, but was built only for mobile platforms like iOS and Android

- Cocos2d-ObjC iOS/Cocos2d-ObjC Apple: Used Objective-C and was built for iOS, but is otherwise similar to the Cocos2d-Lua engine

- Axmol Engine: Used C++ and is a fork of Cocos2d-x, with cross-platform support for mobile and desktop; community-made and still undergoing active development

Cocos Creator has full support for 3D, but still supports 2D development. It comes with a built-in asset manager and debugging tools, with improved support for animation and visuals. Cocos2d users had to manually handle their assets and debug their games. Platform support has been extended to desktop, in addition to the existing mobile and web options. There’s limited support for VR at the moment.

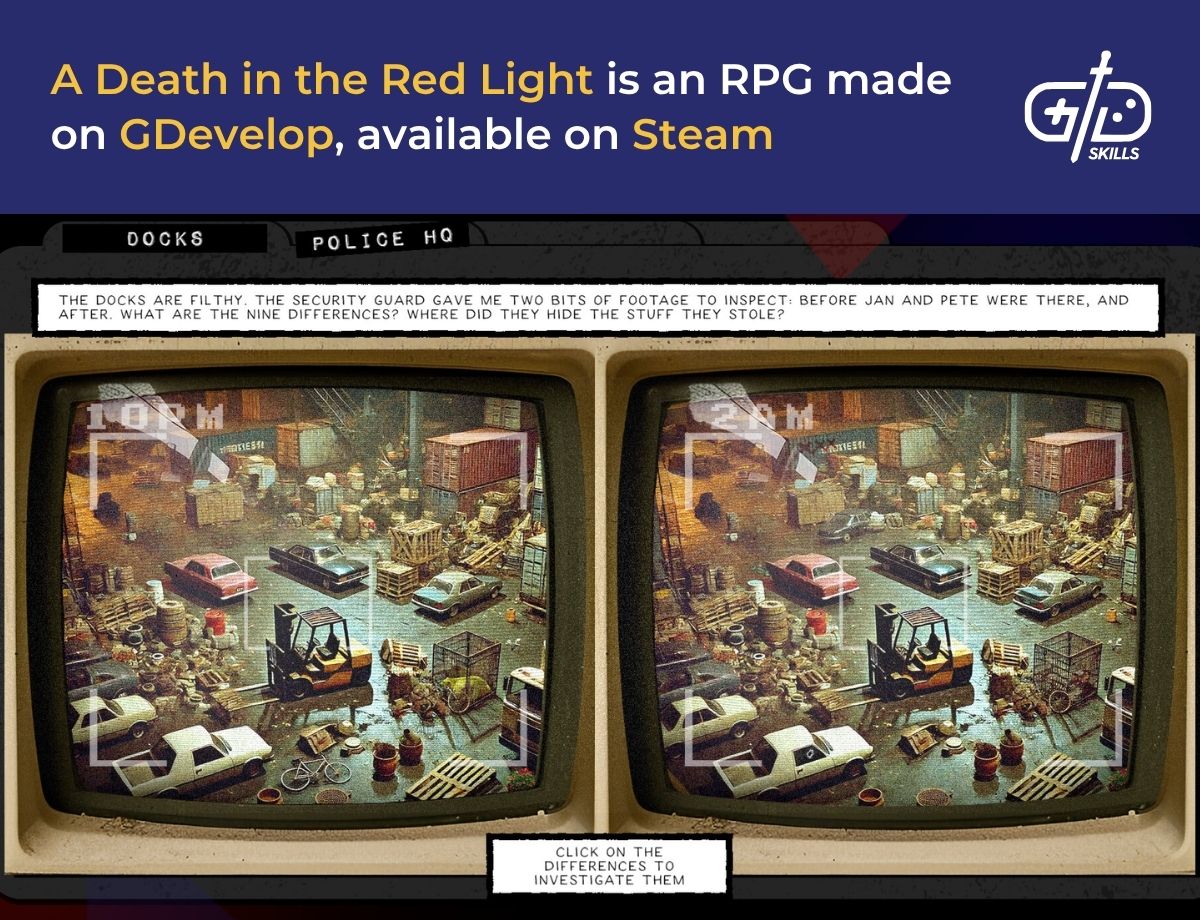

13. GDevelop

GDevelop is a 2D open-source engine with a drag-and-drop UI, making it friendly for both non-coders and beginners. The engine is licensed under MIT with active development on GitHub, so there’s strong community support on forums and Discord. There’s cross-platform support as well, with export options extending to Linux and browsers.

GDevelop uses event-based systems with IF-THEN logic, and devs manage events through the system logic management tool. The events are organized into groups, or functions, and are reusable. Event-based systems aid less experienced devs in organizing their thoughts, making it easy to check and explain the rules of their game. The visual programming system comes with a scene editor and object manager, helping devs design a level while organizing its sounds and events accordingly.

The renderer is WebGL-based, supporting layers, blend modes and camera effects, while the animation editor is frame-based. Frame-based editors allow devs to preview scenes and add transitions, plus scene triggers, for smooth gameplay. Box2D is used for physics simulation, so gravity and collision effects are available, too. Local multiplayer is possible, but online multiplayer needs external plugins or custom scripting, which isn’t ideal for beginners or large projects.

14. Defold

Defold is a free, source-available engine that’s currently maintained by the Defold Foundation, with backing from King, the studio behind Candy Crush and the engine’s previous owner. There’s cross-platform support for all major platforms, including Linux and consoles like Nintendo Switch and Xbox. Defold is built for speed, making use of the Lua scripting language. Lua is lightweight and has a small memory footprint, so it’s ideal for web and mobile game development. Its simple IF-THEN syntax makes it easy for beginners to understand.

The Defold Editor interface combines visual editing, code writing and asset management into one tool. Scene editors and code editors are thrown in for on-the-go changes, with live preview letting devs see changes immediately. The drag-and-drop feature available for all the tools makes it easy for beginners to insert objects without coding them in. There’s direct access to community plugins, too.

The Defold website has asset packs and extensions, alongside tutorials and showcase games for learners to review. Defold’s documentation is recommended for its in-depth tutorials on Lua, game logic and UI. There are guides for cross-platform publishing and optimizing performance in the engine.

Defold’s community is active on both Reddit and Discord, with active development being tracked on GitHub. The r/defold community has forums for devs to share their ideas and games to receive feedback, and to ask for help with any bugs or logic issues. Defold’s Discord server is similar, but offers real-time chats with devs, educators and Foundation members.

15. Twine

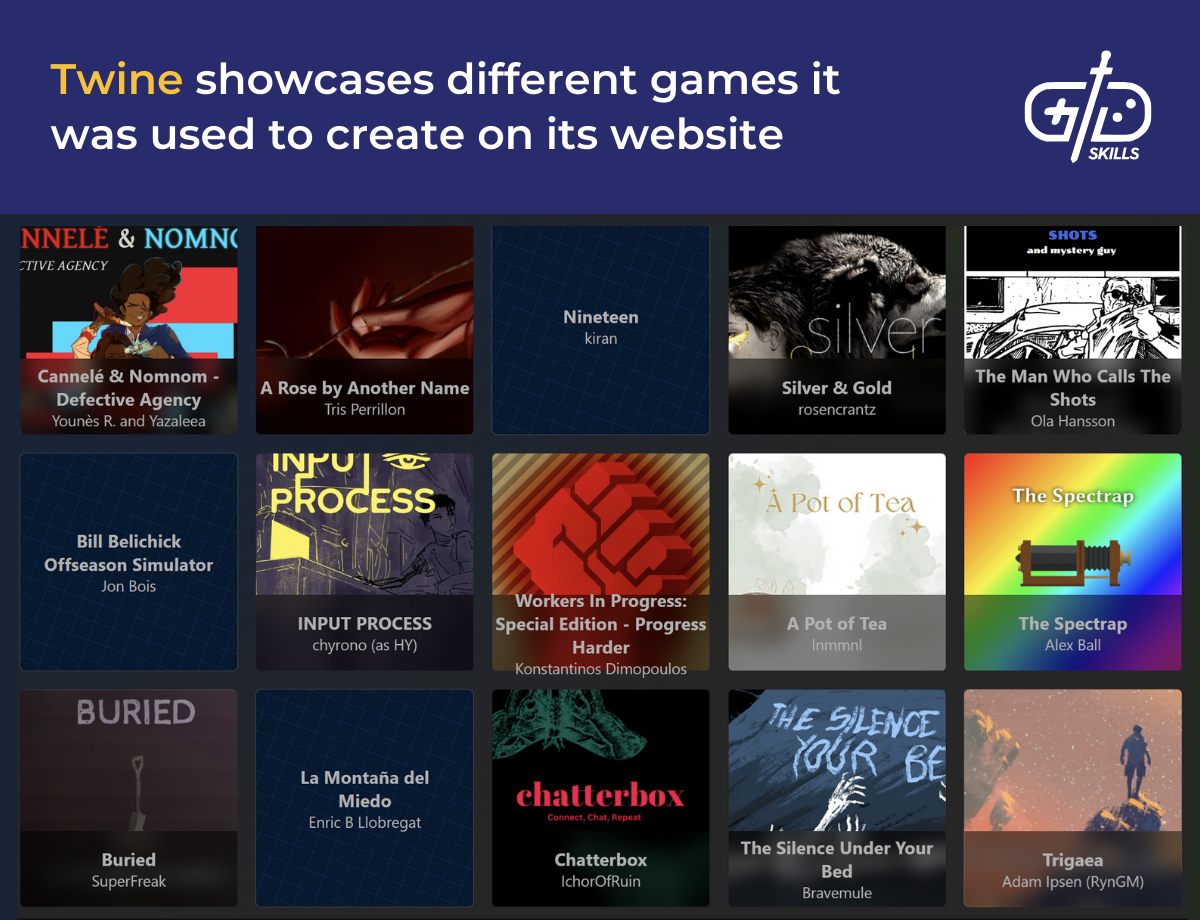

Twine is a free, open-source engine that lets users create interactive stories, making it ideal for writers, educators and indie devs. Twine has a visual editor where users are able to drag and drop story passages and connect them to one another. CSS and JavaScript support help users stylize the fonts and add timers or additional game elements.
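
Passages that link to one another form a graph, which is the structure behind any branching story. The sketch below models it in Python with made-up passage names; it is not Twine’s file format, and `reachable` and `endings` are hypothetical helpers.

```python
# Each passage has text and the names of the passages it links to.
STORY = {
    "Start": {"text": "You wake up in a cabin.", "links": ["Door", "Window"]},
    "Door": {"text": "The door creaks open.", "links": ["Forest"]},
    "Window": {"text": "You climb outside.", "links": ["Forest"]},
    "Forest": {"text": "The trees close in. The end.", "links": []},
    "Attic": {"text": "Nothing links here.", "links": []},
}


def reachable(story, start="Start"):
    """Collect every passage a reader is able to reach from the start."""
    seen, stack = set(), [start]
    while stack:
        name = stack.pop()
        if name in seen or name not in story:
            continue  # skip repeats and links to missing passages
        seen.add(name)
        stack.extend(story[name]["links"])
    return seen


def endings(story):
    """Passages with no outgoing links end the story."""
    return sorted(name for name, p in story.items() if not p["links"])


print("orphaned:", sorted(set(STORY) - reachable(STORY)))
print("endings:", endings(STORY))
```

A check like this is handy for spotting orphaned passages that no choice leads to, such as `Attic` in the sample data.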

Twine runs on Windows, macOS and Linux, and Twine games are exportable to HTML, making them playable on any browser. Twine 2 also offers a browser-based version of the editor, and every story format, including Chapbook, publishes to an HTML file. Twine doesn’t require any coding, but supports CSS and JavaScript for advanced devs to add features.

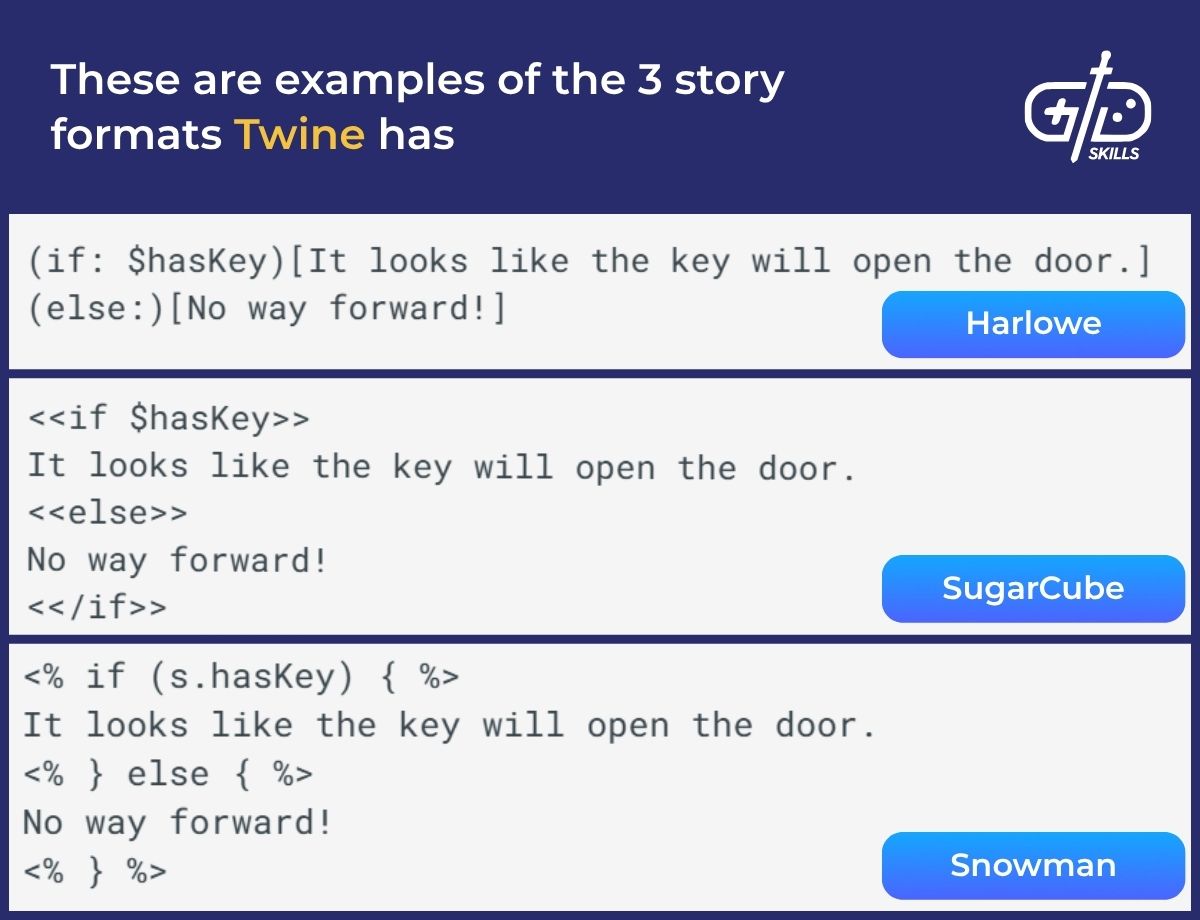

Harlowe, SugarCube, and Snowman are story formats in Twine that change how the game looks and behaves. Harlowe is the default format, ideal for basic stories with emotional arcs. SugarCube is more advanced and is ideal for branching RPGs with elements like inventories. Snowman is the minimalist format, designed for devs who want full control with JavaScript to insert custom UI and experiment freely.

Twine is, to summarize, ideal for creating branching stories driven by dialogue and adding emotional depth. The story formats ensure Twine users are able to create a range of experiences with varying narrative strengths, including minimalist, comedic games like You are Jeff Bezos. Twine isn’t recommended for games with extensive graphics or physics-based gameplay, but adding stylized audio and animations is possible using external tools.

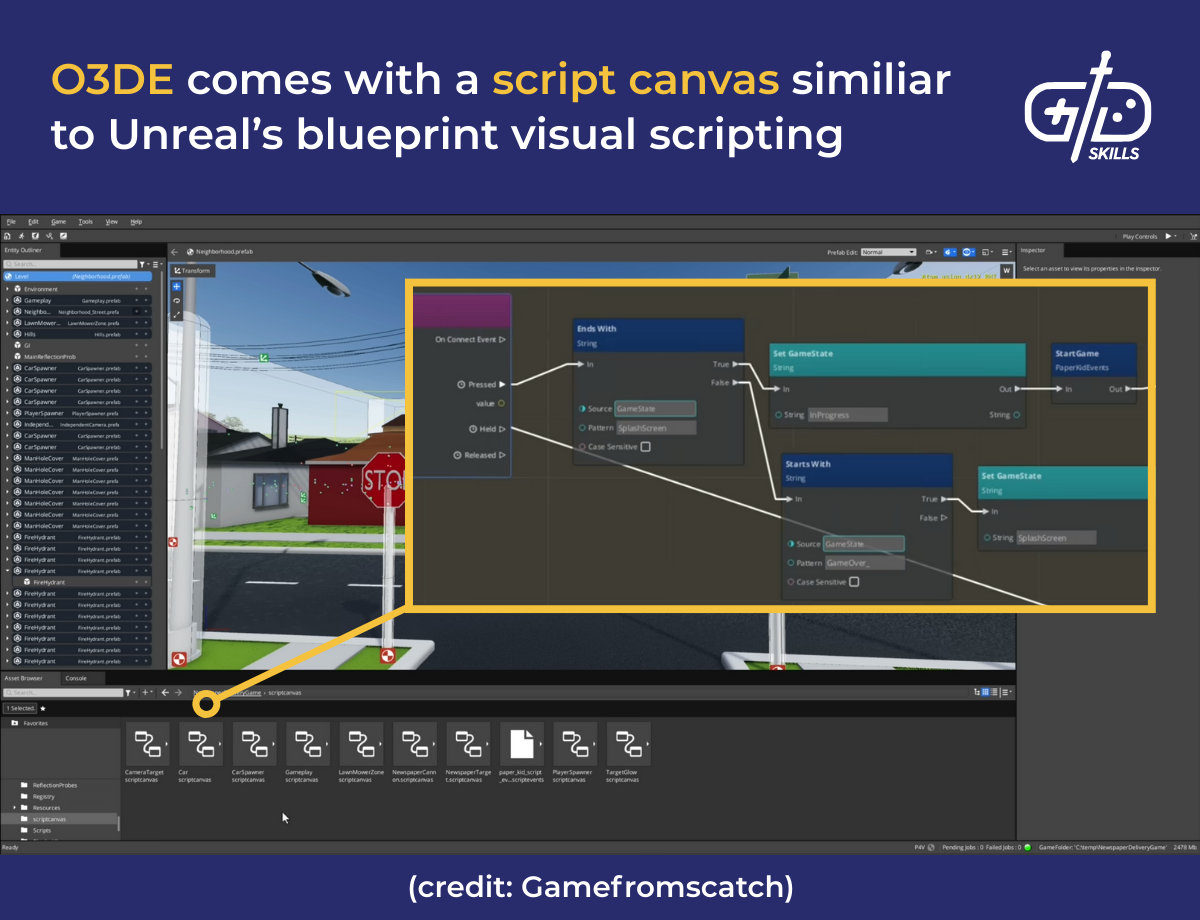

16. Open 3D Engine

Open 3D Engine (O3DE) is a free, open-source engine developed by the Open 3D Foundation and is the successor to Amazon Lumberyard. Lumberyard was an engine based on CryEngine, so features like real-time rendering have carried forward to O3DE, making it ideal for demanding 3D games and simulations.

O3DE also retains Lumberyard’s built-in support for Amazon Web Services (AWS) and cloud-based storage and multiplayer functionality. Twitch ChatPlay is included, with interactive features like polls and commands that let viewers vote for things like enemy spawns. O3DE’s Twitch Gem feature gives API access, but needs to be manually turned on. Lumberyard’s rendering and physics systems were built upon in O3DE, with enhancements added to keep up with new tools and software.

O3DE’s rendering engine is powered by Atom Renderer, which handles dynamic lighting and shadow. Visuals become cinematic as a result, with devs being able to add glow effects or fog, like a fiery sword in a dusty battlefield, bringing them on par with those made in CryEngine and Unreal. The physics system is based on Lumberyard, with improved realism for gravity and collision effects. O3DE uses a modular architecture with over 30 modules for rendering, physics, animation and other game elements. The modular design lets devs switch or personalize systems using C++, Lua or JSON, as there isn’t a drag-and-drop interface like Unity or Unreal. Strong coding skills in those languages are needed, so O3DE isn’t ideal for beginner devs.

17. Cerberus X

Cerberus X is a free, open-source programming language derived from Monkey X, using a syntax similar to BASIC. Cerberus X runs on all major platforms, including Linux and browsers. There are few well-known titles, but the community shares its projects on GitHub and itch.io. The community is small compared with Unity’s and Godot’s, so there are limited asset libraries and templates.

Cerberus isn’t an IDE, and doesn’t have one built in, so devs need to use external editors like Visual Studio or Mollusk to write and manage their code. Cerberus has core features like built-in 2D rendering support as well as audio playback and input handling, including touch-sensitive controls.

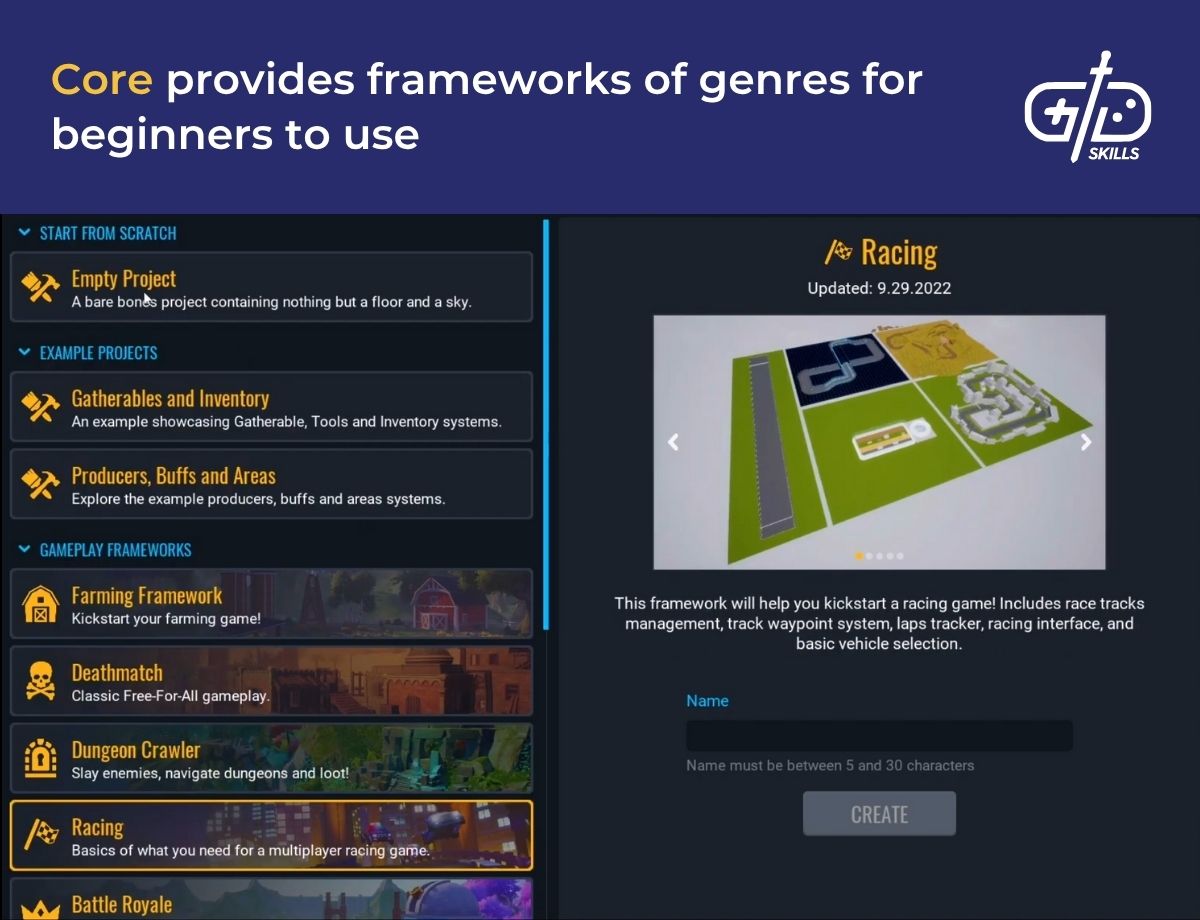

18. Core Game Engine

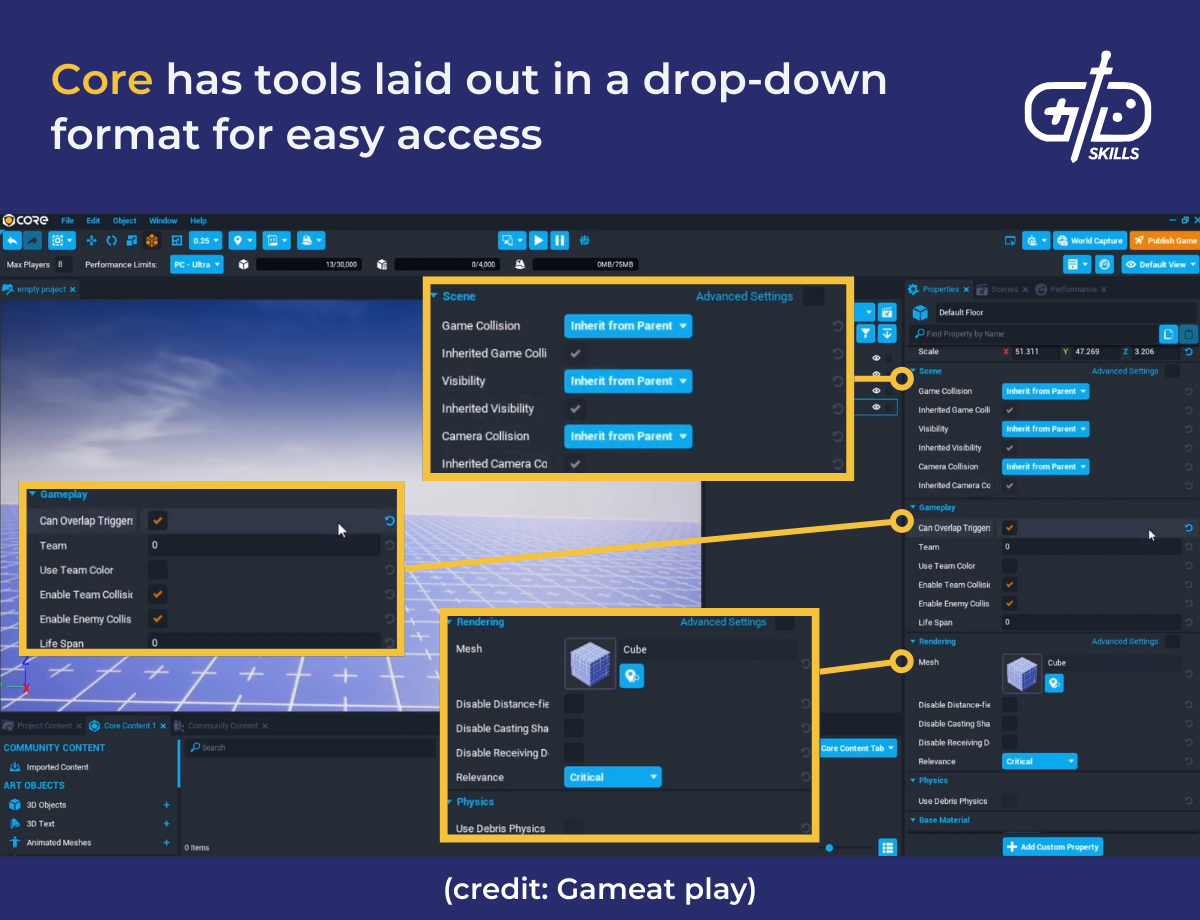

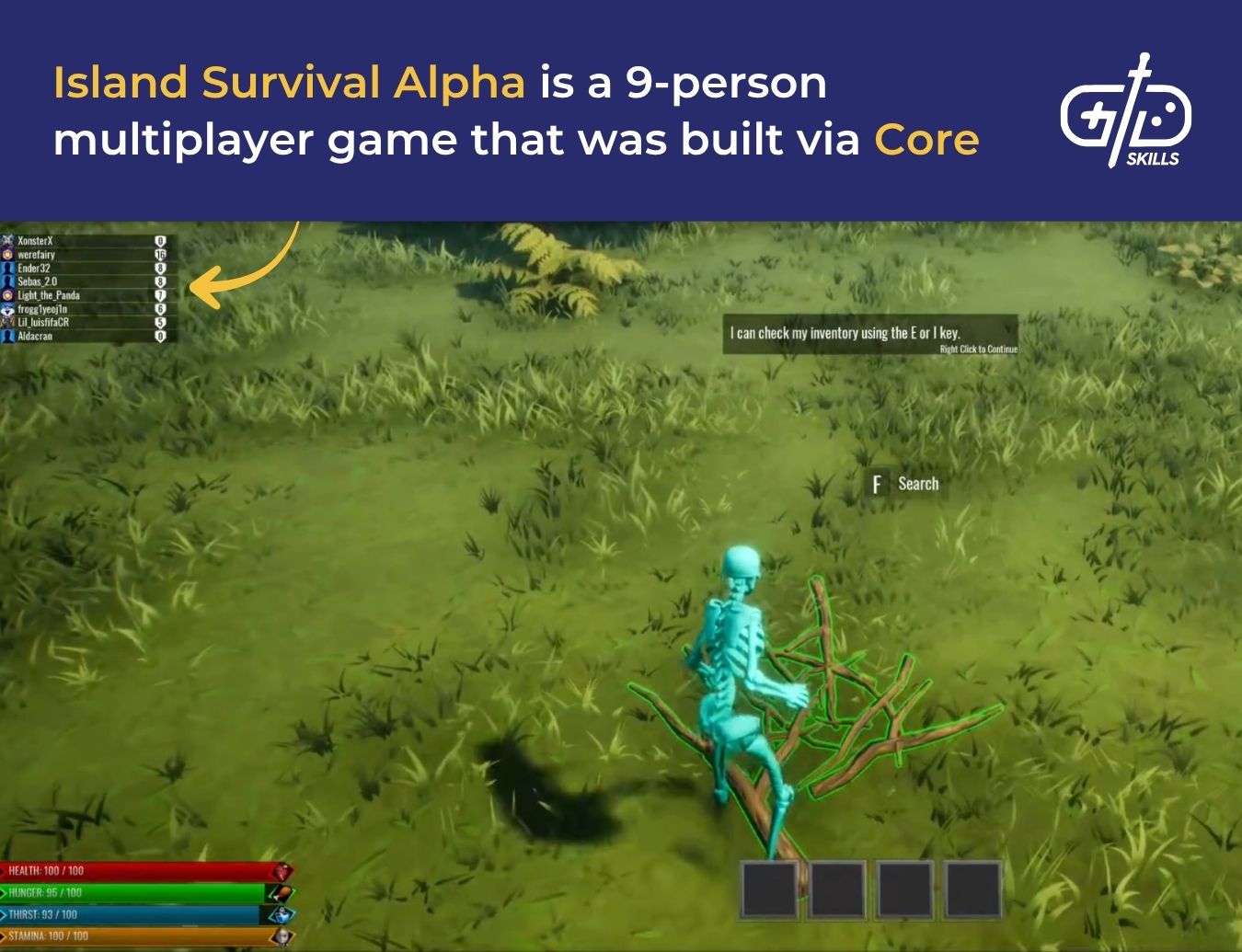

Core Game Engine is a beginner-friendly, free platform for devs to build and publish 3D multiplayer games. The framework was created by Manticore Games and is based on a modified version of Unreal Engine 4, with Epic Games among its investors. Games are published on the Core platform itself under its creator agreement, with monetization options. Core offers modular tools such as templates, logic blocks and assets, so devs are able to start with a racing game base, for example, and go from there.

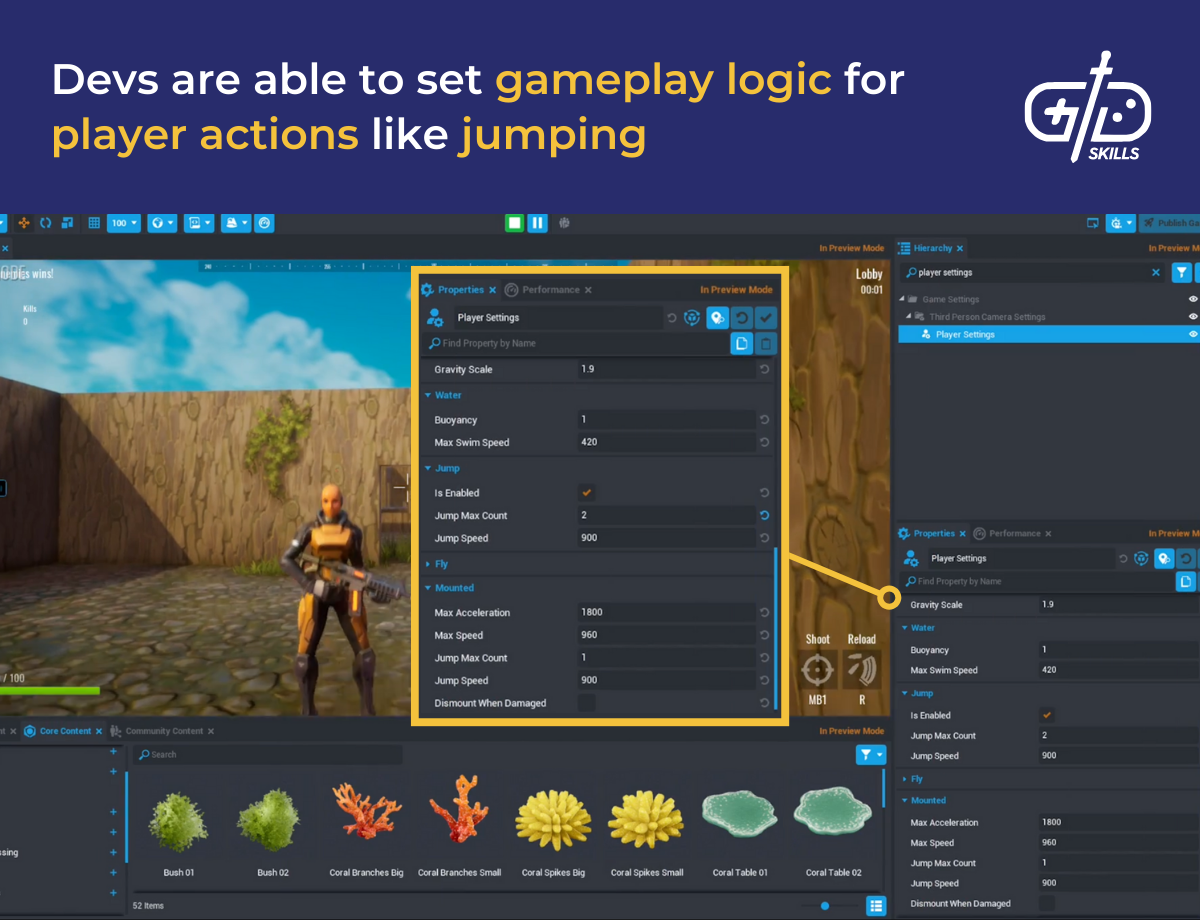

Scripting, asset management, networking and publishing have been merged into a single environment, so there’s no need to switch tools during development. The scripting language is Lua, which is lightweight and uses IF-THEN logic, making it easy to learn. Core’s drag-and-drop system for visual scripting opens the floor to both beginner devs and hobbyists.

Core’s Entity Component System (ECS) lets even beginner devs take a game object, like a treasure chest, and add behaviors to it, like open. The logic then comes into play to update the components so that the treasure chest only opens when the player is nearby. Certain components are reusable, like health, which applies to both enemies and players. Core’s simplified built-in tools help beginners develop and publish concepts.
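The treasure chest example can be sketched in a few lines. This is a minimal illustration of the component idea only, written in Python rather than Core’s Lua, and every name in it (`Entity`, `Openable`, `Health`, `proximity_open_system`) is hypothetical rather than part of Core’s API.

```python
from dataclasses import dataclass
import math


@dataclass
class Position:
    x: float
    y: float


@dataclass
class Openable:
    radius: float          # how close the player must be for the object to open
    is_open: bool = False


@dataclass
class Health:
    points: int            # reusable: attached to both players and enemies


class Entity:
    """A game object that is just a name plus a bag of components."""

    def __init__(self, name, *components):
        self.name = name
        self.components = {type(c): c for c in components}

    def get(self, kind):
        return self.components.get(kind)


def proximity_open_system(entities, player):
    """Open every Openable entity that is within range of the player."""
    player_pos = player.get(Position)
    for entity in entities:
        openable = entity.get(Openable)
        pos = entity.get(Position)
        if openable is None or pos is None:
            continue  # this entity has no 'open' behavior
        distance = math.hypot(pos.x - player_pos.x, pos.y - player_pos.y)
        openable.is_open = distance <= openable.radius


player = Entity("player", Position(0, 0), Health(100))
enemy = Entity("enemy", Position(9, 9), Health(30))
chest = Entity("chest", Position(10, 0), Openable(radius=2.0))
world = [player, enemy, chest]

proximity_open_system(world, player)      # player is 10 units away
far_state = chest.get(Openable).is_open

player.get(Position).x = 9                # player walks up to the chest
proximity_open_system(world, player)
near_state = chest.get(Openable).is_open
```

The same `Health` component is attached to the player and the enemy, while only the chest carries `Openable`, so the logic skips everything that can’t be opened.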

The rendering engine is similar to Unreal’s, but with limited access to lighting and materials. Creating high-fidelity games is still viable, as there are high-quality visuals with reflective materials and particle effects, adding to realistic gameplay. Core’s physics engine is based on Unreal’s, so it supports rigid bodies, gravity and basic collision, making it efficient for arcade and platformer games.

The animation system comes with pre-rigged assets—built-in characters with a set of animations like walking—but custom rigging is limited. Blend trees are included, smoothing out transitions between animations, like a sequence of running, jumping and then landing. Animations use event-based systems, so they get triggered by player actions and gameplay logic.

Core’s audio engine lets sounds change depending on player actions, like footsteps that get louder as another character comes closer, or grunting noises for jumps. Background audio for specific locations is included, and devs are able to adjust its volume and layers, with low-latency playback for multiplayer. Low-latency playback means players are able to hear other players’ actions in real time when in the same world.

Core is able to support up to 32 players at a time, which is ideal for battle arenas or co-op missions. The networking module comes into play for multiplayer to function efficiently. All players are updated as the world shifts, so everyone sees the same game world. Objects and positions appear on all screens at the same spot via cloud syncing, while event broadcasting ensures game updates are visible to all players at once.

The scene graph is a node-based hierarchy where devs are able to organize their objects into parent-child systems, such as setting a car as the parent and its wheels as the child. Real-time rendering comes with the graph, adding realism by making elements fade away with distance or combining similar objects to cut down on performance lag. Loading assets asynchronously in the background lets them pop up during scenes immediately, further improving performance.
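A parent-child hierarchy like the car and its wheels can be sketched as follows. This is a simplified illustration, not Core’s scene graph: the `Node` class is hypothetical, and it only handles 2D position offsets, leaving out rotation and scale.

```python
class Node:
    """A scene-graph node whose position is stored relative to its parent."""

    def __init__(self, name, local_x=0.0, local_y=0.0):
        self.name = name
        self.local = (local_x, local_y)
        self.parent = None
        self.children = []

    def add_child(self, child):
        child.parent = self
        self.children.append(child)
        return child

    def world_position(self):
        # Walk up the hierarchy, adding each ancestor's offset.
        x, y = self.local
        if self.parent is not None:
            px, py = self.parent.world_position()
            x, y = x + px, y + py
        return (x, y)


car = Node("car", 100.0, 20.0)
front_wheel = car.add_child(Node("front_wheel", 1.5, -0.5))
rear_wheel = car.add_child(Node("rear_wheel", -1.5, -0.5))

before = front_wheel.world_position()

car.local = (110.0, 20.0)        # move only the parent
after = front_wheel.world_position()
```

Moving the car updates both wheels without touching them, which is the main reason to group objects under a parent.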

19. Game Builder Garage

Game Builder Garage is a Nintendo Switch game developed in-house that’s designed to teach programming skills to children. Game Builder Garage’s lessons break down the process of making simple games, like obstacle courses or Tag. Toy-Con Garage from Nintendo Labo was used as the foundation, with Nintendo Innovator turning it into an educational tool then distributing the game globally.

Game Builder Garage lets users program via color-coded logic blocks called Nodons, which represent inputs, outputs and game mechanics. Debugging tools and previews let users see how their game performs live and test it out immediately. Student-developed games are shareable on Switch, but aren’t publishable externally or exportable to PC or mobile platforms. I code my own projects now, but highly visual tools like Game Builder Garage are especially useful for people starting from scratch. The tools and knowledge accumulate, putting aspiring devs on the path toward more advanced tools like the blueprints in Unreal.

20. libGDX

libGDX is a free open-source Java framework used to build 2D and 3D games. Version 1.0 for libGDX came out in 2014, introducing a set of tools for asset management in addition to enhanced physics and rendering engines. Portability was improved, so devs are now able to export to all major platforms. The latest version, 1.14.0, has a stable UI and receives ongoing community fixes.

libGDX’s rendering system is based on OpenGL, which lets devs draw flat 2D shapes and create 3D models. The rendering engine uses OpenGL ES 2.0/3.0, the same graphics API family behind mobile and browser games. libGDX’s shaders allow for custom lighting and color effects, and its texture atlases let devs combine multiple images into a single file. Batching and camera systems add further support for 3D rendering.

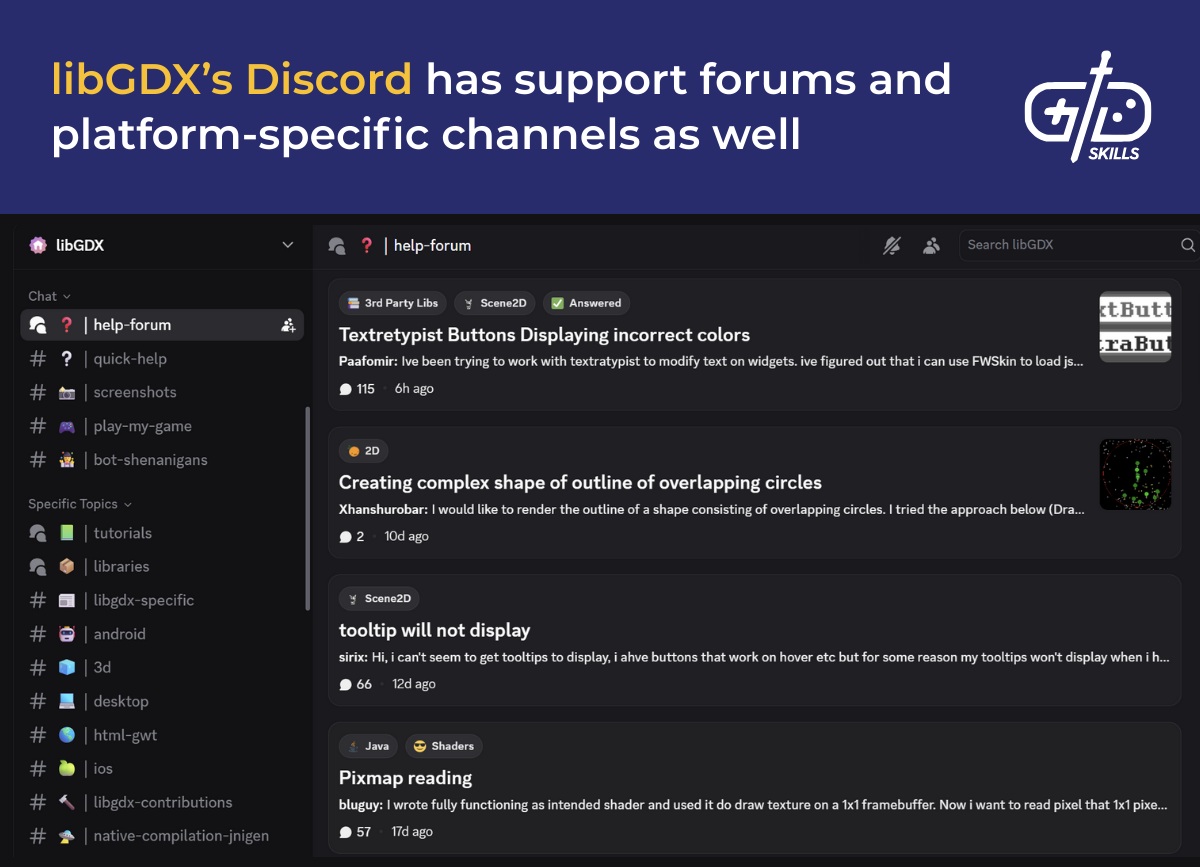

libGDX uses the Box2D physics engine to handle gravity, collisions and 2D simulations. The collision detection system shows what objects are about to be hit and which event to trigger. For beginners and learners, the Discord community is active and helpful, with specific channels for platform export, tutorials and more.

Java is the primary language, so the Java runtime environment is needed for PCs, while Android and iOS have their own specific runtimes. The Java ecosystem comes with IDEs like Eclipse plus libraries and tools that help with the design and export processes. Desktop builds run on the Lightweight Java Game Library (LWJGL), while browser export goes through Google Web Toolkit (GWT). GWT comes with a compiler that translates Java to JavaScript for HTML5, so devs don’t need to rewrite their games for browser export. There’s also built-in support for Android OS, with RoboVM or MOE for iOS.

Asset management comes with bitmap fonts, so devs are able to set custom, stylized fonts for text like scores or dialogue. libGDX also uses JSON/XML for data storage. Game data like enemy locations, character stats and game settings is stored outside the code to reduce the need to code things from scratch.
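Keeping game data outside the code might look like the sketch below. It uses Python’s standard `json` module as a stand-in for libGDX’s own Java utilities, and the file layout and field names (`settings`, `player`, `enemies`) are assumptions made for the example.

```python
import json

# In a real project this text would live in its own file, such as level1.json.
LEVEL_DATA = """
{
  "settings": {"music_volume": 0.8, "difficulty": "normal"},
  "player": {"name": "Hero", "hp": 100, "attack": 12},
  "enemies": [
    {"type": "slime", "x": 4, "y": 7, "hp": 20},
    {"type": "bat", "x": 10, "y": 3, "hp": 12}
  ]
}
"""


def load_level(text):
    """Parse level data and return (settings, player stats, enemy list)."""
    data = json.loads(text)
    enemies = [(e["type"], e["x"], e["y"], e["hp"]) for e in data["enemies"]]
    return data["settings"], data["player"], enemies


settings, player, enemies = load_level(LEVEL_DATA)
```

Adding a third enemy or changing the player’s attack value then only means editing the data, not the game logic.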

21. Clickteam Fusion

Clickteam Fusion is a game engine developed by Clickteam, the software studio known for tools like Multimedia Fusion and The Games Factory. Devs don’t need to know how to code, as Clickteam Fusion comes with a drag-and-drop interface and an event-based logic system. It was designed for 2D games, specifically educational and arcade-style games, with costs varying by edition. The Standard edition caters to hobbyists and indie devs with add-ons for export options, while the full-access Developer version is for professional devs and studios.

The visual scripting uses a block-based event editor, making it easy for beginner devs and learners. Logic is built via conditions, such as an enemy spawning when a player interacts with a plant. Events are organized into rows and columns via the event editor, with devs able to group the logic for reuse through the event editor’s logic module. Visual programming works with the drag-and-drop interface to eliminate the need for scripting, so devs are able to select objects and behaviors from a menu or a list.
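Event-based logic of this kind boils down to rows of conditions paired with actions. The sketch below shows that pattern in plain Python. It is not how Clickteam Fusion is implemented, and the `Event` class and the condition and action names are made up for the plant example.

```python
class Event:
    """One row in an event sheet: run the actions when all conditions hold."""

    def __init__(self, conditions, actions):
        self.conditions = conditions
        self.actions = actions


def run_events(events, state):
    """Evaluate every event row once, as an engine would each frame."""
    for event in events:
        if all(condition(state) for condition in event.conditions):
            for action in event.actions:
                action(state)


def player_touches_plant(state):
    return state["player_pos"] == state["plant_pos"]


def no_enemy_yet(state):
    return not state["enemies"]


def spawn_enemy(state):
    state["enemies"].append("enemy")


events = [Event([player_touches_plant, no_enemy_yet], [spawn_enemy])]

state = {"player_pos": (0, 0), "plant_pos": (3, 0), "enemies": []}
run_events(events, state)                 # player is away from the plant
enemies_before = list(state["enemies"])

state["player_pos"] = (3, 0)              # player reaches the plant
run_events(events, state)
run_events(events, state)                 # second pass: no_enemy_yet blocks a duplicate
enemies_after = list(state["enemies"])
```

Reusing a condition such as `no_enemy_yet` across several rows is the scripted equivalent of grouping logic for reuse in the event editor.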

Clickteam is currently at version 2.5 with improved performance for platform support and editors. The DirectX 11 runtime brings enhanced graphics and compatibility, so there’s less lag when rendering on modern PCs. IF-THEN logic gives devs more control, and global event qualifiers apply the same logic to multiple objects. Version 2.5 comes with a built-in profiler that analyzes the game’s performance to point out causes of lag for editing and debugging. The free version is available to download on Steam.

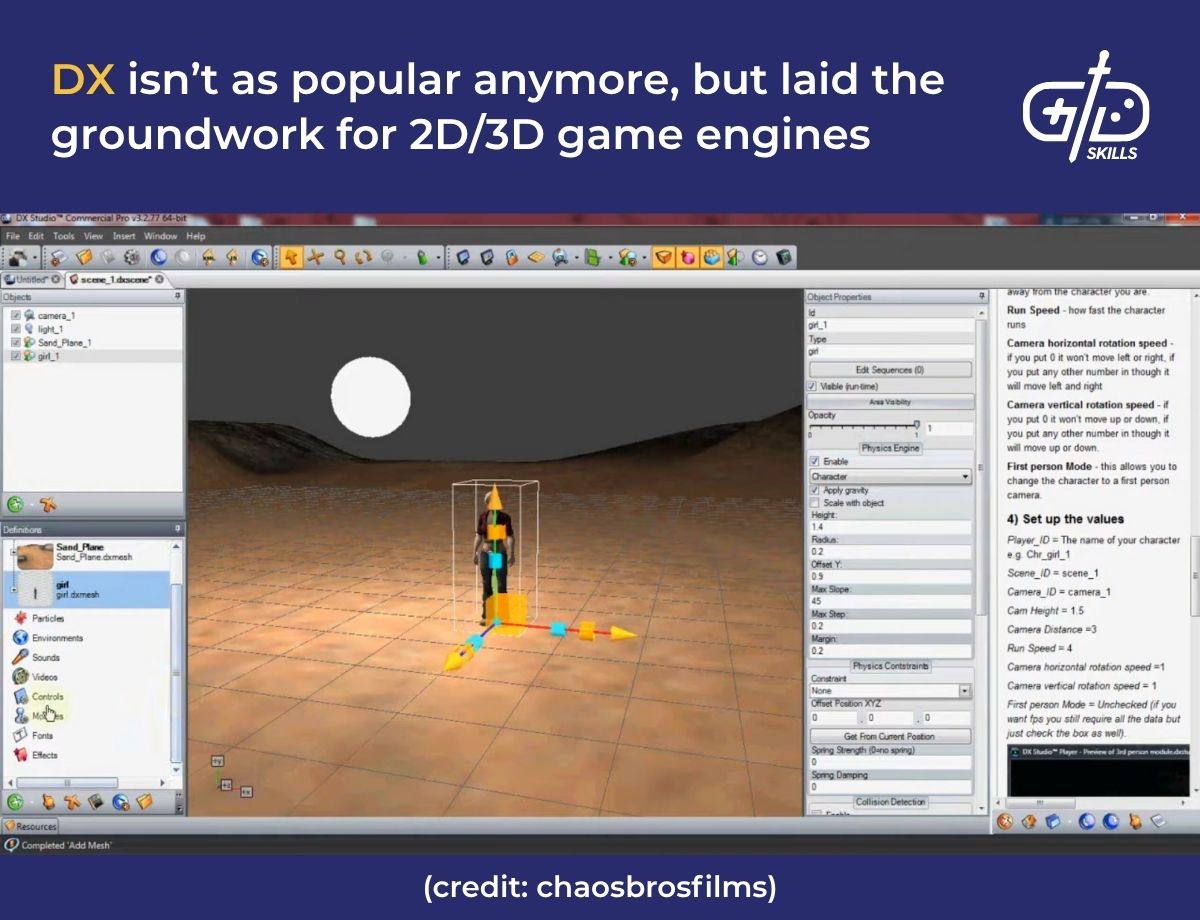

22. DX Studio

DX Studio is a complete IDE and game engine developed by Worldweaver Ltd. that became obsolete after its final release in 2010. DX Studio focused on 3D game development, specifically simulations, and was powered by DirectX for rendering. The toolkit came with an asset manager for models and graphics, as well as scripting and debugging tools.

DX Studio was discontinued with no direct successor but had an influence on other game engines like Unity and Godot. DX’s main scripting language was JavaScript, accessible to beginners through the IDE’s code editor and debugger. The editor pointed out syntax errors and completed repetitive commands automatically, while the debugger let devs run and fix their logic in real time. The JavaScript scripting convinced other engines to base their own languages off JavaScript, like Unity’s UnityScript. DX Studio combined a full IDE with scripting and 3D editing, and the environment compiler helped run and test games quickly for fixes. This combination inspired Unity, Godot, and Construct to do the same.

The DirectX graphics API, Microsoft’s graphics library for Windows, was built into DX Studio. Graphics were rendered in real time as a result, enhanced with dynamic lighting and shading tools. Devs were able to add textures to bring out realism in their game worlds, like grassy terrains. The physics engine came with basic collision and rigid body dynamics, so devs were able to execute actions like a ball bouncing off a wall.

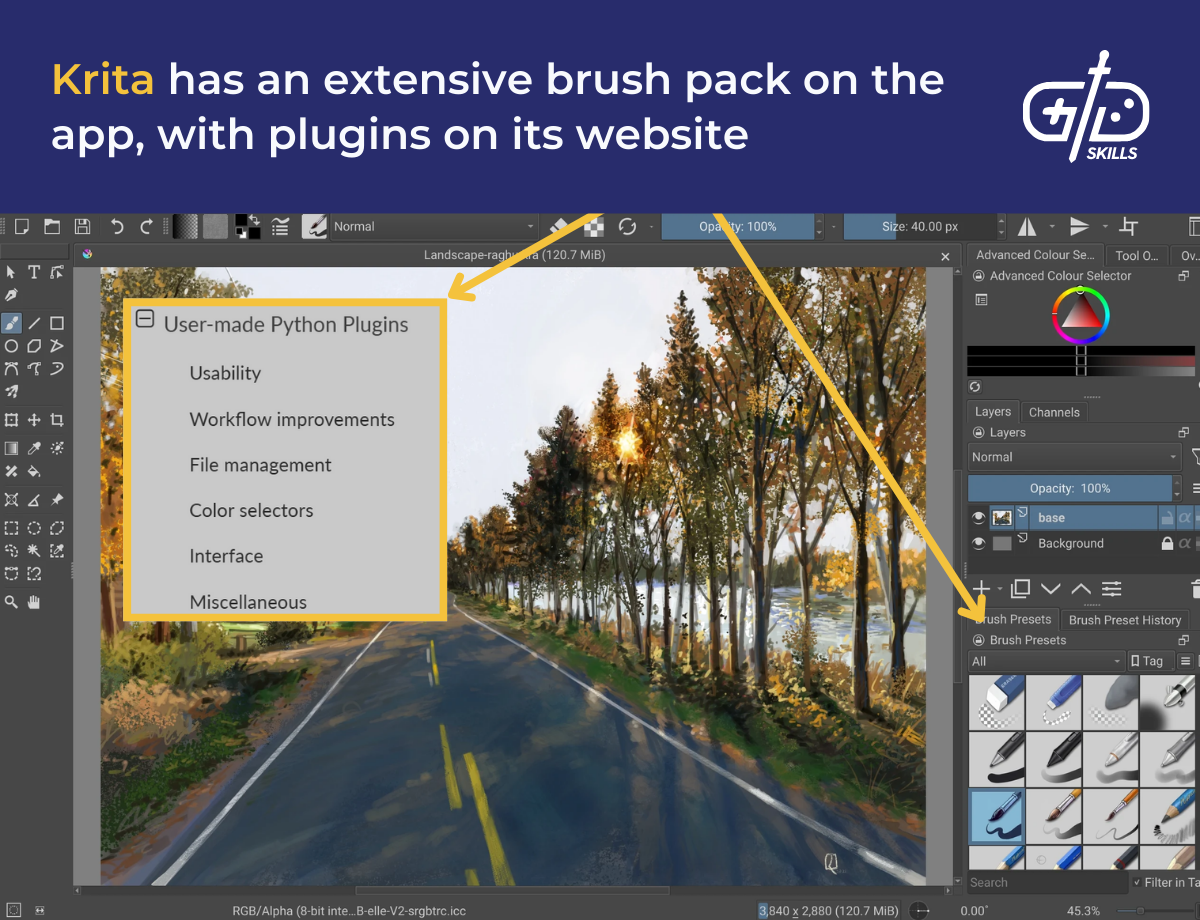

23. Krita

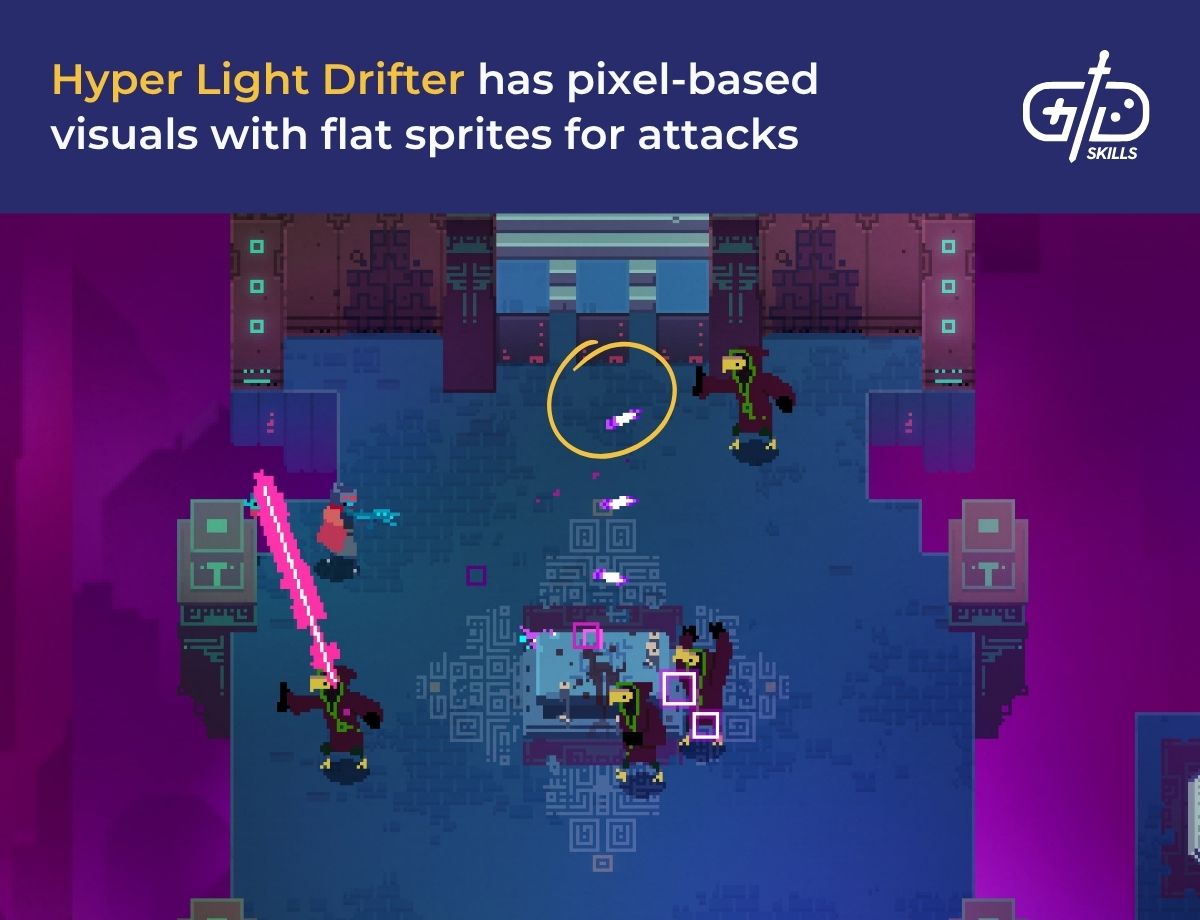

Krita is a painting and 2D animation software designed for artists and animators that’s both free and open-source. The absence of licensing fees makes Krita ideal for indie devs, hobbyists and studios on a limited budget. Games with pixel-based design like Celeste and Hyper Light Drifter used Krita to create concept art and polish 2D effects.

Krita has a customizable UI and a variety of brushes and animation tools. The infinite canvas makes it efficient to map out larger concepts, character sheets or world maps. For 2D sprite sheets and layered VFX, layer management supports the layer types outlined below.

- Raster: Pixel-based, similar to painting

- Vector: Scalable shapes like logos or UI buttons

- Filter: To apply effects like blur or sharpen

- Group: Layers are organized to avoid clutter

- Clone: Edits on one layer are mirrored across all, or selected ones

Krita’s 2D animation tools let users draw on a frame-by-frame basis with a timeline to organize the frames. Onion skinning is a feature that shows the previous or next frame as a preview, which is essential to animate smoothly. Cuphead’s animators used workflows similar to Krita, but in Toon Boom. The UI comes with dockable panels, so users are able to move tools and brush sets around freely. Light and dark modes are available, with color accents for variety.

Krita supports Python scripting, which lets users automate tasks or build tools for custom use. Users are able to extend Krita via plugins, which function similarly to Blender’s add-ons. Krita’s active community has forums and a Discord server for contributor channels, making it ideal for feedback and finding plugins. There are frequent updates on GitLab, and Krita has no royalty fees for commercial use.

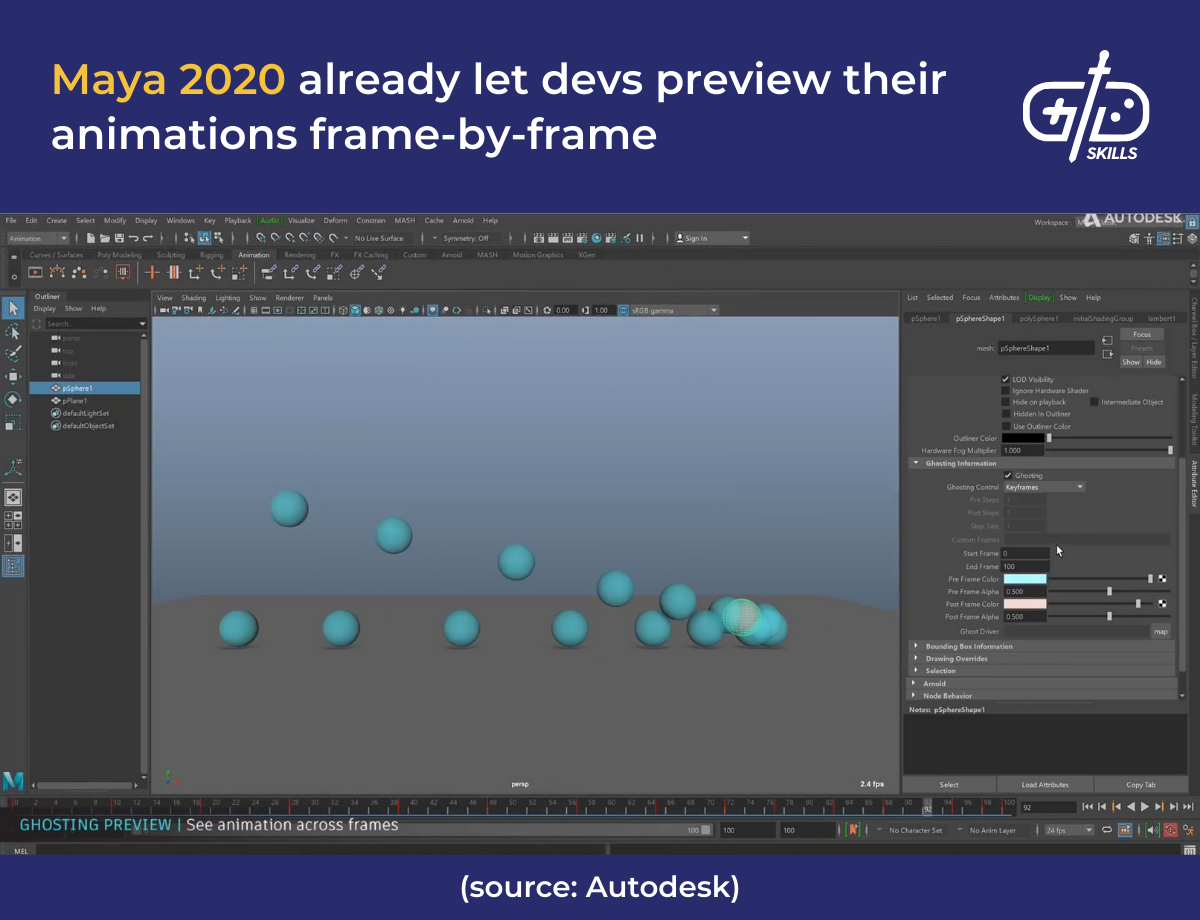

24. Maya Autodesk

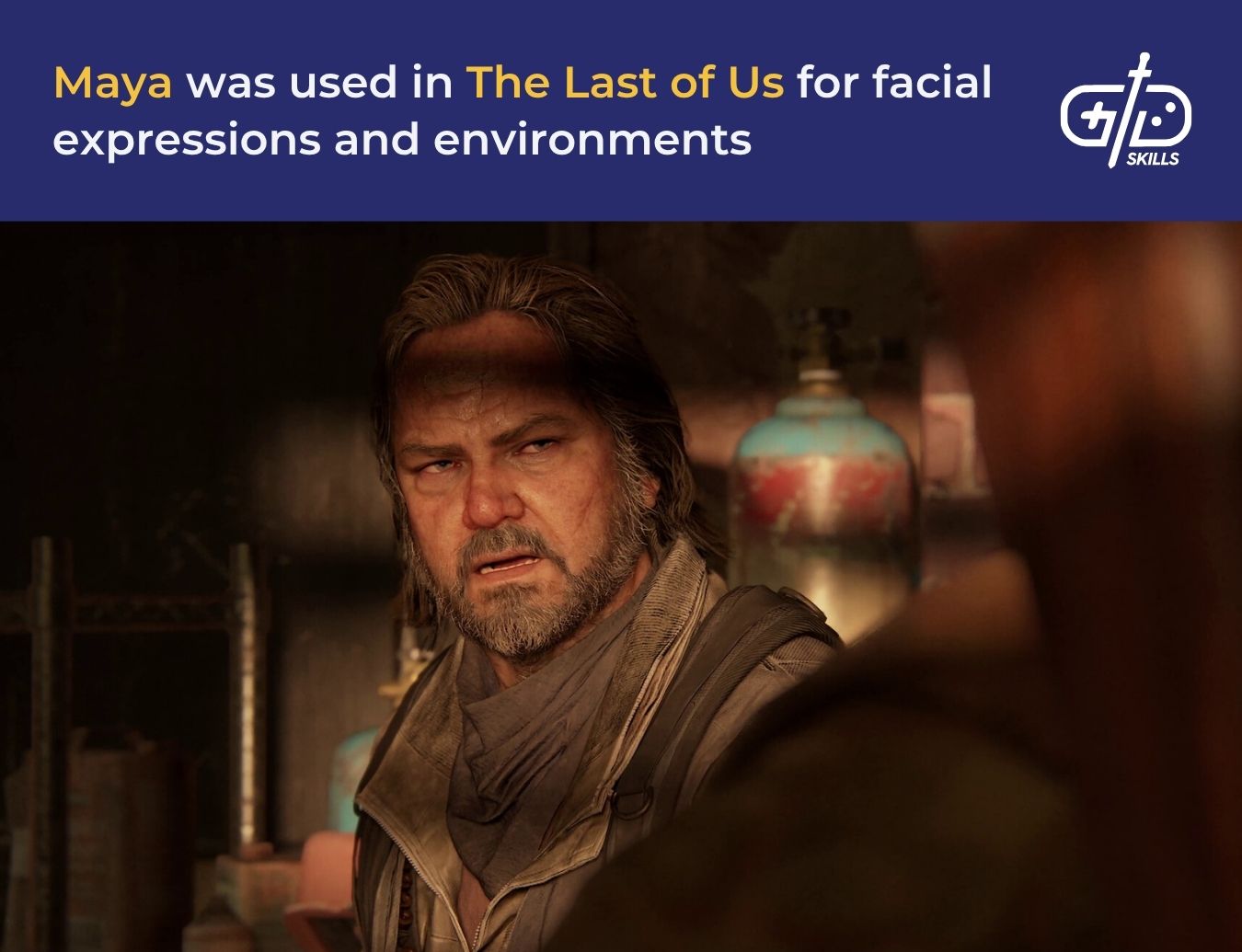

Maya Autodesk is professional-grade software for 3D modeling, animation, and rendering that was created by Alias but is owned and developed by Autodesk. Maya has been used for both AAA games like The Last of Us and films like Avatar. The steep learning curve means it isn’t ideal for beginners. Maya Autodesk is subscription-based, with both premium pricing options and the less expensive Maya LT.

Maya LT is lightweight but retains all the core modeling and animation tools, letting devs export to game engines like Unity and Unreal. Maya LT doesn’t have Bifrost or full Arnold, like the premium version, but its major features and lower cost make it better suited to indie devs. Bifrost is a node-based system to simulate fluids, smoke or particles. It’s a no-code system, using visual scripting just like Unreal’s Niagara. The Arnold rendering engine lets users render realistic lighting, shadows and materials. Ray tracing is included as well, making it ideal for cinematic gameplay and assets.

Maya’s latest version has enhanced tools for animation and scene layering, on top of the rigging, motion capture, and keyframing tools added in previous editions. Updated animation curve tools make it even easier for devs to fix motion arcs and timing. The slider was enhanced to give intuitive control over VFX timing, like explosion effects for successful hits. Improved scene layering also offers more control over grouped elements like facial movements, helping organize complicated scenes.

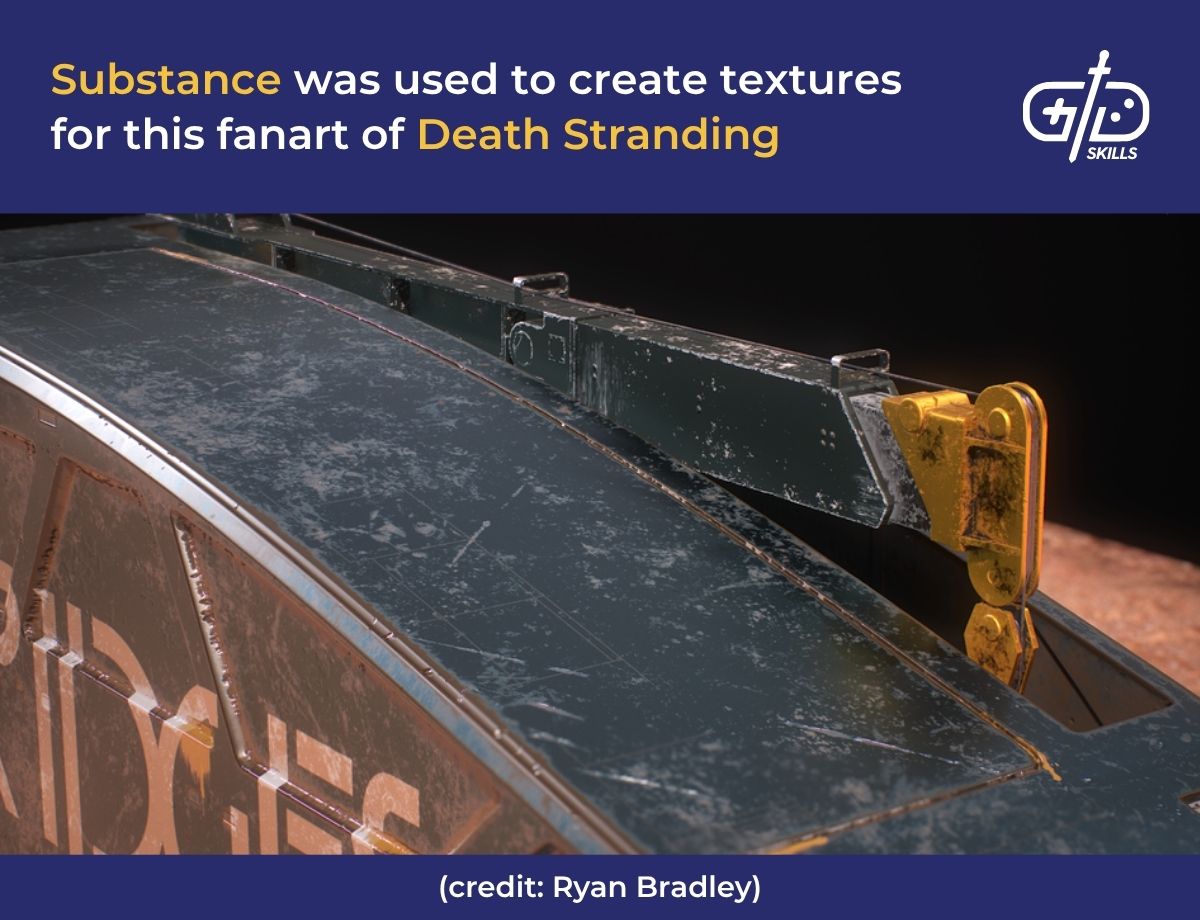

25. Substance 3D Painter

Substance 3D Painter is a tool that lets artists paint directly on 3D models, with a viewport that shows live changes to enhance precision. Substance was developed by Allegorithmic but acquired by Adobe in 2019 and became a part of the Adobe Creative Cloud ecosystem, next to Photoshop and Illustrator. Substance is part of Adobe’s Substance 3D Suite and has additional tools for texturing and physically based rendering (PBR).

Adobe’s Substance Suite comes with Designer, Sampler and Stager, which let artists create materials, turn photos into textures and map out scenes, respectively. Designer comes with a node-based editor that lets artists build materials using logic blocks so there’s no need to manually paint. Variations of the same material, like a chunk of rusted material, are generated automatically, so a single asset is reusable across multiple props.

Sampler uses AI to turn real-world photos of textures like a brick wall into PBR textures to use in-game. PBR involves creating materials that respond realistically to light, like a highly reflective metallic surface. Substance lets artists stack effects and textures similarly to Photoshop, using layers for easy control. Cloud libraries allow assets to be shared across teams and apps, making it easier for large teams to follow the same process and get the same final product. Games like The Witcher 3 and Call of Duty used Substance for realistic weapons and environments.

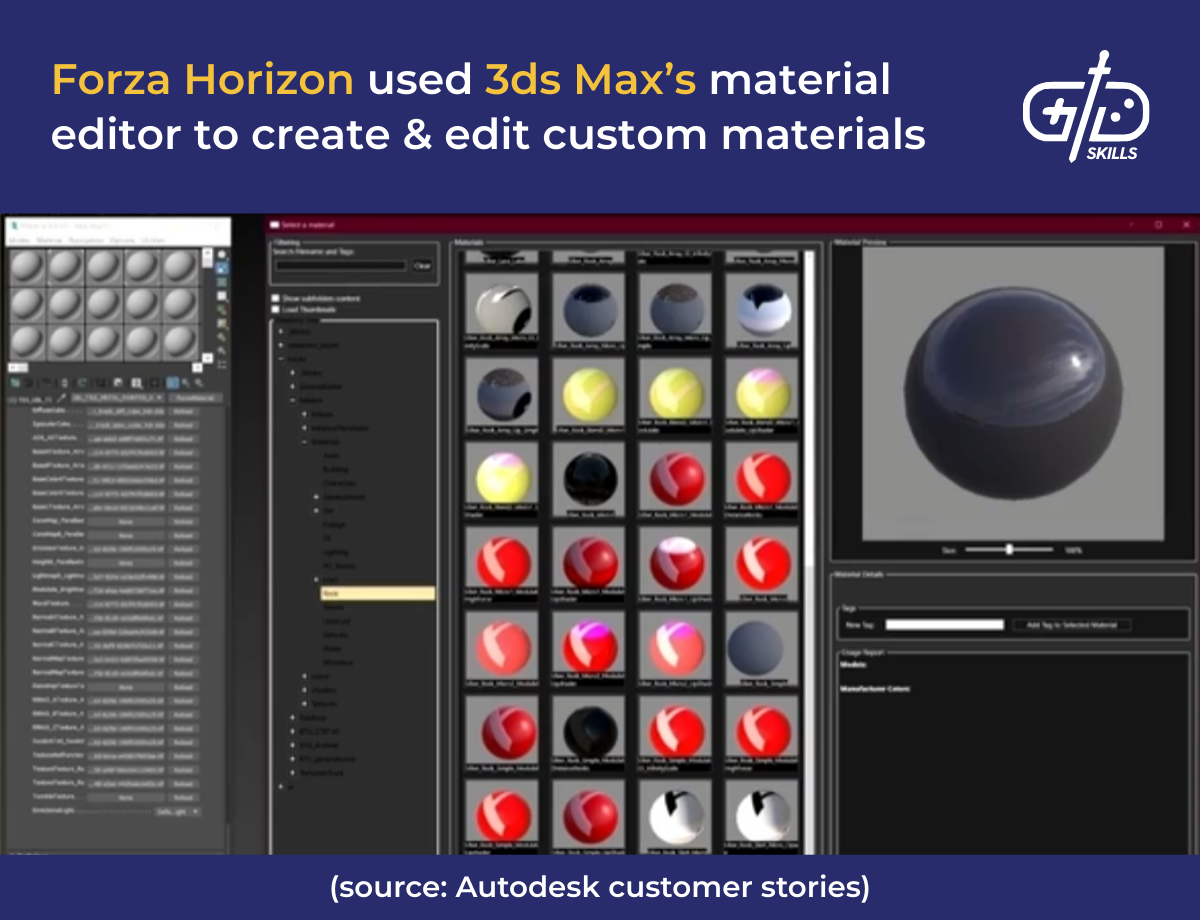

26. 3ds Max

3ds Max is professional 3D software for modeling, animation and rendering developed by Autodesk. 3ds Max was first released in 1996 under the name 3D Studio MAX, succeeding the DOS-based 3D Studio, and was used to map out early 3D workflows in both games and films. It’s been used in AAA titles like Far Cry and Hitman, known for their cinematic VFX. The latest version is 3ds Max 2026 according to the yearly release cycle, with improved viewports and plugin support.

I used 3ds Max when I started learning how to make game assets. 3ds Max is the most natural to use for programming-minded devs since it starts users off with simple shapes that they’re able to stack modifiers on to. Maya and Blender are the standard graphics tools now, but 3ds Max is an ideal tool to start with.

The software churns out high-res scenes with advanced particle simulations, as well as lighting effects, which all need high-end hardware to function smoothly. The system requirements for 3ds Max have been laid out below.

| Hardware | Minimum Requirement | Requirement for Complex Projects |
|---|---|---|
| RAM | 8 GB | 64 GB |
| GPU | 4 GB VRAM (DirectX 12) | 8 GB+ VRAM (Certified GPU) |
| OS | Windows 10/11 (64-bit) | Windows 11 Pro |
| CPU | Intel/AMD multi-core with SSE4.2 | High-frequency multi-core |
| Disk Space | 9 GB free | SSD with 100+ GB |

3ds Max has a monthly subscription fee of $205, a flexible option for freelancers and indie devs that’s ideal for short-term projects. The annual subscription averages $1,800 for long-term production, which is better for large studios and professionals. 3ds Max integrates with the Autodesk ecosystem, including Maya, MotionBuilder and Revit, as an added benefit for teams working across these tools.
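A quick calculation shows where the annual plan starts to pay off. The sketch uses only the two prices quoted above, which may differ from current Autodesk pricing, and the variable names are illustrative.

```python
import math

MONTHLY_FEE = 205     # USD per month, as quoted above
ANNUAL_FEE = 1800     # USD per year, as quoted above


def monthly_total(months):
    """Total cost of paying month to month for the given number of months."""
    return MONTHLY_FEE * months


# First month count at which paying monthly costs at least as much as a year upfront.
break_even_months = math.ceil(ANNUAL_FEE / MONTHLY_FEE)

# Savings from the annual plan over twelve monthly payments.
full_year_savings = monthly_total(12) - ANNUAL_FEE
```

On these figures, a project that needs 3ds Max for nine months or more is cheaper on the annual plan, and a full year on the monthly plan costs $660 more.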

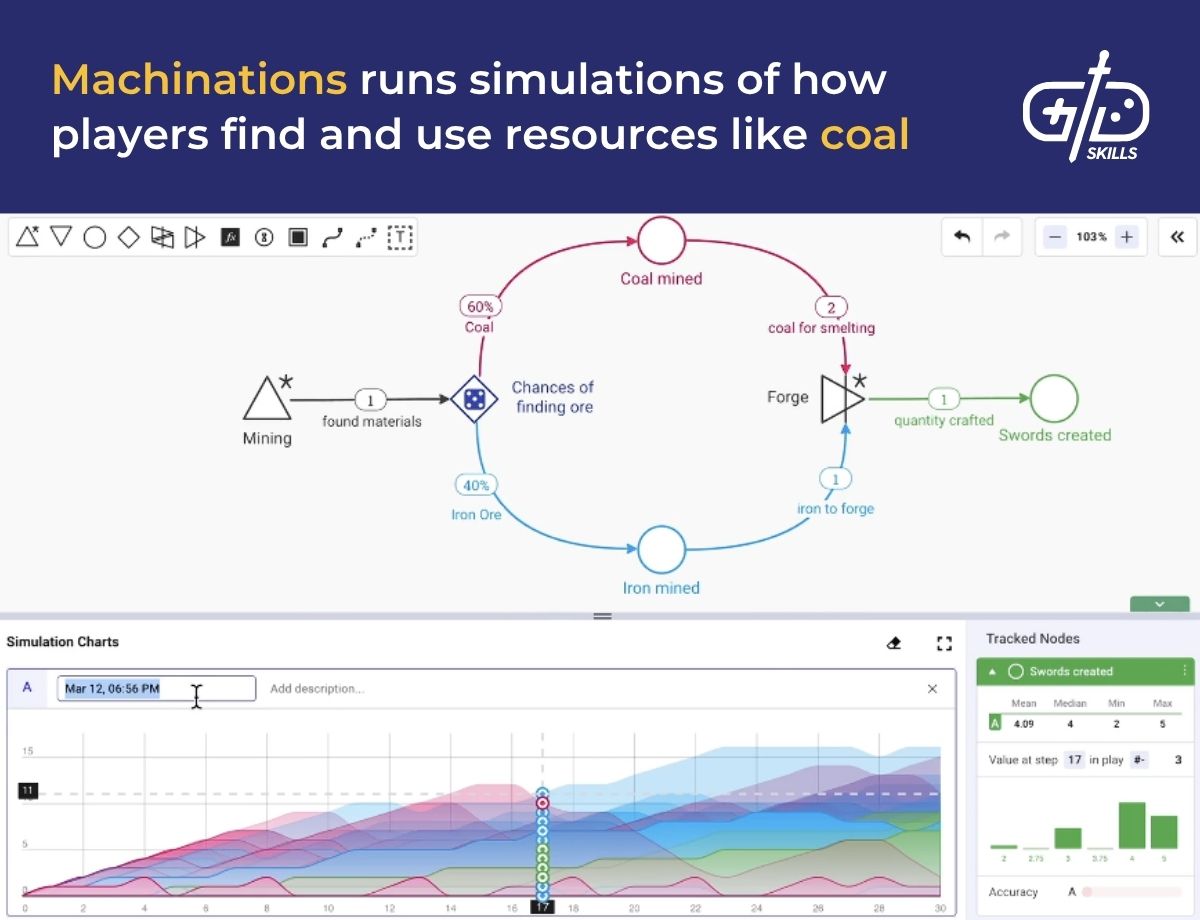

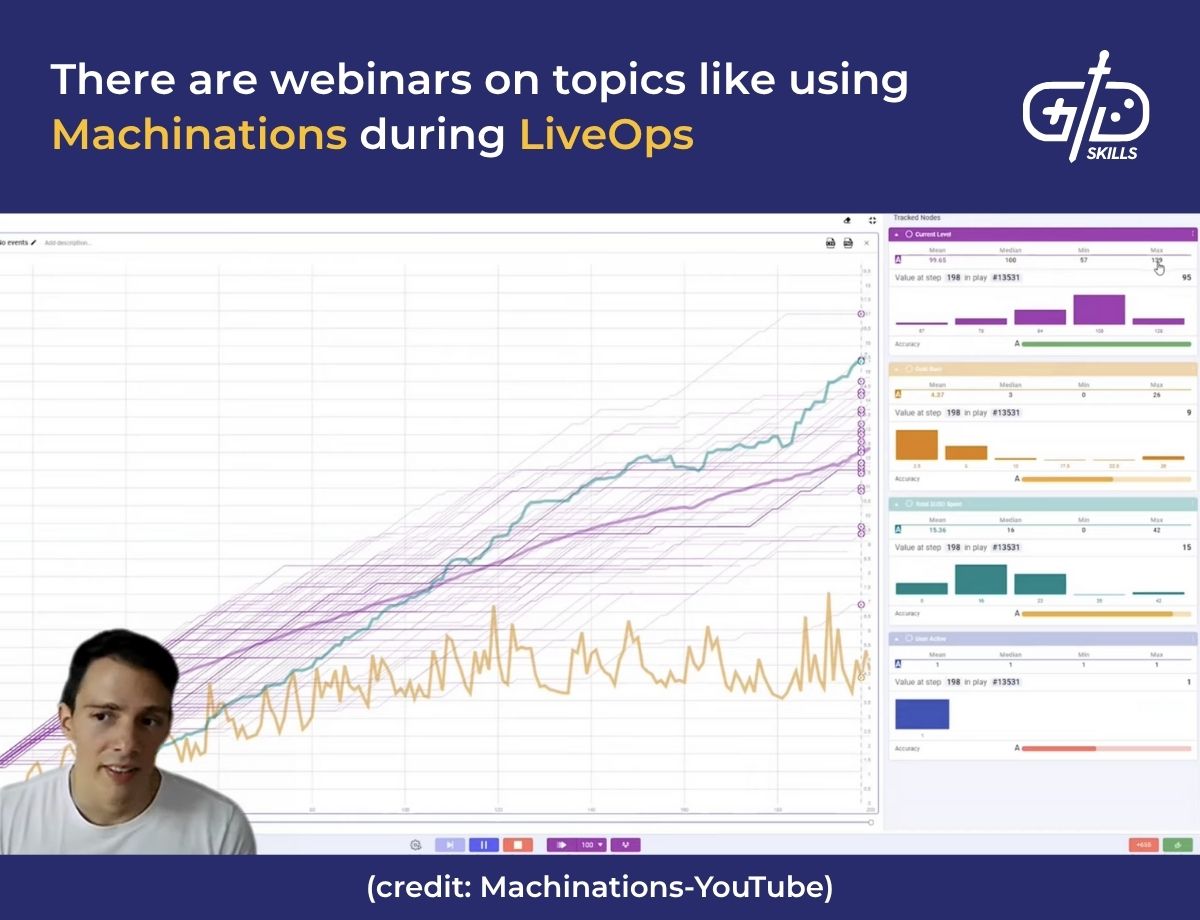

27. Machinations

Machinations is a browser-based tool to simulate and visualize games’ economic systems and let devs test them out. Simulations of the game’s economy are run in real time or step-by-step for devs to see how resources, choices and events affect outcomes. Machinations uses node-based diagrams, so devs build systems using interactive flowcharts that represent resources, actions and logic. Resource flow is simulated, including both how players earn currency or resources, and spend or lose them. Exchanges like trading and crafting are simulated, helping devs design systems for XP and implement loot drops as needed.

Sources and converters represent inputs and transformations. Inputs refer to resources, like gold, and transformations refer to the events that affect the input, like spending gold to buy an item. There are graphs that plot the simulation and show how the economy changes with gameplay, like currency inflation and player progression.

Machinations lets devs add event triggers using logic gates to test different outcomes. Adding a rule, like an upgrade unlocking once the player reaches 1000 XP, simulates whether players progress faster in pursuit of the reward. Devs are able to change the game mechanics for optimal outcomes in this way, with plenty of wiggle room before committing to the code.
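The idea behind this kind of simulation can be approximated in a short script. The sketch below is not Machinations itself: it models one source (gold income), one converter (gold spent for XP) and one gate (the 1000 XP unlock), and all rates and parameter names are assumptions chosen for illustration.

```python
def simulate(gold_per_step, gold_cost, xp_per_purchase, xp_goal, max_steps=10_000):
    """Return the step at which the XP goal is reached, or None if it never is."""
    gold = 0
    xp = 0
    for step in range(1, max_steps + 1):
        gold += gold_per_step                  # source: the player earns gold
        purchases = gold // gold_cost          # converter: gold is traded for XP
        gold -= purchases * gold_cost
        xp += purchases * xp_per_purchase
        if xp >= xp_goal:                      # gate: the upgrade unlocks
            return step
    return None


baseline = simulate(gold_per_step=10, gold_cost=50, xp_per_purchase=100, xp_goal=1000)
boosted = simulate(gold_per_step=25, gold_cost=50, xp_per_purchase=100, xp_goal=1000)
```

Comparing `baseline` with `boosted` shows how much faster the unlock arrives when income rises, which is the sort of pacing question to settle before committing values to code.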

Machinations makes use of visual models and diagrams to showcase issues like player-found exploits or points where progress frequently stops. Highlighting and resolving these issues helps ensure the game’s pacing is balanced, neither too fast nor too grindy. During live operations, this lets devs iterate immediately to further refine the game’s economy.

28. Articy

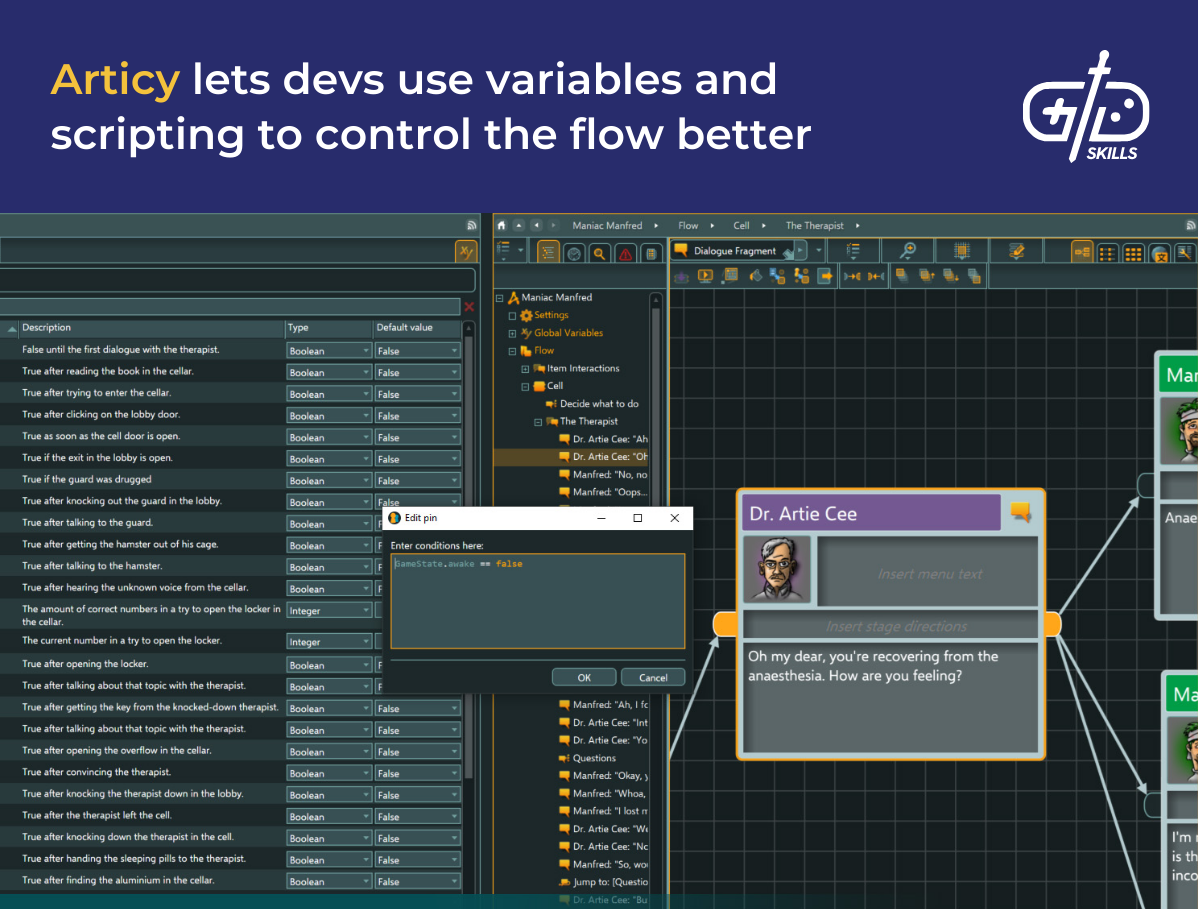

Articy is a narrative design tool that lets devs map out story elements with story blocks to keep track of key events and affected characters. Articy breaks dialogue into individual exchanges, letting devs set conditions, choices and speaker tags. Speaker metadata keeps a record of who’s speaking and how they’re speaking. The tool is ideal for narratively complex games with multiple branching plotlines, like The Witcher and Game of Thrones.

Articy isn’t a game engine, but it exports to engines like Unity or Unreal via plugins. The free trial version includes up to 660 objects, so it’s useful for prototyping and testing out concepts, and full-access paid plans are available. Articy:draft version 3 introduced the Unity and Unreal export plugins along with flow simulation and improved collaboration tools. Flow simulation lets devs test out narrative logic before coding it in.

Articy’s latest version is Articy:draft X, which came out in August 2025 and is undergoing active updates. It lets devs manage voice-overs and translations directly with AI extensions. Articy:draft X’s engine importers for Unity and Unreal have also been improved with the Generic Engine Export tool helping with export to other engines. Previous versions of Articy already came with stability updates and minor UI touchups, and added scripting improvements and extended plugin support.

29. Cascadeur

Cascadeur is a standalone 3D animation program developed by Nekki, who developed games like Shadow Fight and Vector, known for their fluid animation and characters. The software, as a result, comes with features that make it ideal for animating characters with realistic physics. Cascadeur evolved from Nekki’s internal tool for hybrid 2D/3D animation and integrates with engines like Unity and Unreal.

The AutoPhysics engine simulates gravity, momentum and collisions to make jumps, falls and combat moves look fluid. The keyframing tool helps edit based on a timeline, making it easier to smooth out the transition between movements like walking and running. AI posing comes into play and offers helpful suggestions to correct poses based on the keyframes.

Cascadeur lets devs export to Unity through the .FBX and .DAE formats, and is compatible with Unity’s animation controller. Unity’s scripts are able to trigger Cascadeur’s animations, despite these not being listed in the Unity Asset store. Cascadeur’s animations are optimized to run with real-time playback and rendering on Unreal. The animations integrate with Unreal’s animation graph and physics systems, tying Cascadeur’s physics realism neatly into Unreal’s high-quality rendering. Devs need to use Unreal’s blueprint visual scripting or C++ to trigger Cascadeur’s animations.

30. Visual Studio

Visual Studio is Microsoft’s IDE to develop and export apps across desktop, web, mobile and cloud platforms. VS runs on Windows. Support for Mac was discontinued in 2024, although VS for Mac is still available for use as a legacy tool. The IDE comes in multiple editions and paid tiers, depending on team size and use.

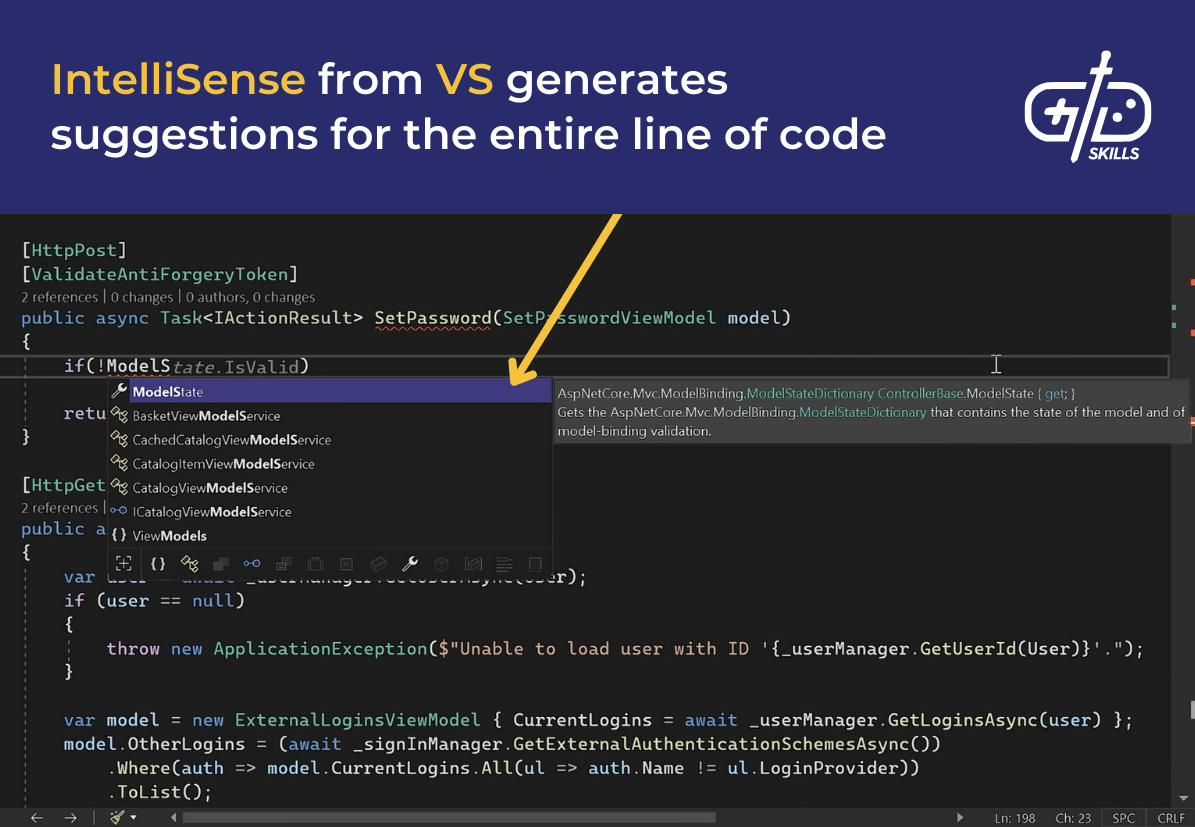

Visual Studio’s latest release has 64-bit architecture along with a range of tools and extensions, so it’s able to take on large projects with little to no memory restrictions. Extensions are available via the VS Marketplace, so users are able to add tools specifically for GitHub, Unity and so on. VS supports C#, C++, Python (with extensions), JavaScript and additional scripting languages, so it’s accessible to devs coming from almost any coding background. IntelliSense even gives users suggestions with auto-complete and code navigation features.

VS Community Edition is free for solo devs, students and indie studios with fewer than 5 people, but it lacks CodeLens and Live Share. CodeLens shows real-time updates above the code, like who last modified a gameplay mechanic, so there’s no need to open Git logs. Live Share lets devs share their coding session with another dev, letting team members navigate and debug the game code together. There’s an active community for this edition, with forums, GitHub discussions and MS Learn resources, making it ideal for beginners and students.

VS Professional Edition comes with CodeLens, Live Share and Git integration, which is an improved version of the version control tools. Azure benefits are included as well, so devs and teams have access to training and support resources. These all make VS Pro ideal for small to mid-size teams that need to collaborate around the same workspace.

VS Enterprise is ideal for large enterprise teams that have complex systems and pipelines. Enterprise comes with IntelliTest and a snapshot debugger. IntelliTest auto-generates unit tests from the code devs write, while the snapshot debugger inspects cloud apps without interrupting them. The included performance profiler identifies bottlenecks to help optimize runtime. Load testing helps out by simulating traffic to test out the systems. VS 2019 is still available, with support for older .NET frameworks and long-term projects. It’s stable with a mature feature set and is ideal for enterprises that are working with legacy codebases.

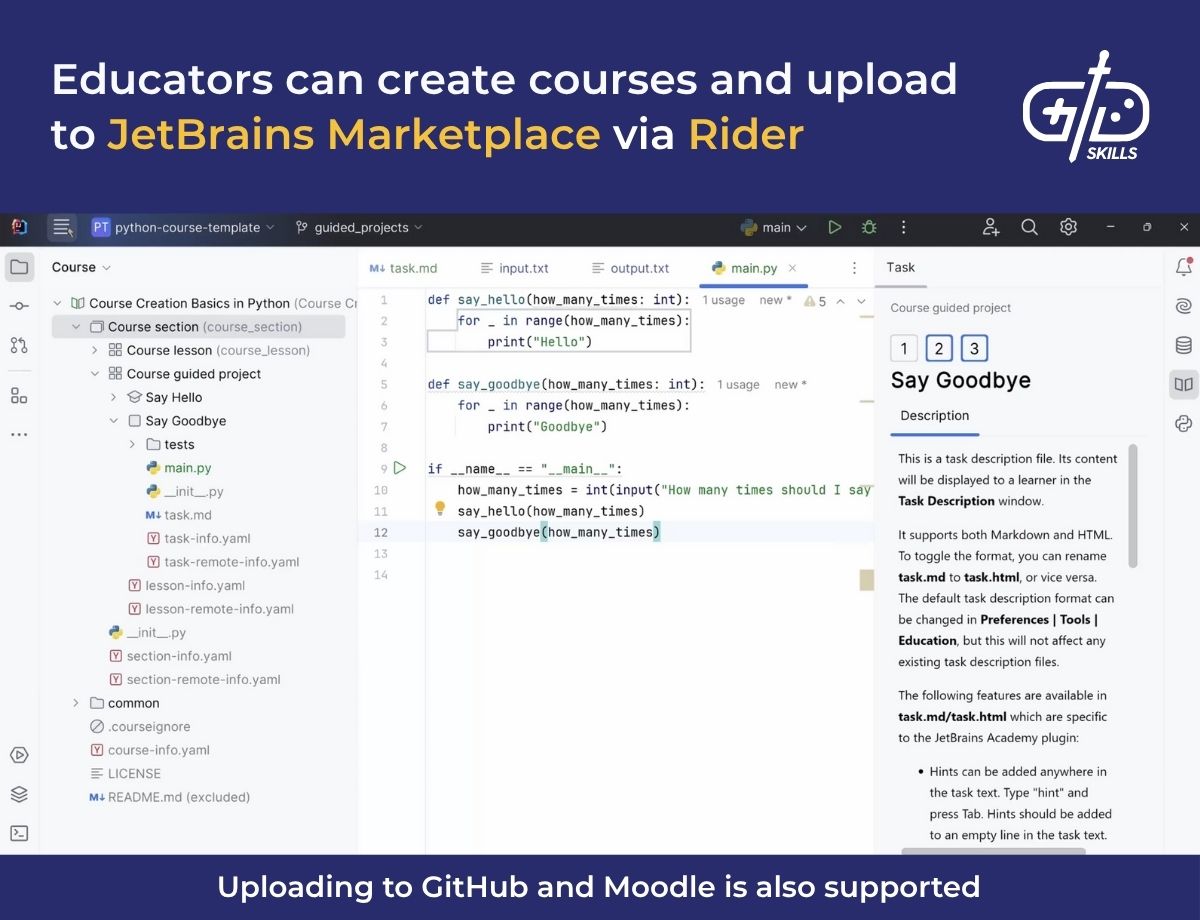

31. JetBrains Rider

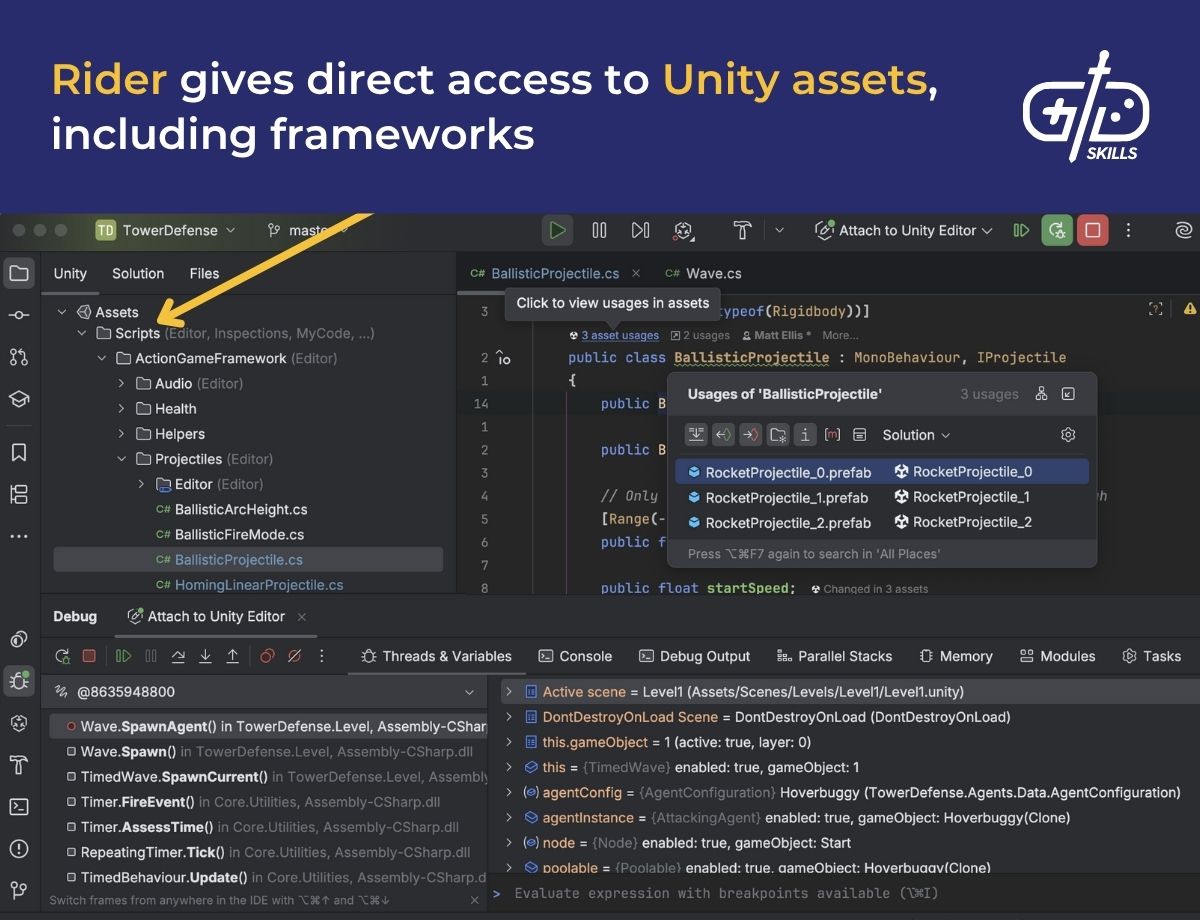

JetBrains Rider is a cross-platform IDE from JetBrains that has run on Windows, macOS and Linux since its first release in 2017. Rider caters to .NET and game development via its IntelliJ platform, which comes with deep code analysis and support for C#, C++, JavaScript, F#, and VB.NET. The IDE is ideal for development alongside game engines, especially Unity and Unreal, as it comes with specific features supporting them.

Rider for Unity has Unity-specific inspections where it detects recurrent scripting errors and gives suggestions for code completion. Devs are able to start or stop Unity play mode directly from Rider and immediately access their assets via the asset indexing feature, which was introduced with Rider version 31.

Rider supports both C++ and blueprint visual scripting, which are the main scripting languages for Unreal. Rider for Unreal integrates with the Unreal Build System, so gameplay engineers and tech artists are able to include animation and physics-based mechanics. The installation size is only 199 MB, which is lightweight compared with Unreal IDE setups and Visual Studio.

Rider’s community is active on both Unity and Unreal forums as well as JetBrains’s community forums, which share extensions plus Unity and Unreal-specific plugins. GitHub has discussions with multiple Unity and Unreal projects that include Rider-specific configurations and tips too. YouTube tutorials provide even more help for beginners and connection points for the community. The JetBrains Blog and Academy deliver regular updates and define .NET and C# learning paths for beginners.

The pricing and licensing for Rider comes in two tiers, with a 30-day trial period and a 3-year plan for both. The Individual plan caters to solo devs and small studios, while the full-access Commercial Org plan is best suited to large studios. There’s a dotUltimate bundle which includes Rider, ReSharper and other JetBrains .NET tools as well.

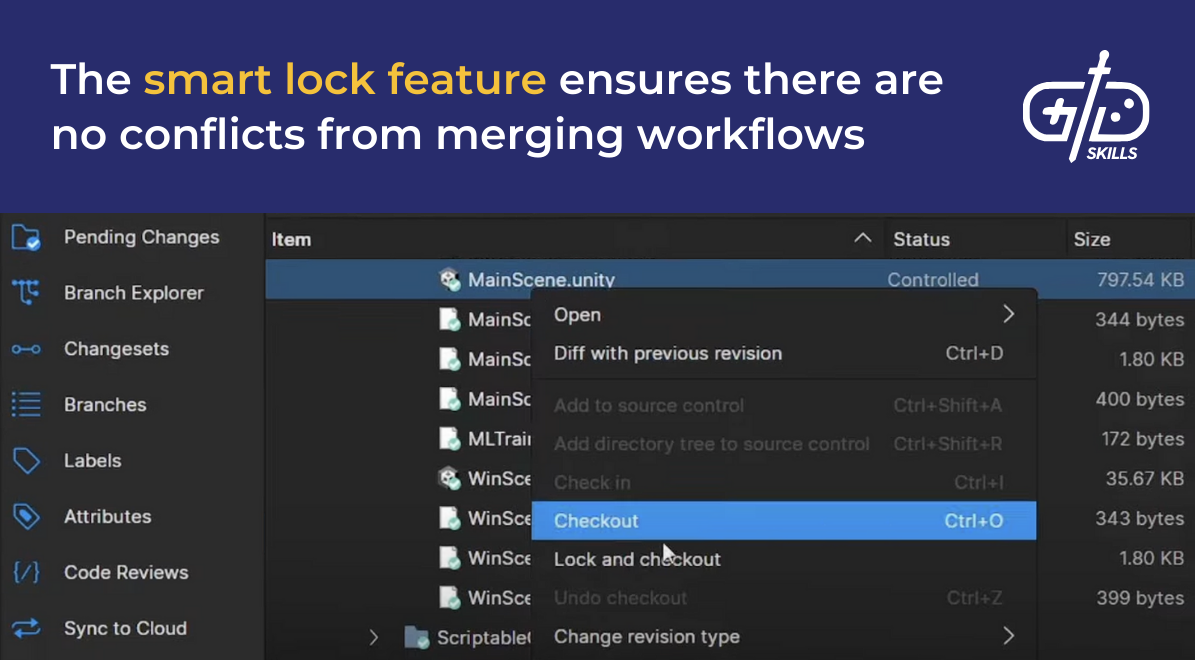

32. Unity Version Control

Unity Version Control is a cross-platform version control system that’s compatible with most major game engines and works on Windows, macOS and Linux. A version control system keeps track of every version of every file, like code, textures and audio, so work isn’t lost. UVS was developed by Codice Software and was previously known as Plastic SCM. It has a free tier that allows up to 3 users and a pro tier for large studios with unlimited users, and is included in the Unity Pro and Enterprise plans.

Plastic SCM was built to integrate with multiple engines, including Unity and Godot, and works through both a CLI and a GUI. Unity Collaborate, in contrast, only integrates with Unity. UC is beginner-friendly and built for small teams, showing only the basic file history. UVS is more advanced and built for large studios, so it’s able to handle branching workflows. UVS allows one team to work on a new weapon system while the other takes on narrative design, for example.

UVS’s merge engine merges code with binary assets, like textures and models, and handles any conflicts that come up when two people edit the same file. There’s a graph-based UI as well, so teams are able to see all the branches, merges and changes, making it easy to track who changed what and why.
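The general idea behind merging two branches can be shown with a file-level three-way merge. This is a generic sketch, not Unity Version Control’s actual merge engine: each file is reduced to a version label, and the function name and sample file names are hypothetical.

```python
def three_way_merge(base, ours, theirs):
    """Merge two branches against their common ancestor, file by file.

    Each argument maps a file path to a content label (for example a hash).
    Returns (merged files, list of conflicting paths).
    """
    merged = {}
    conflicts = []
    for path in sorted(set(base) | set(ours) | set(theirs)):
        b, o, t = base.get(path), ours.get(path), theirs.get(path)
        if o == t:
            result = o            # both branches agree (or both deleted the file)
        elif o == b:
            result = t            # only their branch changed the file
        elif t == b:
            result = o            # only our branch changed the file
        else:
            conflicts.append(path)  # both changed it differently
            continue
        if result is not None:
            merged[path] = result
    return merged, conflicts


base = {"weapon.cs": "v1", "sword.png": "a1", "story.txt": "s1"}
ours = {"weapon.cs": "v2", "sword.png": "a2", "story.txt": "s1"}          # weapon team
theirs = {"weapon.cs": "v1", "sword.png": "a3", "story.txt": "s2",
          "quest.json": "q1"}                                             # narrative team

merged, conflicts = three_way_merge(base, ours, theirs)
```

Here the weapon script and the story file merge cleanly because only one team touched each, while `sword.png` is flagged because both teams edited the same binary asset.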

UVS retains all the existing features from SCM but tightens them for Unity integration and increases the accessibility of the UI for web users. Role-based workflows allow roles to be assigned and permissions to be granted on a team-by-team basis. Cloud deployment was integrated to improve functionality for remote teams. UVS is part of Unity DevOps, which means it comes with build automation and artifact management tools.

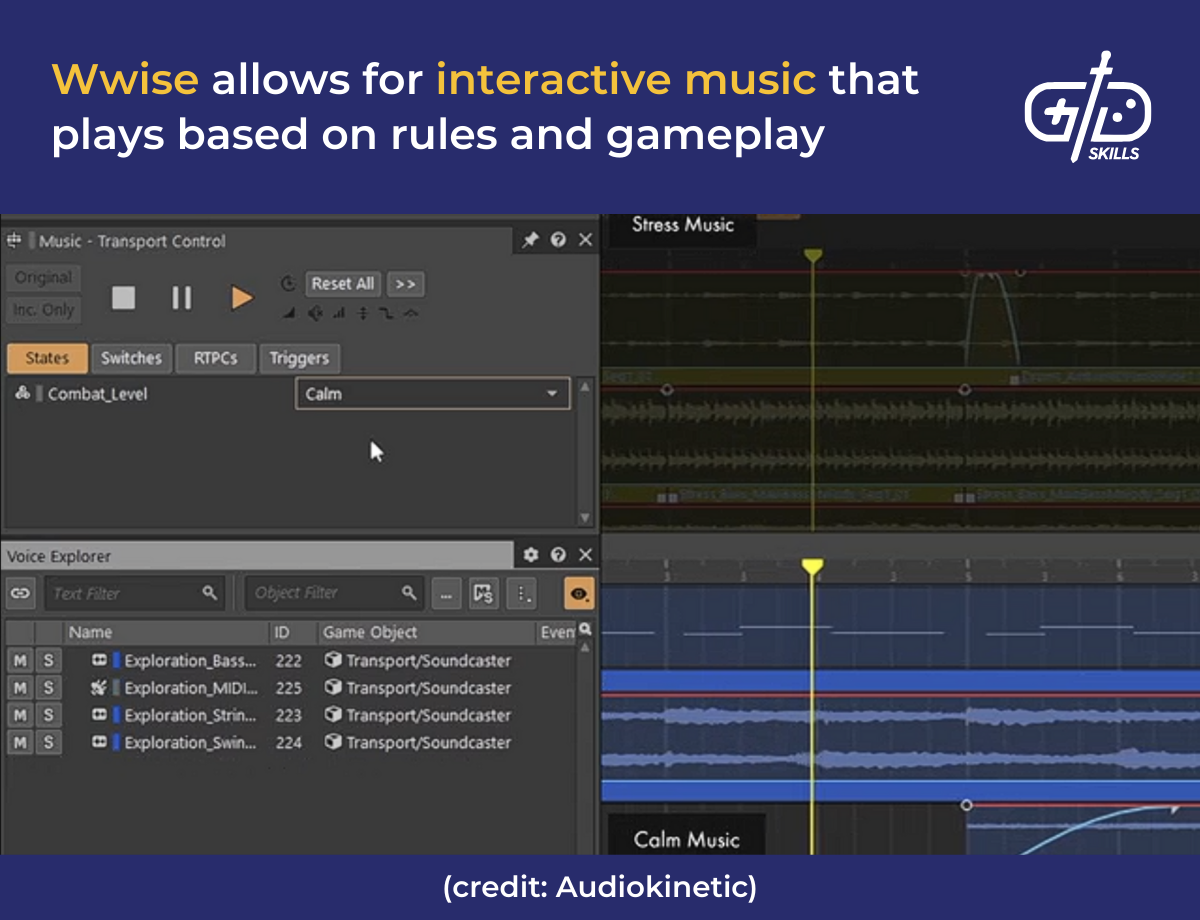

33. Wwise

Wwise is middleware that connects to game engines to activate sound effects and music instead of running them through the engine. Devs create sound effects in a Digital Audio Workstation (DAW) and put them into Wwise, which defines what triggers each sound effect in-game. Wwise determines when to play the crunchy gravel footstep sound instead of the grassy field one. Wwise was first released in 2006, and its developer, Audiokinetic, was acquired by Sony Interactive Entertainment in 2019. It’s currently maintained by the Audiokinetic software devs (who also manage Strata, a sound effects library) and has been used in AAA games like Halo.

Wwise is free for non-commercial and academic use, with a one-time license for the indie version where projects need to have a budget less than $250K. The pro version is geared for projects between $250K and $2 million, including engineering support. Devs are able to install Wwise along with specific SDKs and plugins using the Wwise installation manager. The update manager helps export projects and sync plugins across versions.

Unity and Unreal integration is included, with RTPCs (Real-Time Parameter Controls) to link Unity variables and blueprint and C++ support for Unreal. Wwise lets devs trigger sounds directly from Unity scripts and sync audio up with gameplay sequences for cinematic effects. RTPC helps link game variables like speed and health with sound properties, such as making characters breathe heavily when their health drops past a certain point. Spatial audio allows enemy sound effects to get louder or quieter based on their distance from the player (extremely useful in horror games). Real-time processing means there’s no need to code in all the possible variations.
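An RTPC is essentially a curve that maps a game value to a sound property. The sketch below illustrates that mapping with a piecewise-linear curve in plain Python. It does not use the Wwise SDK, and the function name and curve points are assumptions chosen to match the heavy-breathing example.

```python
def rtpc_curve(points):
    """Build a function mapping a game value to a sound property.

    `points` is a list of (game_value, sound_value) pairs with distinct
    game values; values between points are linearly interpolated.
    """
    pts = sorted(points)

    def evaluate(value):
        if value <= pts[0][0]:
            return pts[0][1]          # clamp below the first point
        if value >= pts[-1][0]:
            return pts[-1][1]         # clamp above the last point
        for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
            if x0 <= value <= x1:
                t = (value - x0) / (x1 - x0)
                return y0 + t * (y1 - y0)

    return evaluate


# Health (0-100) drives breathing volume (0.0-1.0): silent at half health or
# above, growing louder as health drops toward zero.
breathing_volume = rtpc_curve([(0, 1.0), (25, 0.5), (50, 0.0), (100, 0.0)])

volume_at_full_health = breathing_volume(100)
volume_when_hurt = breathing_volume(37.5)
volume_near_death = breathing_volume(0)
```

The game only reports the current health value, and the curve decides how loud the breathing is, so no per-value variations need to be coded by hand.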

The audio editing tool includes volume curves for devs to control how loud a sound gets over time and blend containers to layer multiple tracks and blend based on conditions. This means, for example, combining sounds for wind, rain and thunder when a player enters a specific location. Wwise’s visual sound editor ties everything neatly together, as it gives devs a full interface of all the tools to organize and manage their audio.

Studios use Wwise and other middleware like FMOD for sounds instead of game engines because they come with a larger array of tools and effects. These allow for complicated sound tricks that engines might not have. I remember Wiksy. W. over at Riot converting a few projects over to Wwise and the audio team being very pleased at the results. I’ll most likely use Wwise for the next project that progresses far enough for audio integration too.

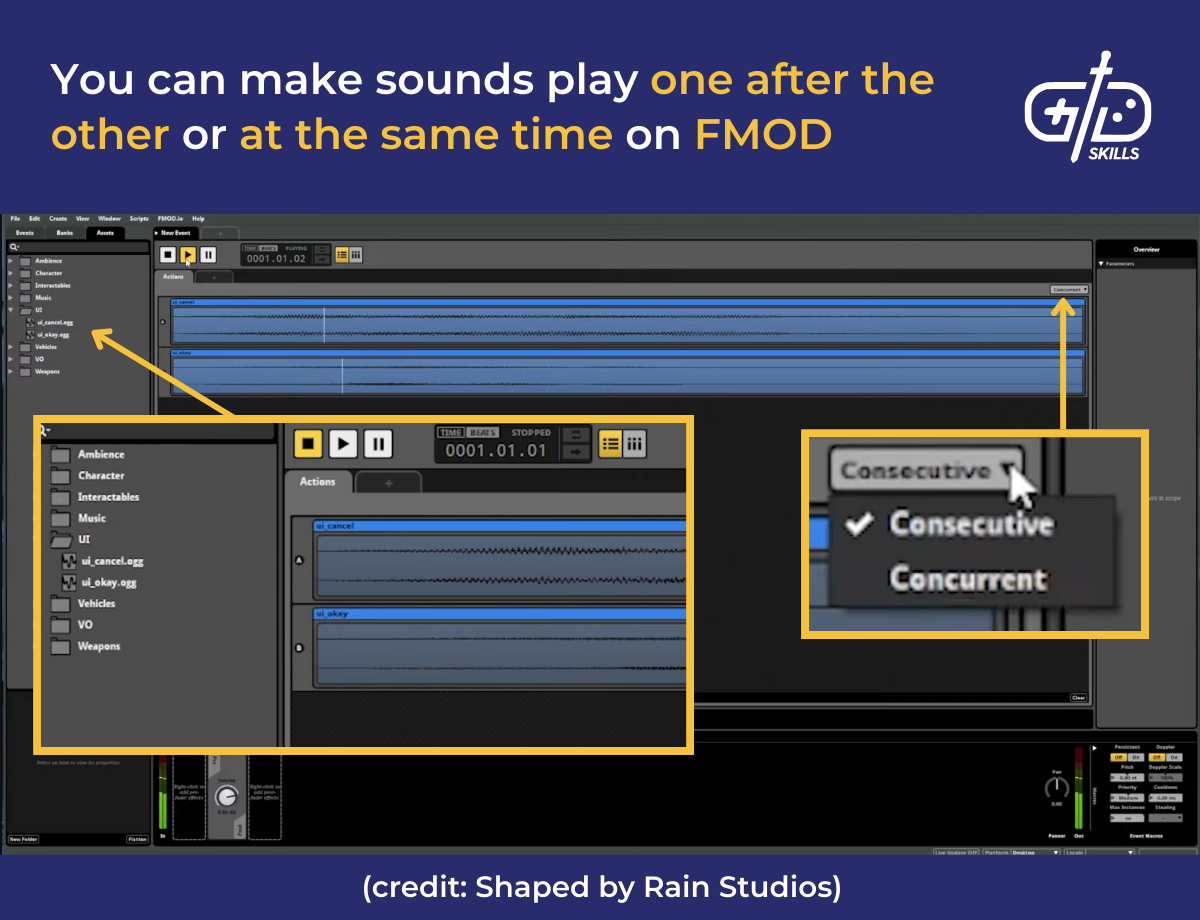

34. FMOD Studio

FMOD Studio is a type of middleware, similar to Wwise, that controls when sounds are played in the game by connecting to game engines. FMOD Designer was the predecessor to FMOD Studio, and was used for sound sequencing and timeline editing. It’s still referenced in older projects. FMOD was developed by Firelight Technologies in 2002 and has since been used in games like Celeste and multiple indie titles.

FMOD is favored for rapid prototyping by small teams because of the speed stemming from its drag-and-drop interface. The user-friendly interface means devs are able to use the event management system to organize their sounds into events like footsteps or explosions. Editing tools include timeline editing, layering and parameter control with real-time audio processing, similar to Wwise.

FMOD comes with built-in plugins for Unity and Unreal as well. Event posting is available so devs are able to trigger sounds from Unity scripts or via visual logic from Unreal’s blueprint. There’s API support for custom engines, so advanced devs are able to use C++, C# or FMOD’s own API to integrate audio logic. We used FMOD multiple times at Riot before switching to Wwise. FMOD was quick and easy to use, so it was great for getting games out of the gate.

Licensing includes four tiers, with costs varying based on the project budget. Licensing is per title, rather than per seat, making it ideal for studios that are publishing across platforms. The free tier is for non-commercial and academic use, or for projects with less than $200K in revenue. FMOD’s indie tier is for budgets under $600K, with the basic plan for under $1.8 million, and the premium tier for over $1.8 million.

35. Valve Hammer Editor

Valve Hammer Editor is the official map editor for games built in the Source Engine, like Half-Life 2 and Counter-Strike: Source. Hammer was previously called Worldcraft and was originally developed by Ben Morris in the mid-1990s for Quake Engine games. Worldcraft was acquired and rebranded by Valve Corporation into Hammer Editor in 1996.

Worldcraft let users build custom maps via entity placement, texture alignment and brush-based geometry. Players were able to add enemies or NPCs with triggers using entity placement. Texture alignment added materials to surfaces while brush-based geometry was used to build walls, floors and other architectural elements. The toolset fostered an active modding community for games like Half-Life, so the Worldcraft modding scene carried over to Hammer.

Valve’s acquisition and rebranding of Worldcraft into Hammer Editor made it the official tool for building maps in HL, CS, Team Fortress and other Source games. Modding is a central element of Valve’s games, since CS started out as a mod, and Team Fortress and Dota are community creations as well. By adding Hammer to Source’s SDK, alongside additional tools, Valve gave players freedom to build entire games.

The Source SDK is available on Steam and is fully free, so Hammer is free by extension. Devs who own a Source-based game like Half-Life 2 are able to install the SDK from the Tools section directly. The additional tools include a model viewer to preview 3D assets and a faceposer to create facial animations. Newer Source 2 games like Half-Life: Alyx use a different editor.

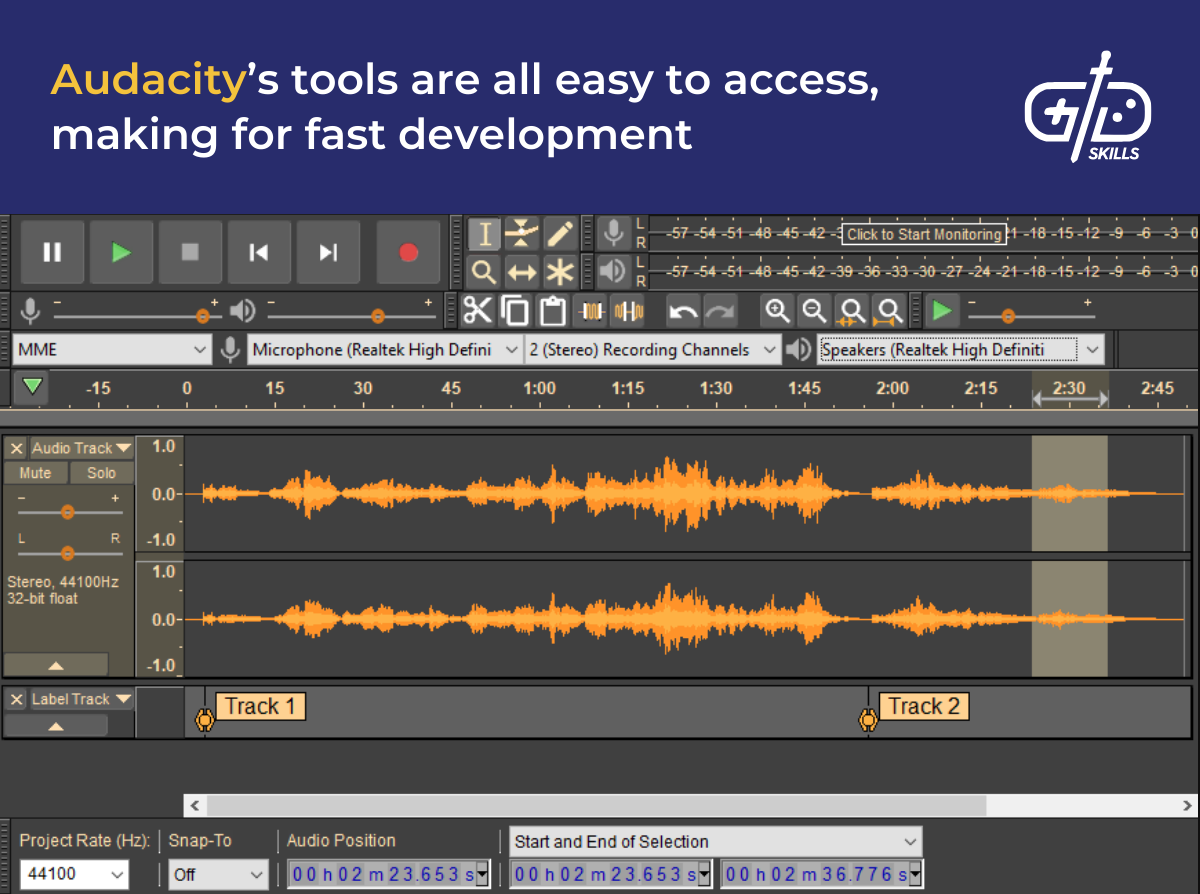

36. Audacity

Audacity is a DAW geared toward adjusting noise levels, applying effects and exporting audio in multiple formats. Although it’s classed as a DAW, Audacity is mainly used to edit existing audio files. DAWs like Reaper are built to create audio from scratch, letting devs record audio or write music with MIDI, which makes them the better fit for full sound production. Audacity was developed by the Audacity Team, is currently maintained by Muse Group, and is completely free and open-source. Its accessibility makes it ideal for indie devs, students and hobbyists. Games like Celeste and Undertale used Audacity to edit dialogue clips and add retro-style sound effects.

Audacity’s core features are multi-track editing, a real-time effects preview and the spectrogram view. Multi-track editing lets devs cut, copy and paste across multiple audio layers, making it easy to time audio loops or combine voice effects with music. The real-time effects preview lets devs test out new settings by hearing changes before applying them. Audacity’s spectrogram view helps visualize frequencies, so clicks or unwanted noise are easy to locate and remove. Plugin support lets devs mix in a range of different audio effects. Audacity exports files in WAV, MP3, FLAC or Ogg format, and the exported files can then be imported into middleware like FMOD.

What is the purpose of video game software?

The purpose of video game software is to facilitate the design and development of every aspect of a game using the most powerful tools available. Game designers use a range of software to render and create movements, gameplay logic, graphics and sound. Game engines work with animation and graphics software, while DAWs and middleware help create immersive gameplay experiences. IDEs, AI systems, prototyping tools, and networking engines tie all the systems together to smooth out the gameplay.

AI systems included in game engines are useful for defining NPCs’ behavior, decision making and navigation patterns. Sports games use AI for referees to ensure fair gameplay, or to generate an overview of the match’s result. Combat- and strategy-focused games have automated threat assessment systems that notify players of enemy levels and give enemies automatic reactions based on player behavior.
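
A threat assessment system like the one described above can be sketched in a few lines of Python. This is only an illustration: the `Enemy` class, the scoring formula and the reaction rules are all made up for the example and don’t come from any particular engine.

```python
from dataclasses import dataclass


@dataclass
class Enemy:
    name: str
    level: int
    distance: float  # metres from the player (hypothetical unit)


def threat_score(enemy: Enemy, player_level: int) -> float:
    """Higher means more dangerous: level advantage scaled by proximity.

    The formula is an assumption made for this sketch.
    """
    level_gap = max(enemy.level - player_level, 0) + 1
    return level_gap / max(enemy.distance, 1.0)


def most_dangerous(enemies: list, player_level: int) -> Enemy:
    """Pick the enemy the player should be warned about first."""
    return max(enemies, key=lambda e: threat_score(e, player_level))


def enemy_reaction(enemy: Enemy, player_level: int, player_is_attacking: bool) -> str:
    """Choose an automatic reaction based on what the player is doing."""
    if enemy.level < player_level and player_is_attacking:
        return "flee"        # weaker enemies back off when attacked
    if enemy.distance > 10.0:
        return "approach"    # too far away to fight yet
    return "attack"


enemies = [Enemy("grunt", level=2, distance=4.0), Enemy("boss", level=9, distance=12.0)]
warning_target = most_dangerous(enemies, player_level=5)
```

Real engines usually express this kind of logic with behavior trees or state machines, but the idea is the same: score the situation, then pick a reaction.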

Game engines combine rendering, physics, logic and asset management into one environment. Devs are able to turn 3D models and textures into cinematic graphics within the game as a result. The built-in physics engines help devs create realistic movement, like falls and jumps, based on collision and gravity. Level editors are available too, so devs are able to map out object placements and lighting. Some engines come with their own scripting languages, or have built-in support for specific languages, like C++ or JavaScript.
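
To give a rough idea of what a built-in physics engine does for a jump, here’s a minimal Python sketch that applies gravity each frame and stops the character when it collides with the ground. The `Body` class, the constants and the 60 FPS timestep are assumptions for the example, not any engine’s actual API.

```python
from dataclasses import dataclass
from typing import Tuple

GRAVITY = -9.81   # m/s^2, assumed constant downward acceleration
GROUND_Y = 0.0    # height of the floor the body collides with


@dataclass
class Body:
    y: float = 0.0         # vertical position in metres
    vy: float = 0.0        # vertical velocity in m/s
    grounded: bool = True


def jump(body: Body, speed: float = 5.0) -> None:
    """Start a jump, but only while standing on the ground."""
    if body.grounded:
        body.vy = speed
        body.grounded = False


def step(body: Body, dt: float) -> None:
    """Advance one fixed timestep using semi-implicit Euler integration."""
    if body.grounded:
        return
    body.vy += GRAVITY * dt    # gravity changes velocity first
    body.y += body.vy * dt     # then velocity changes position
    if body.y <= GROUND_Y:     # collision with the floor
        body.y = GROUND_Y
        body.vy = 0.0
        body.grounded = True


def simulate_jump(speed: float = 5.0, dt: float = 1 / 60) -> Tuple[float, int]:
    """Return (peak height, number of airborne steps) for a single jump."""
    body = Body()
    jump(body, speed)
    peak, steps = 0.0, 0
    while not body.grounded:
        step(body, dt)
        peak = max(peak, body.y)
        steps += 1
    return peak, steps
```

Calling `simulate_jump()` runs one full jump and reports how high the character got and how many frames it spent in the air. An engine’s physics system does the same job for every object in the scene, with far more robust collision handling.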

Graphics and animation software helps create and animate textures, characters and environments. Assets’ core visual features are added, then the artwork is exported to engines to layer on more complicated effects. Animation software like Houdini helps create moving features like facial expressions and realistic cloth. Keyframing for animation lets devs edit movement on a frame-by-frame basis, making it easy to fine-tune. Devs then tie the movements to gameplay logic, ensuring they’re in sync with every relevant player action and game state.
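
Keyframing can be pictured as storing a value at a few chosen frames and letting the software fill in the frames between them. The Python sketch below assumes simple linear interpolation and a hypothetical `sample` helper; real animation tools offer curves and easing on top of this.

```python
from bisect import bisect_right


def sample(keyframes, frame):
    """Return the animated value at `frame`.

    `keyframes` is a list of (frame, value) pairs sorted by frame.
    Frames outside the keyed range hold the first or last value.
    """
    frames = [f for f, _ in keyframes]
    if frame <= frames[0]:
        return keyframes[0][1]
    if frame >= frames[-1]:
        return keyframes[-1][1]
    i = bisect_right(frames, frame)       # index of the next keyframe
    f0, v0 = keyframes[i - 1]
    f1, v1 = keyframes[i]
    t = (frame - f0) / (f1 - f0)          # 0.0 at f0, 1.0 at f1
    return v0 + (v1 - v0) * t             # linear blend between the two keys


# Hypothetical elbow rotation in degrees, keyed at frames 0, 10 and 20.
elbow_rotation = [(0, 0.0), (10, 90.0), (20, 45.0)]
halfway_up = sample(elbow_rotation, 5)
```

Moving a single keyframe changes every in-between frame that depends on it, which is why fine-tuning a movement this way is quick.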

DAWs and middleware allow devs to create audio effects that match what the player is seeing and doing, further deepening their immersion. Sounds are made using DAWs, then middleware forms a bridge between the audio effects and the gameplay. Middleware like Wwise connects the sounds to in-game events, signalling changes between gameplay states like exploration and combat through the music and background noises.
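
The bridge between gameplay states and music can be sketched as a small lookup that reacts to state changes. The Python below is a toy stand-in, not the Wwise or FMOD API: `MusicDirector`, the cue names and the logged actions are all hypothetical.

```python
from enum import Enum


class GameState(Enum):
    EXPLORATION = "exploration"
    COMBAT = "combat"


# Hypothetical cue names; real projects author these in the middleware tool.
MUSIC_FOR_STATE = {
    GameState.EXPLORATION: "ambient_loop",
    GameState.COMBAT: "battle_theme",
}


class MusicDirector:
    """Keeps the music cue in step with the current gameplay state."""

    def __init__(self, initial: GameState) -> None:
        self.state = initial
        self.current_cue = MUSIC_FOR_STATE[initial]
        self.log = [f"play {self.current_cue}"]   # stands in for real playback

    def on_state_changed(self, new_state: GameState) -> None:
        if new_state is self.state:
            return                                # nothing to do, avoid restarting the cue
        next_cue = MUSIC_FOR_STATE[new_state]
        self.log.append(f"crossfade {self.current_cue} -> {next_cue}")
        self.state = new_state
        self.current_cue = next_cue


director = MusicDirector(GameState.EXPLORATION)
director.on_state_changed(GameState.COMBAT)   # the game reports that a fight started
```

The game code only reports what happened, while the audio layer decides how the music should respond. That separation is the main reason studios use middleware instead of hard-coding audio calls.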

IDEs are used to test game elements, debug, and organize game projects. IDEs support a range of scripting languages, and engine integration varies based on the IDE. Most engines are able to export to the major platforms, including desktop and mobile. Prototyping tools help devs test out ideas and elements quickly.

Prototyping tools reduce risk in game development by giving devs space to test ideas and mechanics repeatedly before committing to full production. The tools don’t need separate software, as the process occurs within the game engine itself. GameMaker Studio was used to simulate combat loops in Hyper Light Drifter and help the devs catch bugs and discrepancies early.

In large multiplayer games like Fortnite, networking engines connect players with each other while ensuring smooth gameplay. Engines like Unreal come with built-in multiplayer support, while others rely on add-on tools for multiplayer features, like the open-source Mirror library for Unity. Networking tools work to reduce lag and delay while keeping all players in sync, such as ensuring one player’s battle sounds are heard on another’s device.

What game development software do professionals use?

Professionals use game engines like Unity, GDevelop and Open 3D Engine, along with scripting languages such as JavaScript, to code the gameplay logic. Engines like Unreal and Godot are geared for indie devs and studios. Visual design software like Autodesk Maya, Adobe Illustrator and Photoshop, and 3ds Max is used by professionals to render high-quality graphics. DAWs like Audacity are used with middleware such as Wwise to connect sound effects to gameplay states.

Unity, Unreal and Godot are the top three game engines, but engines like GDevelop and O3DE are used by certain studios for specific game types. The game engines used most in professional settings are detailed below.

- Unity supports both 2D and 3D game development and exports to mobile, console, desktop and VR. It includes visual scripting for non-coders and C# for advanced devs. Unity has been used in titles like Monument Valley and Hollow Knight.

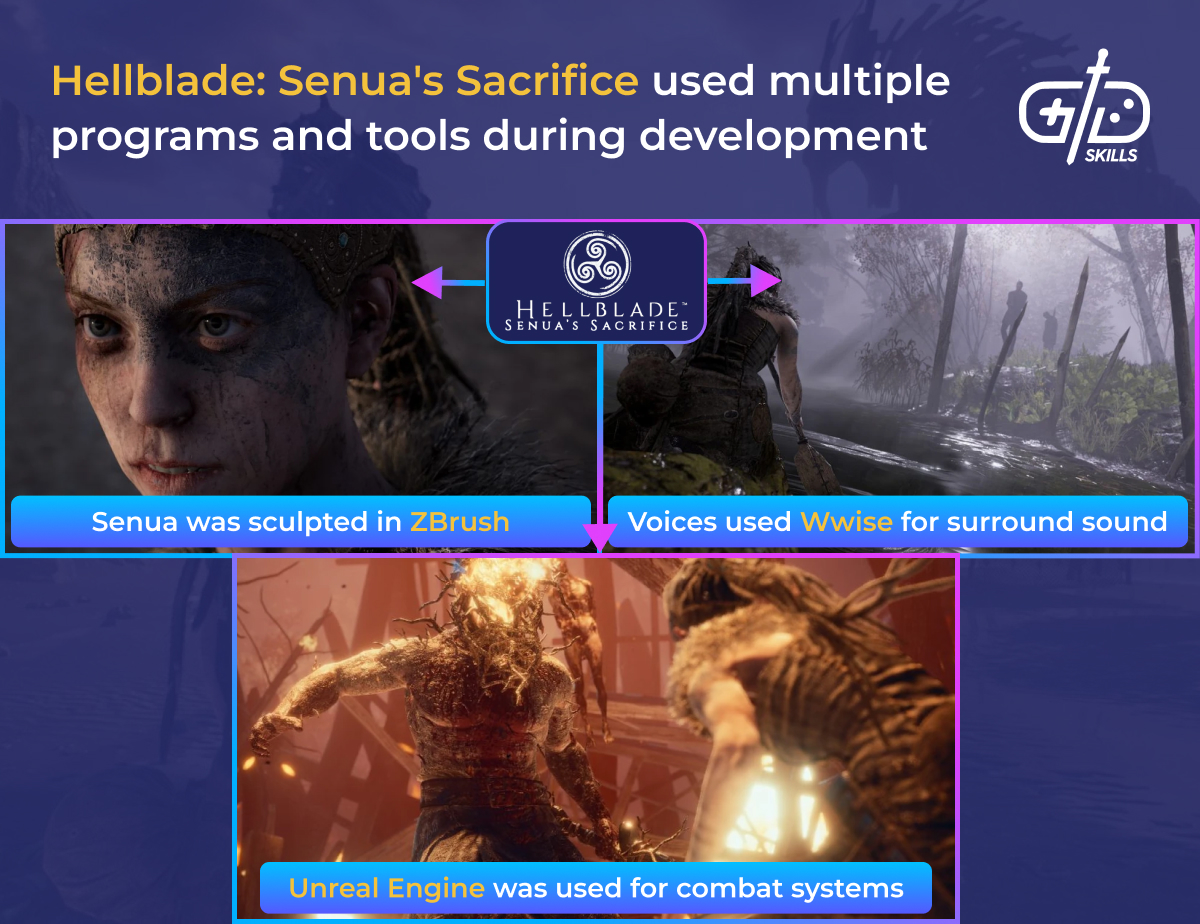

- Unreal Engine is known for photorealistic rendering and AAA-quality graphics. The engine’s beginner-friendly Blueprint visual scripting lets non-coders define gameplay logic easily. Unreal has been used to develop games like Fortnite and Hellblade.

- Godot is lightweight and open-source, ideal for indie devs and studios. It uses GDScript, and devs are able to create 2D/3D hybrid games. Godot was used for Ex-Zodiac and The Garden Path.

- GDevelop is a no-code engine for 2D games that’s ideal for testing out prototypes quickly, and for education. It’s been used in games like Hyperspace Dogfights.

- CryEngine is known for real-time rendering and demanding graphics, using C++ scripting. It’s been used in titles like the Crysis franchise and Kingdom Come: Deliverance.

- Open 3D Engine, based on CryEngine, helps devs produce games with high-quality visuals but is more modular than CryEngine. It’s been used for simulation-heavy and multiplayer games like Mad World and State of Matter.

- GameMaker Studio is a 2D-based engine with both drag-and-drop and GML scripting used to create games like Hyper Light Drifter and Undertale.

Professionals use graphics and animation tools like Blender and Autodesk Maya in tandem with game engines. Blender is an open-source modeling and animation tool, used in titles like Dead Cells, while Maya is the industry-standard tool for 3D modeling and animation. Maya has been prominently used in action- and lore-heavy games such as The Last of Us.

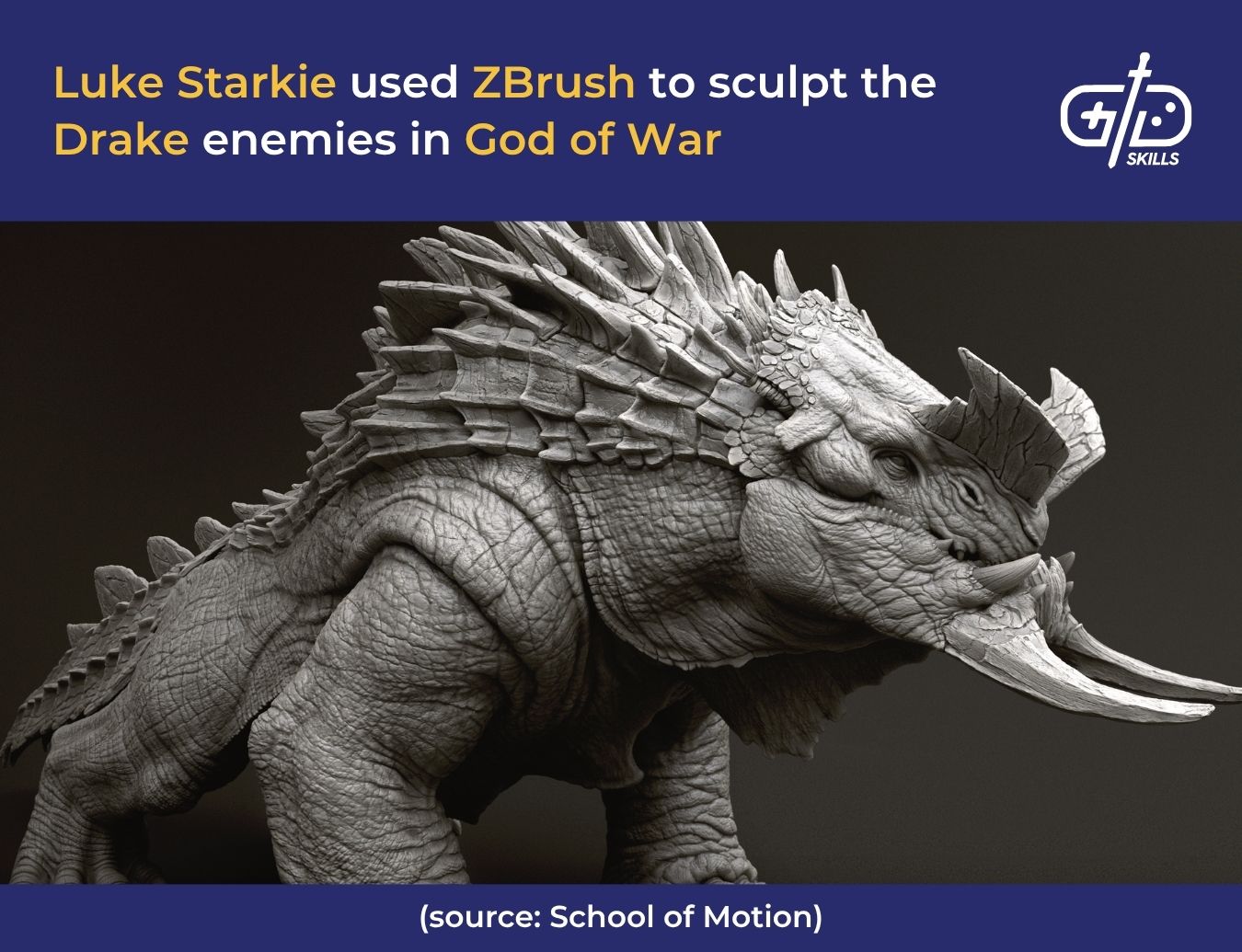

3ds Max, also by Autodesk, is an animation tool professionals use to create cinematic visuals like those in Hitman and Call of Duty. Games that lean on simulation and procedural VFX use Houdini, while ZBrush is ideal for sculpting characters in high resolution, like in God of War. Professionals don’t use the same software for all visuals. Separate tools like Adobe Photoshop and Substance Painter are used to add textures and real-time rendering effects to maximize realism.

Sounds are important for immersion, so DAWs and middleware like Wwise and FMOD are used to create audio and to make sure it syncs with player actions and game states. DAWs like Reaper are used to create professional-quality sound effects and music, while Audacity is ideal for simpler audio editing. Wwise and FMOD work similarly as middleware, but Wwise is more advanced and preferred by large studios. Wwise was used in Assassin’s Creed Shadows for adaptive music, while FMOD is preferred by indie devs.

IDEs are tools used in the game industry to organize large-scale projects and code. Visual Studio is the primary IDE for Unity, and it also has integrated support for Unreal and for multiple languages, including C++. Extensions let devs write their game scripts in VS while working with Unity assets. JetBrains Rider is another IDE that’s preferred by Unity and Unreal users for deep code analysis.

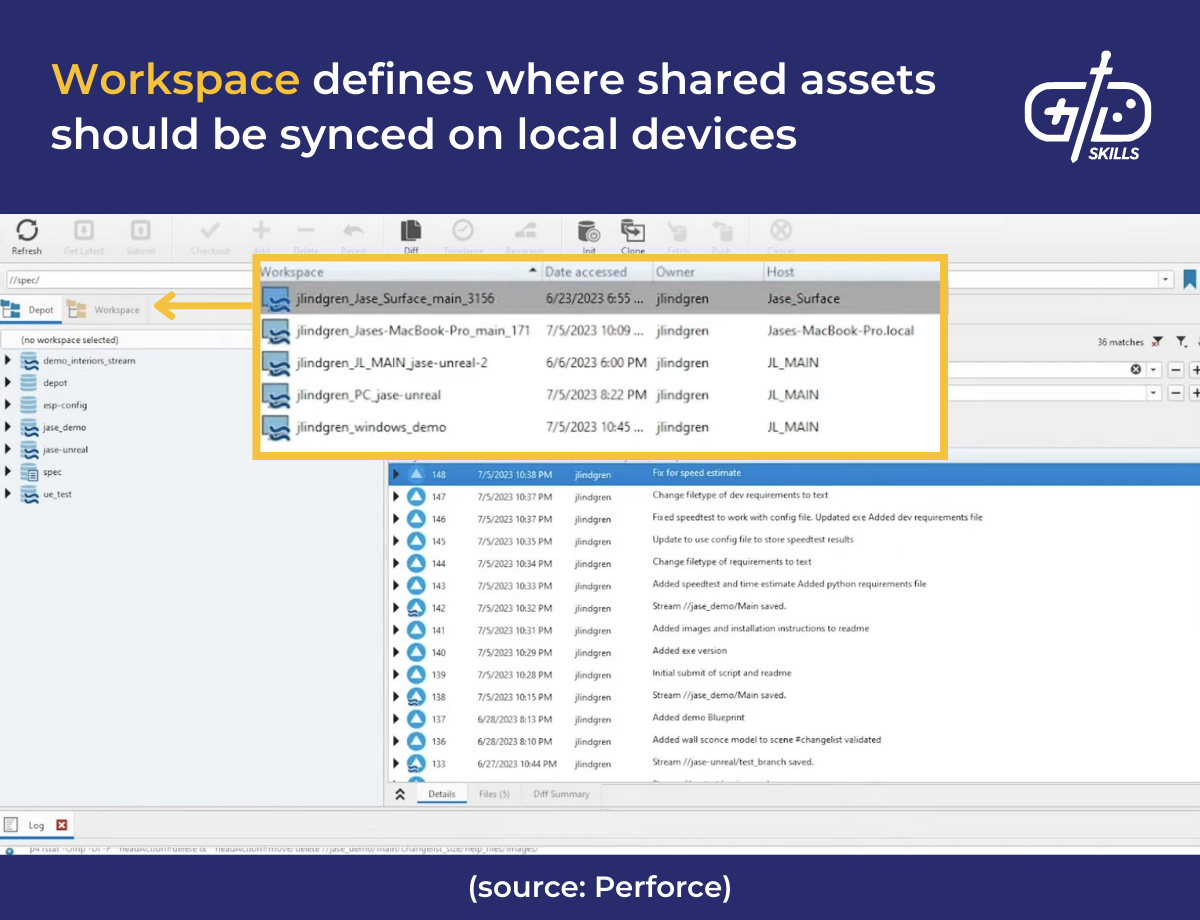

Collaborative and version control tools are important in game development to smooth out team workflows and make sure everyone is on the right track. Perforce is a version control system that’s built for large teams, so it’s been used in studios like Ubisoft and Epic Games. GitHub, on the other hand, is the go-to for indie teams and open-source projects. It also comes with source control and Git logs that show who changed what and how, which makes projects easier to manage.